Dismiss

Innovation

A platform built for AI

Unified, automated, and ready to turn data into intelligence.

Dismiss

June 16-18, Las Vegas

Pure//Accelerate® 2026

Discover how to unlock the true value of your data.

Dismiss

NVIDIA GTC San Jose 2026

Experience the Everpure difference at GTC

March 16-19 | Booth #935

San Jose McEnery Convention Center

41:33 Webinar

How Companies Are "AI-ifying" Their Business

This talk will break down how organizations are "AI-ifying" themselves by automating workflows, improving customer experiences, and making better decisions with data.

This webinar first aired on June 18, 2025

The first 5 minute(s) of our recorded Webinars are open; however, if you are enjoying them, we’ll ask for a little information to finish watching.

Click to View Transcript

Hello everyone, thanks for coming. Um, my name's Dean Shia. I'm solutions director for compute and AI for Insight. Just wanna start with, uh, I'll get my audience participation done right away, OK. So, show of hands, who's using AI today, planning AI for their business, or somewhere along the AI journey.

Anyone, anyone who's not raising their hands, come on. Everyone should be using AI today. All right. Let's pretend that everyone raised their hand. All right. Second question, whose birthday is in June? Is it your birthday today? OK.

And June and July, who is June and July birthdays? OK. Look at the show of hands. If I controlled the universe and I made it so that everyone whose birthday was in June, July had a successful AI project, that's how many people would have successful AI projects. Somewhere between, now, this is coming from a

study that was a couple of years ago, and I've seen a wide range, but I'm using this quote because it's the most dramatic. Somewhere between 83% and 92% of all AI projects fail. When deployed in the enterprise. So what I'd like to do today is just cover a few reasons why.

I'm gonna give you some strategies, some basic strategies on um how to deploy AI in your in your in an enterprise, and I'm gonna use insight as an example because we're going through our own um our own AI transformation. So I'm gonna crack the door open a little bit and just show you how, how we're doing it, so maybe you can get some takeaways from that.

So, the study in the previous slide, um, the ever quotable RAND Corporation, um, used that study and came up with their own research report that gave these five reasons for why AI projects fail. First, industry stakeholders often misunderstand or miscommunicate what problem needs to be solved using AI.

This is something that I'll talk about a little bit later because um finding the problems to solve is actually a pretty critical part of this. Second, many AI projects fail because the organization lacks the necessary data to adequately train or use an effective AI model. By now, you've probably heard it over and over.

Data is critical to AI projects. If the data is strategic to your or unique to your organization, that is your strategic advantage for AI projects. Third, in some cases, AI projects failed because the organization focuses on the shiny new thing. We want to use the latest LOM model,

DeepSeek just came out. Why don't we use that? So they focus on the technology instead of solving the problem. Fourth, organizations might not have adequate infrastructure to manage their data and deploy completed AI models. This kind of falls on the spectrum of one of the things that we go through with our customer

is doing our AI readiness assessment where we look at things like not just base infrastructure, rack power and cooling, but we also help them identify the skills that they have on staff right now and figure out how to mitigate that either by masking or. Uh, or managing certain parts of the of AI infrastructure. And then finally, in some cases, AI projects fail because the technology is applied to

problems that are too difficult for AI to solve. Now, if you listen to the AI hype machine, right, next week, we're going to have um like just general intelligence and AI will be able to do everything. It's actually not the case. um AI does much better when you give it smaller problems to work on.

Um, we'll talk a little bit about AI models and, and how to apply them. But, um, part of the problem is like we have customers that say I have this huge problem, we just want to feed it into an LOM and have it solved that for us and then we have to back up and say, OK, rewind, it doesn't actually work that way. Let's chunk this out and see what the best tool is to um to work on different parts of this

problem and come up with an effective outcome for your business. So I like to start with a little bit of level set because, I mean, there's a lot of technologists in this room, you're here at a technical conference, you're reading up on um AI all the time, but when we go out to our customers and, and talk with them, we'll talk about advanced strategy, latest models,

everything else, 80% of them, 80/20 rule, but somewhere around 80% of them say. That's too advanced for us. Can we back up? So I usually start here, right? The timeline of uh AI, as we all know, is GPT was released in 2022, and then we quickly went to multimodal GPT Agentic uh Agenic AI came out last year, and then this year,

if anyone went to Nvidia GTC physical AI is all the thing, there are robots running around everywhere. This, is this sound right for the timeline of, of AI? It's actually not. So AI started back in the mid-30s. Alan Turing,

anyone remember him, the Turing test that um that he talked about way back in the, in the 50s and when we get machines to think this might be the way that we determine that they're actually thinking and reasoning. The first, um, the foundations for math that all the AI is built on today started back in, in the 30s, developed through the 50s where um AI

was established as uh an academic discipline in Princeton Labs. And then things kind of started, you know, AI's kind of been in fits and starts since then, right? There's a lot of math, a lot of research, um, inventions like neural networks, um things like that, that had to, we had to go through the process of, I mean,

I, I remember hearing about AI research in the 90s. The biggest problem back then was We didn't have hardware to run it on, right? So 1997 IBM's Deep Blue defeated Garry Kasparov, first time that uh uh chess grandmaster had been defeated by a machine, and that was.

Pretty much a rack full of equipment that was dedicated to one thing, playing chess. Um, but things quickly progressed in the uh early 2010s, then, uh, AlphaGo came out and started, you know, Go is a much more uh complex game, uh, moves wise than chess, and AlphaGo was one of the early, um, self-learning models,

so it was fed in some initial information on gameplay and how to, how to play the game of Go. And then started, it started playing itself, right, and figuring out new novel ways to play the game of go to the point where Goal Masters looked at some of the moves that um that it came up with, and they, you know, no human had ever thought of what the machine came up with.

That yeah, 6697. Technology, mostly, um, well, two reasons, right? Technology and money. Um, a lot of the research is carried out in academic labs back in that time period and then once, like, you know, 20, like early 2010s, then there started to be to be

more commercial um applications for it. So then money started flowing in. And then somewhere around 2022, when Chat GPT was released, just a little bit more. We realized that there is a technology out there that was really good at um linear equations and multivariable calculus. The GPU.

That unlocked the innovations that we've seen from 2022 until today. One thing I think like this is probably one of the most important slides that that I've created, because today, AI gets associated with LOMs. I mean, to most people, and I mean it makes sense, right? LOMs were really the first time that anyone could talk to a machine and get a response that

made sense. We were, we had AI running like, you know, there's been machine learning applications running in the background since really before the year 2000. But GBT is when it sort of got out to the masses and you know, people could interact with something that could help them and,

and do things for them. But really, we've got 4-ish main buckets of uh AI that are out there and still use today. The first one we kind of call classical AI, right? This is early neural networks, um, symbolic or rule-based AI.

So this was mostly a rules-based system. If this, do that. I mean, those of us that did 4 next loops programming years ago and, and, you know, how those can kind of expand, um, very fixed, right? You do, you, you program logic into a machine. To do very specific tasks.

So early applications of classical AI were really things like production lines where you know you needed um you had a set of inputs and then based on the conditions, something would happen on a set of outputs, but the same thing would happen every time. But it was bigger than just, you know, writing a program to do for next. It it could handle um multiple inputs and create multiple uh multiple effects,

multiple outputs. Then machine learning came and this is when we started getting into training models, kind of the, um, you know, the training and learning models, kind of like when we're talking about um AlphaGo um and Deep Blue. These are machines that can learn, but.

Um, the methods and algorithms that it uses are still very, um, deterministic. So for a given set of inputs, you'll get an expected set of outputs. The machine can learn but the rules are an open book. We know how, you know, what the machines are doing and what conditions it's looking at and

what the results will be. That'll be important in a minute. Generative AI now is um using things. I mean, GPT is generative pre-trained transformers, right? We train a model, it's an algorithmic algorithmic model that's using tokens inside of

it and a new set of mathematical models that will help it create a predicted result. So, um, just real quick, I'll touch on agentic AI but agentic AI is now AI that can do things for you. So again, based on a set of conditions, I see a thing, I see a,

you know, a certain set of conditions happen, that's going to trigger an event or trigger um another AI you can layer them on and have them, you know, do multiple workflows. But the big difference here and the, the thing that I want to um just talk about real quick is the difference between machine learning and generative AI.

Like I said, generative AI is predictive, so, um, there is variability in the output based on the conditions that you give it, if you run those same conditions 10 times, you could get 5 or 6 different outputs from it, which is fine for things like um language models, right? If, uh, I mean, GPT would be boring if it, you know, responded the same way to me every time.

As long as the information that it gives me is within an acceptable set of parameters. But what about industries that are regulated or um functions that we need AI to do that require a specific set of outputs. So um like one thing that LLMs are very good at is taking uh uh documents, so like a 10K or SEC filings or things like that, taking those and summarizing them and

providing an output that's easily readable. But if you use the same LOM to like say you're a um uh investment fund and you want to use an LLM to uh evaluate some companies that you want to invest in. You're, you really have to go and check the math, right? Because I don't know if any of you have ever

had GPT do a math equation for you, but it's not good. Like when I talk about variable results, you know, GPT is kind of doing math at a 3rd or 4th grade level right now. Give it advanced equations and it, it kind of falls down. But that's where you can still use machine learning, right?

Because you can use it to scan documents. Look at keywords and highlight key sections, which may be all you need. You might not need the text output from an LLM to to get to the solution that you want. So Like I said, I just like to spend some time here so that we level set and, and talk about what, you know, like the range of solutions that are out there.

Unfortunately, what happens is, um, I hope there aren't any CIOs in the room, but CIOs, well, and there aren't airplane magazines anymore, but, um, you know, CIO sees something on substack or want to use the bright shiny object and say, let's use this tool to solve all of our problems, right? Invariably that's, that's, uh, I mean,

we see this over and over. So now just a couple of general concepts, right? This AI implementation cycle should not be unfamiliar to anyone, what's it look like? Software development cycle, right? You assess what you have.

Design, um, the, uh, program to solve it, implement it, and then learn from it, assess and then iterate on it. From, uh, sort of a, you know, high level conceptual, uh, perspective, AI is not much different. Create a create a tool to solve a problem, see how it does,

and then see how you can make it better the next time. The things that you use are different, right? We're inserting LOMs, we're in inserting machine learning models, we're using different methods to solve the problem, but at the end of the day, we're solving business problems. That's, that's what we're here to do.

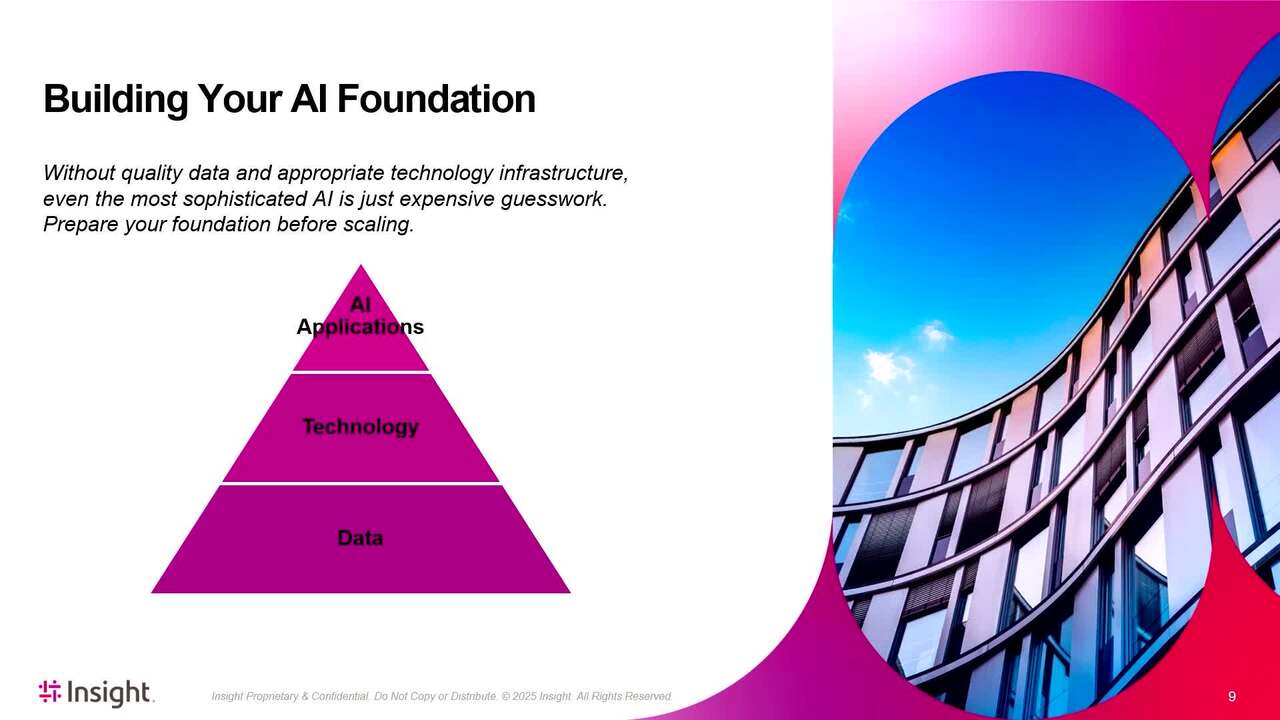

And especially today like no company is going to invest in AI unless it does one of two things, save them money or make them money. So, um, you know, but this is just, you know, high level, we, we kind of go through these four phases, they're more or less universal. The foundation that you build AI solutions on then, um,

I kind of mentioned it before, data is really the key. um. Companies have ah. Vast stores of data that is unique to them and data source does not have to be data in a database, it can be documents in a file share it can be a series of emails I like to use uh I was talking with uh one of my peers and um we were talking about how do we,

uh, how can we get to somewhere where we can ask questions about what insight does for their network practice. And uh my, uh, Mark, my, my peer said, I really like the emails that um John, who ran our network practice sent out every 234 weeks about, you know, here's what's happening in the world of our network practice,

here are the things that we do, here are the partners that we work with. John is a. Uh, content creating monster, so like these emails were, you know, this, this long, but that was a treasure trove of, of data, and I, I'm like, that's a data source. What if we just took John Brooks' emails.

PDF them, throw them into a vector database, and we have a queryable data source that we can use to get all the information that we need and get that out to our sellers, our solution architects, or whatever. It's a, you know, a quick hit again solving a very small problem, but, you know, figuring out what the data source is and then putting the right tool to it

to get it out to, uh, get it out to everyone. Now, that said, um, most enterprise data sources are a mess. Um, I'll talk a little bit about that in a little bit about what, what we're going through, but, um, if If you work for, um, you know, a company the size of Insight who deals with hundreds or thousands of vendor

partners, um, has acquired tens of companies over the period of 20 years, has absorbed business processes from those acquisitions, and has absorbed all of the legacy data from those companies into SharePoint file shares, you know, whatever. Your data is um not in an easily consumable space, but we're not gonna talk much

about how to get past that today, but we'll uh we'll talk about it a little bit. Technology is where, um, I mean, this is where we, we build applications for it, right? And the way that we like to think about it is When you're making a platform choice. Pick a platform that's more or less universal.

I mean, lock in has been a phrase for years, but like a lot of companies that we work with will start in cloud and nothing against them like there's great tools in GCP Azure and and AWS. There's great native um uh uh AI stacks, development stacks that they can use, great tools that are, that are available to any developer.

But then, what if you get to the point where you find a use case and it requires heavy inferncing on the back end, and all of a sudden you're getting charged by the token or charged by um GPUs being active. There's 2 things that are most expensive to run in cloud from an infrastructure perspective, primary storage and GPUs.

Hyperscalars are soaking up most of the latest generation uh GPUs, and they are not cheap, right? It's not cheap to acquire them and it's not cheap to to run them. Now most customers don't need the latest and greatest, but when you look at, um, you know, kind of like if you're getting charged by the token today,

if you can bring that workload on premises and sort of run it through an AI factory that you have on premises, those tokens get cheaper and cheaper as you um. Uh, as you use that infrastructure, so just some considerations on the, on the technology side. And then on the application side again, you know, pick an application stack that can

develop across platforms. Um, a lot of the AI applications that are developed are modular, so they're not tied to an LLM or not tied to a certain, uh, certain workflow. You can pull an LLM and replace it and, you know, even if you're using rag or something to augment it,

it still works fine, it's designed to. Um, but the application side again, that's where, that's where we really solve the, you know, solve the business problems that we've been talking about. So now let's talk a little bit about what um Insight is doing. So We were caught in an interesting paradox.

We have helped hundreds, hundreds of customers start their AI journey and deploy applications. Our internal AI use was. Let's just say not good, um, rare at best. So, uh, beginning of this year, our Joyce Mullen, our CEO, um, said, There's an imbalance in the force more or less,

right? We can't honestly go to our customers and tell them to use AI and show them how to use AI if we're not doing it ourselves. So we set the big hairy audacious goal to become the most AI transformed organization in North America. And um I mean, you know, the why this, why now, like we needed to transform as an enterprise, we needed to become more efficient.

We have business processes like, you know, what I described before, we are a product of acquisitions, we've inherited business processes. You, you know how it goes. Business processes get stitched together to make things work. So that's leading to, you know, you can identify a lot of inefficiency there.

But I mean, you know, just flat out, AI is the most significant innovation in human history, and Even though on that timeline, you know, there's 70 years of research into it at this point, the acceleration that's happened in the last 5 years is just incredible. Like we're just getting started on what the capabilities of, of AI AI are going to be.

But as a company, if we don't transform, we might as well go out of business. So, Also, just to give you an idea of the scale of insight in case you don't know us, um, Fortune 500 solutions in a great $9 billion annual revenue, um, and operations around, um, around the globe. So that's kind of the, the scale that we're talking about,

um, all up probably 120 to 13,000 employees around the world. So that's, you know, that's kind of the, the scale that we're starting from. So where do we start? Um, we started, number one, this was an initiative that came down from our board of directors through, uh, Joyce, our CEO and then we um uh moved

in one of our technologists, Stan Le Quinn, who is now our CTO of AI Transformation. To get executive level buy in and I mean it just kind of shows like the importance of this to our business. There is someone that is in the C-suite that is in charge of transforming our business, so it it makes a difference in influence and it makes a difference in um uh urgency that this is something that we're

tracking and, and we need it to get done. So, Our first real big splash though was starting an AI center of excellence. So what's the center of excellence? Well, it's a, it's a few things. Think of it as a team, but where the COE is really there to support AI throughout the

business across all of our business units doesn't matter if you're one of our customer facing units or an internal function like um HR finance. The COE spans across all of them and takes input from all of them. On, uh, on what we're doing with AI and what they and what they are doing with AI. So 7 critical functions were identified, finance, marketing,

legal, HR, global business solutions, growth enablement, AI guild, I'll get to that in a second, and our AI champion network. I'll get to that next. So AI champions, um, Are a way for us to get input from the BU. The AI champion is not a separate job.

It's a designation of someone that's already working in the BU and is there to act as a filter. They filter into the COE on what they're hearing from the people in their BU. And how we can use AI and then they're filtering down into the BU. There's initiatives that are driven from the COE that the um the BU will participate in and

the AI champions are um more or less responsible for distributing that out into the BUs. So, um, generally speaking across the functions, well, I should take that back, across all of the Bs and insight. There's around 2 in each function, so finance, marketing, HR, they all have a pair of um our, our infrastructure solution line where I sit,

we all have uh generally one or two AI champions in them. Um, We also have an AI guild, right? Insight has internally deep technical expertise. Now it's a little bit strange because most of the technologists that we have are customer facing. I mean, that's how we make our money,

right? solving solutions for our customers. So this kind of goes back to the eating your own dog food argument, right? We're more, we're more concerned with how we can help our customers then how we can help ourselves most of the time. But the AI guild are our technical experts that are drawn from around the company.

There's around 10 of us, um, I'm one of them, but when we go to make the technical decisions on platform, application stack, where we're going to run AI and just how we need to do things like figuring out where data sources are and, and things like that, um, that's where, where we come into play. And then, uh, supporting functions, I'll kind of talk through those as we're going through,

but as we go through a project execution, um, socializing some of the things that we're doing with, with AI, um, communications comes both internal and external data access architecture, those are sort of, you know, spot functions that we're pulling in kind of matrixing people in as, as, as uh as needed. So, one of the first initiatives, this happened um this past spring is the AI champions

across all of the BUs were um their, their first task was to create a survey and bring that into the business. Tell you why in a second, but the components of the survey were this. We did a pre um pre-workshop inventory, um, that's of like tools, software, you know, things that the BU does to uh get their jobs done.

Um, so we looked at, oops, uh, so we looked at business structure and leadership, strategic priorities of the, of the BU, what are they trying to do and trying to solve? Operational challenges of the BU. What are you doing today that is causing you the most pain, right? And like top 5 things from HBU we need them

listed out. The technology ecosystem of the plat the internal platforms that they use, the data landscape, which is where things get really complicated. Um, an AI readiness assessment, this is more of like, um, uh, how ready are you from a skills perspective to use AI, um, and then previous or current AI automation initiatives.

Now this was kind of interesting because like I think this happens with, we see this happen with a lot of our customers too. There'll be like, I remember when like uh um Shadow Cloud was, you know, a big thing a few years ago, like there's Shadow AI too. So, you know, I mean, it's easy to, you know, plug your credit card into cloud or chat GPT or

Perplexity and, you know, get their pro package and kind of start doing stuff and you know, you can pay for a private instance of chat GPT on your own and start building business applications, but without enterprise support. That becomes like a one person solution, right? Someone's using to improve their own job, which isn't a bad thing in itself, but it doesn't scale.

But those tend to like also come in fits and starts where they, you know, they'll start. Person that's using it gets busy, moves on to another role, and then they just sort of cycle out. Um, and then we started collecting initial use case ideas. Mostly with the purpose of finding commonality across the business units.

So like, um, a couple of ones that we identified early on were uh scope of work generator, right? We have um. Different solution lines that do different things, but. We both do the same thing and that we provide scope of work to our customers. So what if we came up with a universal tool or looked at a,

I mean there are there are ISP, there are software packages out there that will do the same thing, but what if we made that comment across the business and started looking for those use cases that we could get to. So what that did, those workshops led to a big set of inputs, and then we needed to do something with those inputs, which leads to the execution model.

Now, when we work with our customers and we started doing this internally, we tend to look at it at 3 levels, right? Category one is BU led quick wins. These are people developing things on their own, kind of like the same scenario that I mentioned a minute ago, but this time with support from the business and recognition from the business that you did

a thing that's adding to the efficiency of, of, you know, your job function. And uh I'll get to this in a minute too, but like. Those quick wins are things that we track. We ask for, we ask people to celebrate them and we track those, those wins,

which I'll show you in a second. Um, category 2 is cross-functional projects, right? And this can be. Um, the thing is like this, as we go down this pyramid, these things become more and more complex. So early cross functional projects like we're

getting into the category twos now, but this would be things with that can span a couple of um business units or solution lines. But with maybe one or two data sources, right? Trying to keep the complexity down by limiting the data sources, but we're still finding a lot of functions that, um that will span cross solution lines and be able to provide solutions there.

Category 3 is where things really get sticky. We don't think we're going to get to any category 3s until probably 6 months from today. Um, the reason for that is mostly the data sources that we have. They are all over the company. There's been some consolidation in the past, but until we get a handle on our data and get

to a scenario where we have like a, a data lake or data warehouse in place. Which we don't, I'm sorry, but um, until we get to that scenario where we can easily access, combine or get that data into a form where it's usable, the category 3, enterprise strategic initiatives are not going to, um, not gonna happen. Now that's not say we're,

that's not saying we're not working towards them, right? These are still being driven by the business, but they're being tracked and, you know, plan tracked and we're doing like regular updates on their execution. So I talked about tracking the um. The uh success stories we have an internal uh web page where users can submit their success

stories and are encouraged to do so. So this is an example of a um an early one that was um that was sent out there anonymized for public consumption, but our director of marketing portfolio content and digital experience, this was all, I mean like stuff that GPT is great at, right? Taking content.

Um, summarizing it, but then was able to use co-pilot studio to automate that work function. So key sentence is right there, used to take them 40 minutes, now 5, right? We see transformative stuff like that in our customers, we've helped them do that and we haven't done it on our own. Another example, um RFP response.

We've built that probably 5 times for our customers and we've heard them say, you know, we've gone from, it took us 2 weeks to get a team together to uh respond to one RFP. And for many of them, I'm sort of consolidating 5 into 1 example, right? But just with an initial uh initial pilot, we were able to get an RFP response to

80% accuracy, and it took 2 days. So that was huge for their um for their business, but I mean, they have to add a little bit of time in the end, right, because there's a predictive model that is doing this, so you want to make sure that the output is accurate and that you're not just sending an

RFP response off into space that says it's all free, you know, but. Um, the other thing that we do is we have a leader board. Embarrassingly, I sit in the infrastructure solution line and HR and finance, finance are kicking our ass right now. So, um, but this is like.

Social proof of what you can do once you sort of unleash AI and the enterprise and encourage people to, to um use it and look for use cases. This happens, I mean, look at the total number of use cases that we've generated, this is in 2 months. And these are going up every day, this is a snapshot of a of a live leader board.

Um, so, but, you know, this is an example of how AI can uh transform an organization, not just from like a time saving and, you know, time saving, cost saving, everything else, but this is transformative for our company and how we're using it, encouraging others to use it and look for ways to um to improve things. Um, All of this is still like.

Part of the function of the um AI Center of Excellence is to provide these things too, right? And I don't wanna. I, I think the biggest problem that when we hear about AI or I should say when you hear about AI and we talk about AI, we make it sound very easy, right? I, I go to Nvidia, talk with Nvidia a lot.

When Nvidia talks to customers, it's like, yeah, no problem, just, you know, set up NVAIE fire up your Nims, and you're solving problems. And then we have to be the Debbie Downers and say, well, OK, wait a second. Do you, this is all running on containers, do you have those skills today?

Can you manage that environment? Have you thought about governance for any of this? Are you in a regulated industry and you know, like what, what are the what regulations are the output subject to? Do you have compliance concerns? How are you going to secure this if it's public facing? Cause this can, you know, if it's public facing,

you can do prompt injections another uh another attack vector can be another attack vector for uh for businesses. And then how do I set up guardrails so that, you know, 99.9% of people that use it. No problem, but you know, there's gonna be one, the, the one person that is trying to do

something nefarious. So how do you, how do you protect against that? These are all functions of the, of the COE that I, I, I don't want to make it seem like we're taking it lightly, like this was all, you know, policies were put in place, guardrails were put in place.

That was all done in advance before we unleashed it um on the company. So, um, closing minutes, I just want to hit on a couple of things. I mean, this is reinforcing a little bit of what we talked about, um, you know, we, I think we kind of saw 92% when I had you raise hands before, um, you know, a lot of people are, are using AI, um, 74%,

like this is an interesting part of the AI landscape, right? When we talk about AI a lot, it's in the context of custom solutions for the company, but there are also ISB solutions that are more intended to be like a managed service where we will drop infrastructure into place whether it's um for video analytics, um, manufacturing, you know, like there's applications across a range of uh vertical

industries, but those are more geared towards like I have one thing that I want to solve today. And I don't wanna have to, like, I don't want to run everything else to have to do this. So it's essentially dropping a box to solve that one problem. Those are ISP solutions.

Um, but like what's heartening and like I was on a right before this, I was on a CIO for um forum talking about scalable AI and um it seems like the AI center of excellence concept has really taken hold, like a couple of people will call them, you know, a little different things, but Um, the center of excellence concept and having that organization that is there to drive

AI in the business, um, is really important. It's like the first step. So to see that at 62% today, actually a year ago, this was 2024, um, but, uh, to see that in place today I think is, you know, like this is getting people on, on the right track. And then I'll just click hit on this is the methodology that that we use when we work with

customers so we it's called radius devho model, but the radius part of it is um more like doing the work up front, right? Kind of what we talked about with our internal transformation where we need input on what problems are we trying to solve, what platforms do we have to work with, and what people skills do we have that we can, that we can leverage.

So, we need to figure that out with our customers up front, and I mean, in some, in some cases, it's discovery for them too because they just don't know. Um, then there's an implementation phase that's like the initial, you know, radius kind of leads to a point where you can do a pilot and then implementation is where the rubber meets the road and you really,

um, start putting the stuff into production and measuring results. But then kind of going back to the cycle that I showed, um, way back at the beginning. Uh, the dev shop is like a flexible development model where we can, um, flex in, uh, whether it's developers, architects, um,

BU specialists, we can flex people into a team that can keep iterating through with customers and finding more use cases and doing more with, with AI. So this is kind of how we, um, how we work when we work with customers. So that's it. I've got 3 minutes left.

Be happy to take any questions or or discussion.

We Also Recommend...

Personalize for Me