
41:56 Webinar

2023 Is the “Year of the vVols”!

VMware virtual volumes (vVols) are intended to simplify storage management for virtual environments. vVols provide flexible, granular storage management at the VM and/or volume level.

This webinar first aired on June 14, 2023


Testing. Perfect. All right. We have the placeholder slide up for the recording. So, is 2023 the year of vVols? We kind of hinted at it. Really, why are we still talking about vVols in 2023 when they've been around since 2015? It's been a pretty long time, and there are a lot of really great reasons. If you haven't met Tristan or me, we're two of the VMware specialists focused on platforms. I'm the global practice lead focused on solutions and strategy, and Tristan is a field solutions architect focused on platforms: VMware, Cloud Block Store, et cetera, all that stuff.

So, the intros, right? We just did that. Then the vVols primer: this part will be a little bit of the same as what we covered in the first session, but we're gonna dive deeper and really talk about a lot more of what we're actually doing there.

So, as a primer: if we think about the early days of storage, storage was, I would say, good to some degree. You had a bunch of physical servers that you bound to individual volumes, and things worked. Then virtualization came along and challenged everything, because you had a lot of really great insights at a per-volume level, and then virtualization came in.

Now you have this abstracted storage layer connected to a whole bunch of compute, with a whole bunch of virtual stuff underneath. It consolidated the good things, but you gave up a lot of the granular features that those physical volumes brought. So vVols are kind of a solution to a problem that VMware themselves created, right? If we think about datastore-granular services (snapshots, replication, QoS, SafeMode), when all of that is bound to a volume, the VMs have no concept of what is actually happening there. It's all done at the storage layer. It's a legacy way of doing things. You also had the performance impact: how big do I size that datastore?

How many VMs do I put on it? How do I attach this to that? There were a lot of choices you had to make. There was also a lack of visibility. Say you have a VMware admin and a storage admin, and the storage admin says, "Hey, we're getting full on our array, so let's change that old policy to 30 days of snapshots instead of 60," right? The VMware admin is never gonna know. There's really not a lot of information there. And when you're troubleshooting performance issues, you didn't get much insight into those particular environments. vVols solve all of those challenges and more.

And there will be a quiz at the end, so please write this down. This is VMware's definition of vVols, right? You don't need to know the entire thing, but I want to highlight the few words that are bolded: integrated, virtualized, efficient for your virtualized environments, and optimized.

Those are all the things from the early days of storage that we're now bringing into the future. There are four main types of vVols. There's a configuration vVol, which is formatted with VMFS; that's where your logs and your VMX file live.

There's a data vVol, created one per virtual disk. There's a swap vVol, which is created on power-on and removed on power-off, and there are a lot of changes to that in vSphere 8.0 Update 1. And then there's a memory vVol, depending on what you're actually doing with that virtual machine. So what actually is a virtual volume at heart?

It's a lot like raw device mapping, right? I'm gonna say it that way instead of saying RDM, because RDMs make us all kind of cry inside as vAdmins. They're complicated to manage; they were difficult. But the first word in that definition was integrated. So think of an RDM that you treat like a VMDK. When you want a disk, you say "give me a disk on this array," and it magically shows up, which means there's no more pre-provisioning. You don't have to say, "Give me a 20-terabyte datastore, I'm gonna fill it up eventually," right? You take what you need right now and grow as you need it.
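To make the four vVol types described above concrete, here is a minimal Python sketch of which vVols a single VM would have on the array. It's a simplified model under our own assumptions: the names `VVolType`, `VirtualMachine`, `vvols_for`, and the `memory_snapshots` counter are hypothetical, not part of any VMware or Pure API, and real swap and memory vVol behavior varies by vSphere version.

```python
from dataclasses import dataclass
from enum import Enum


class VVolType(Enum):
    CONFIG = "config"  # small VMFS-formatted volume holding the .vmx file and logs
    DATA = "data"      # one per virtual disk
    SWAP = "swap"      # exists only while the VM is powered on
    MEMORY = "memory"  # depends on what you do with the VM (e.g. a snapshot that captures memory)


@dataclass
class VirtualMachine:
    name: str
    disk_count: int
    powered_on: bool = False
    memory_snapshots: int = 0  # hypothetical: snapshots that include memory state


def vvols_for(vm: VirtualMachine) -> list[VVolType]:
    """Return the vVols this simplified model expects the array to hold for one VM."""
    vols = [VVolType.CONFIG]
    vols += [VVolType.DATA] * vm.disk_count
    if vm.powered_on:
        vols.append(VVolType.SWAP)  # created on power-on, removed on power-off
    vols += [VVolType.MEMORY] * vm.memory_snapshots
    return vols


db = VirtualMachine("sql01", disk_count=4)
print(len(vvols_for(db)))  # 5: one config vVol plus four data vVols
db.powered_on = True
print(len(vvols_for(db)))  # 6: powering on adds a swap vVol
```

The point of the model is that each vVol is its own array volume, which is why the per-disk services discussed in this session become possible.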

We're gonna talk about data mobility. How do you take a workload that's sitting on VMware and maybe move it to Hyper-V, to a physical server, or to the public cloud? If it's on a VMFS volume, it's VMware-formatted. You can't take that to Linux or Windows and just hydrate that data with the guest OS reading it natively.

You can do a lot of really cool stuff, especially snapshot refreshes. We're definitely going to talk about storage policy-based management (SPBM), right? That's one of the things Tristan is gonna highlight, where vVols really excel, along with integrated replication management. I think we have a demo on that one, right? That's a key thing. And then my favorite thing is in-guest UNMAP and storage provisioning. The number one question we get asked is: how should I provision my volumes? Thin, thick, eager-zeroed thick? There are a lot of choices. With vVols, we say both. If you want capacity, you go thin.

If you want performance, you go thick or raw. With vVols, they're physical volumes, so you get all the performance, but they're thin-provisioned, so you also get in-guest UNMAP. You get a whole lot of benefits, and we see customers get better data reduction, because if they're using thick-provisioned VMDKs for performance with, say, a database application that's reading and writing all those blocks, those blocks never get unmapped. As soon as you move to vVols, all of those in-guest blocks get unmapped, and you free up all that wasted, stranded capacity that you traditionally had no visibility into. Do you wanna cover this one? Sure.
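A toy model of why thick-provisioned VMDKs strand capacity while vVols reclaim it. It assumes, as described in the talk, that in-guest UNMAP from a thick VMDK never reaches the array while on a vVol it does; the function name and block counts are illustrative only.

```python
def array_allocated_blocks(written: set[int], deleted_in_guest: set[int], unmap_reaches_array: bool) -> int:
    """Count blocks the array still holds after the guest deletes data.

    Toy model: the array frees a block only when an UNMAP for it arrives.
    """
    allocated = set(written)
    if unmap_reaches_array:
        allocated -= deleted_in_guest
    return len(allocated)


written = set(range(1000))  # the database wrote 1,000 blocks
deleted = set(range(600))   # then freed 600 of them inside the guest

thick_vmdk = array_allocated_blocks(written, deleted, unmap_reaches_array=False)
vvol = array_allocated_blocks(written, deleted, unmap_reaches_array=True)
print(thick_vmdk, vvol, thick_vmdk - vvol)  # 1000 400 600
```

The difference (600 blocks here) is the stranded capacity the array keeps holding without UNMAP.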

So the key concept in vVols is that you're now dealing with a storage provider. In the case of a FlashArray, the array makes itself known: "Hey, I'm available for service. Here's my model number, here's my serial number, here's my name. Do you need quality of service?"

We're advertising capabilities at the storage provider layer, almost marketing data services to vSphere, so that you can create intelligent policies that meet business requirements. In a lot of cases, it could be quality of service: setting an upper throughput or IOPS limit not at a volume level but at an actual vVol level, and then enforcing that for the life of the workload. Or you might want to require a workload to adhere to a policy that involves snapshots or replicated snapshots. Mechanically, when you look under the hood at the different types of vVols and the proliferation of volumes on a FlashArray, it could make you pull your hair out, but that would happen to you.

Yeah, pretty much. I've got terrible jokes, sorry. The key thing is you can create these policies in vSphere and then apply them en masse across hundreds of virtual machines. Then, on a regular basis, vSphere checks for policy compliance. So if somebody makes a mistake on initial placement, or someone turns off replication, you'll get compliance alerts. You can trap on those, maybe open ServiceNow tickets, maybe issue beatdowns, but you can correct the situation and make sure you're adhering to your RPO and RTO targets, for example. We can also do interesting things with tags: we can target by tag, but we can also assign tags based on a VM or virtual disk that's affiliated with an SPBM policy.

I think this is a common refrain, a common story we talked about the other day in the breakout session. Typically an application owner or vAdmin has a conversation with a storage person, and the storage person looks at their sea of arrays or systems and has to make a decision. Maybe there's a tool in use, maybe you've got Turbonomic or something like that, but you have to look at performance and capacity. Or maybe it's an app owner or vAdmin who knows exactly what performance and capacity they require; still, as a storage provider, you have to actually go out and choose. With vVols it's a slightly different scenario, because technically the vAdmin could provision a virtual machine, maybe from a template, and drop that virtual machine's virtual disks into a storage container, a storage bucket. So breaking down the silo between vAdmins and storage admins is kind of a thing when you adopt vVols. It changes not only the org structure but the way you have conversations and provide services. I'll talk a little about the service mindset, the cloud service mindset, because I think vVols are kind of an enabler for us. You just tap in whenever you're ready to take over.
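The policy-compliance loop described in this segment can be sketched in a few lines of Python. This is not the SPBM API: the policy fields (`replication_required`, `min_snapshot_retention_days`) and the function names are hypothetical, chosen to mirror the examples in the talk (someone turning off replication, or a storage admin cutting snapshot retention from 60 days to 30).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class StoragePolicy:
    # Hypothetical rule set; real SPBM rules are built from capabilities the VASA provider advertises.
    name: str
    replication_required: bool
    min_snapshot_retention_days: int


@dataclass
class VmStorageState:
    vm: str
    replicated: bool
    snapshot_retention_days: Optional[int]  # None means no snapshot schedule at all


def compliance_issues(policy: StoragePolicy, state: VmStorageState) -> list[str]:
    """List the ways one VM's actual storage configuration deviates from its policy."""
    issues = []
    if policy.replication_required and not state.replicated:
        issues.append("replication disabled")
    retention = state.snapshot_retention_days
    if retention is None or retention < policy.min_snapshot_retention_days:
        issues.append("snapshot retention below policy")
    return issues


def compliance_report(policy: StoragePolicy, fleet: list[VmStorageState]) -> dict[str, list[str]]:
    """One periodic pass across many VMs: return only the non-compliant ones."""
    report = {}
    for state in fleet:
        issues = compliance_issues(policy, state)
        if issues:
            report[state.vm] = issues
    return report


gold = StoragePolicy("gold", replication_required=True, min_snapshot_retention_days=60)
fleet = [
    VmStorageState("app01", replicated=True, snapshot_retention_days=60),
    VmStorageState("app02", replicated=False, snapshot_retention_days=60),  # replication turned off
    VmStorageState("app03", replicated=True, snapshot_retention_days=30),   # retention cut from 60 to 30 days
]
print(compliance_report(gold, fleet))
# {'app02': ['replication disabled'], 'app03': ['snapshot retention below policy']}
```

A report like this is what you would trap on to open tickets, rather than relying on the storage admin and the vAdmin talking to each other.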

I'll hand it over on the next one. All right. So when you deploy vVols, every snapshot is an orchestrated, hardware-based snapshot on Pure. That means it's space-efficient, it's fast, it's extensible, it's portable; it's all good things.

There's nothing wrong with a managed vSphere snapshot, except that as soon as you take it, you have a boat anchor attached to that virtual disk. You have a performance drag; as you add more snapshots, you drag performance down further, and when you do a delete it sometimes takes hours or days to commit those snapshots. Has anybody had a snapshot delete process take over 24 hours? Wow, we should print shirts. 24 hours isn't too bad. Everybody has some kind of mechanism to watch for snapshots going over 24 hours in age, and maybe a size-based snapshot alerting system, right? That's kind of old-school best practice. Anyway, you move to vVols and those problems are made simpler, because we can take hardware-based snapshots: any time we take a snapshot it's fast, and any time we restore it's fast.

And if you're doing a lot of snapshots and there's very little change in the data set, chances are your capacity hit is gonna be very minimal. I don't wanna say you can snapshot with reckless abandon, but you don't have to stress too much about snapshots, if that kept any of you up late at night, so watch out for that.

I don't need the mic, I have a mic. So when you think about snapshots, right: with vVols, those disks aren't VMDKs nested in a file system that you actually have to physically commit and write to disk. When you take a VMware-managed snapshot, all of those delta disks are now physical volumes on the FlashArray, which means they dedupe and compress. And when we have to revert that snapshot, we get to take advantage of all the array-level features. So if you have a 100-terabyte snapshot, we're essentially moving metadata around, and we can commit that snapshot in probably minutes. That's really where we drive that efficiency.

But data mobility is my favorite thing, because I love covering cloud, right? What happens when we need to move data around? Today we have a virtual server, a server running on vVols, with a C drive, D drive, E drive, and F drive, and we have all of these snapshots. Let's take advantage of that. Say we have a big data warehousing server, one of those beefy things that's outside the bounds of what virtualization can support, and I want to move it to a physical server; I'm gonna repatriate from virtualization. With vVols it's easy: you can just copy the F drive over to the physical server's F drive, and you immediately have an instant, deduplicated copy of that data set. Since it's a raw volume, you can just mount that data; you don't have to worry about converting it. What if you want a bare-metal server? Same thing. For a P2V or V2P, all you do is provision a blank server, prepopulate the C drive and E drive, take the snapshots that existed over there, and replicate them over. It's that simple. You're probably doing some of these things with your database refreshes already: taking a snapshot, overwriting a volume, and orchestrating that. You can do that all the way up to the public cloud. That's where Cloud Block Store comes in, which we talked about earlier, and there are a few sessions later on about taking those exact same data sets and moving them to the cloud. So vVols are the enabler of that data mobility platform. I've got the broken clicker again.

The most important thing here, and we talked about pitfalls in the session: you don't have to rush and move everything to vVols. Some customers will, some won't. The thing to highlight is to find where vVols make sense. For some customers that's databases and file shares, the business-critical, high-performance applications. I say don't do it for VDI; that's a conversation we can have at the bar later.

The great thing is that when you have a VM that lives on VMFS or NFS and you wanna move it to vVols, it's a storage migration. It's an accelerated copy that's almost instant if it's within a single FlashArray, and it takes the VMFS volume and converts it to physical volumes. And it's not a one-way street if you say, "I went to vVols and it's not working for me. We're having issues, we're not understanding it, we don't like the really cool stuff it's giving me, and I want to become a storage admin again." Let's move it back, right? It's a Storage vMotion back. It's not a one-way street, there's no downtime associated with it, and it's very simple.

So why are we still talking about vVols? Because it's important: VMware is making a huge investment. There are a lot of those challenges, and we're simplifying the teams, right? Whether you have separate storage teams, vSphere admin teams, and network teams depends on where you work, but we're seeing on-premises teams and cloud teams and a big consolidation. But there was a problem with adoption for a really long time. I did a presentation at Tech Field Day at VMware Explore last year where we talked about crossing the chasm: there were always these run-ups of everybody adopting, then something happened and we came back down, then we did it again and came back down, and we're on the second or third evolution of this adoption.

So why now? These were the big three things that really stopped adoption. The first was scale. When we first adopted vVols, they didn't really work at scale: you could do something like 200 VMs and 1,000 volumes, and that didn't work for a lot of our customers.

So we said, OK, we're gonna scale those numbers up. But if we scaled those numbers and weren't stable and didn't have performance, did we actually have scale? We didn't. So we spent a good year just focusing on hardening the VASA service that runs in the arrays, and we had some really good improvements, right? The next challenge was getting started. Customers might have come from other vendors and had to deploy VASA as a VM and manage it, or there were complications, or it was just really complicated. So they had a really bad taste in their mouth and didn't want to continue. Those were some of the challenges.

We also had certificate management. Certificate management with vVols is a pain if you need multiple vCenters in a non-linked environment talking to a single array, because you have to replace them all with signed certificates and replace them every year. vSphere 8.0 Update 1 solves those challenges. So again, as VMware matures, everybody else is going to take advantage of those features. Then there was the performance of vVols with busy arrays, and failover, right? Performance, snapshots, downtime, SPBM: for all of those, people said, "vVols work, but they don't have everything I need. VMFS kind of works for me. RDMs work for me." Those are the things that were actually causing blockages.

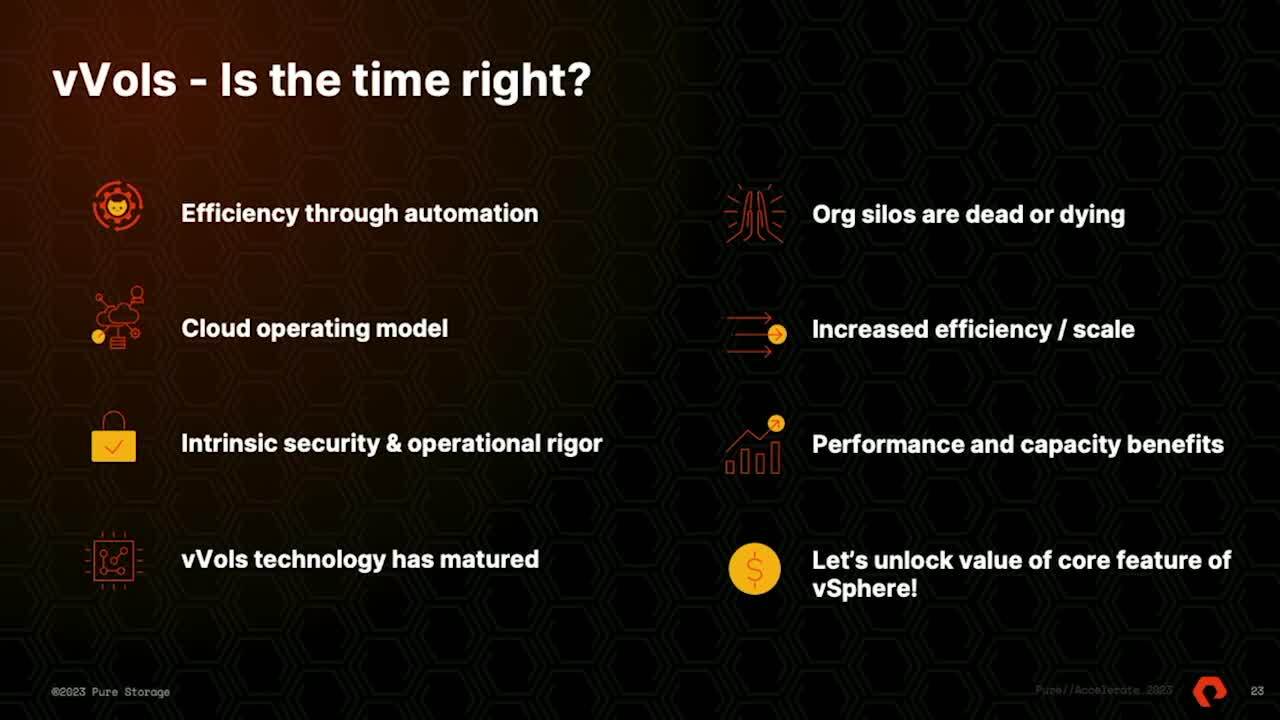

So, why now? All right, hold on to the clicker, don't lose it. I'm gonna try the keyboard here and see if it's faster. So why are vVols a popular topic today? This year I've done several talks titled "Is 2023 the Year of vVols?" I used to do a lot of VDI, and I remember how we always used to say it's the year of the desktop, so this is a tongue-in-cheek play on that theme. I do four or five demos every day with different customers and prospects, and what I'm finding is that the built-in automation that comes with SPBM provides operational efficiency. Lean organizations and lean IT departments typically crave automation, and it's a capability that really enables efficient behavior. Maybe teams can focus on innovation, or on projects they didn't have cycles for, because they're not doing tedious work like, oh, I gotta provision some LUNs.

I gotta figure out what size they are, I gotta call the vGeeks and tell them what their LUN names are. Things like that. That efficiency through automation is the foundation for a cloud operating model. If you run on-prem infrastructure, you run a traditional data center: it's got some walls, some air conditioning, some power, a bunch of racks. So theoretically, we could be replaced by AWS or Azure or Alibaba or Tencent or some other public cloud provider. Unless we start to operate with a cloud mindset, a service mindset, and become transactional, the business has a challenge. They need us to maneuver quickly to provide them services, and to do it in a way that doesn't tax our resources but still provides rigor and stability. One of the key elements in tech right now is ransomware and malware defense, and I think the operational rigor that comes with SPBM, with policy-based placement of storage and assignment of services, lends itself well to a foundation of operational rigor, which is key. If you focus on security at scale, you have to do everything consistently and by policy. Policy drift is a great opportunity for people to do malicious things, right? The technology has matured. The problems David described are problems that I think everybody in the industry faced with vVols. Pure is one of the leading platforms for vVols adoption because we partner with VMware and contribute to the VASA standard. We're active code contributors, but we're also testers of the latest services and the latest virtualization constructs.

We do massive amounts of testing in our labs, but we also partner with VMware in their labs, with Pure equipment, to validate some of the new capabilities. So we've really modernized the VASA capability. What we shipped initially in 2017 is a lot different from what we support today. We've really matured; our jokes are still really immature.

That's just my team. Org silos: David mentioned it. It's getting rarer and rarer to see a giant wall between the storage team and the vAdmin team or the app team; we're all having to come together as we become more and more efficiency-focused.

From a performance perspective, when we talk to large customers that have done a wholesale migration of a workload from VMFS to vVols, they do see a slight increase in performance, and they do see reclamation of capacity. Your mileage may vary, but we sometimes see 10% better performance and 10% better capacity utilization just from the move to vVols. Cody Hosterman, who's our geekiest peer, has blog articles that describe why the underlying efficiencies in capacity consumption and performance exist. He's got PhD-level blog articles on this topic, so if you're having trouble sleeping some night, pull up one of those posts; they're fascinating, and they go into a great level of detail.

Finally, VMware started developing vVols around 2012 or 2013, and they became available in 2015. You know how VMware raises prices sometimes? vSphere prices go up a little, what, 1% a year, is that right? I don't know. I used to work for VMware.

So did David. We're paying for innovation from VMware: we pay for new features and capabilities, and the SPBM policy engine that's part of vSphere and vCenter is something we're paying for. So I believe we should unlock the value of our investment in VMware technology. If you've got a capable storage platform, why wouldn't you take advantage of that policy engine to do your bidding? To me, that's maximizing your investment. After hearing everything we talked about, it's like, why would we use VMFS? Right. And there are still use cases where VMFS is totally appropriate. I've heard rumors, though I don't know them to be true.

Maybe David, you've heard the same ones: that there's more data services engineering effort going into vVols futures than VMFS futures. I don't know if that means we'll see the end days of VMFS in our lifetime, in our careers. Who knows. I'm hoping ChatGPT will get me out of this, or at least make my job easier.

You'll just throw it all in together. I like it. Yeah. And I kind of talked about this: if we actually look at this big gap, it was from about 2020 to 2022, and 2021 is where there was a lot of focus.

There weren't really features, right? There was the VASA spec, but going from VASA 1.1 to 1.2 added no features; it was all about scale, performance, and stability. Then when we brought up 2.0, there was more scale and more SPBM benefits. Then we're at 2.5, and I think 3, and now we're at 5; they don't go in numerical order. We were at 1.0 in 2017 and we're at 5.0 today, which doesn't really scale well. But the thing to think about is that there's a lot of really cool stuff coming, like stretched vVols support. It's not our fault, guys; we want to ship ActiveCluster with vVols, and if we could, we would ship it tomorrow. Unfortunately, it's a joint development effort with VMware: they're working with us, and we're working with them as a design partner. So stretched vVols support with ActiveCluster will come, ActiveDR support will come, more performance, more scale, right? We're bringing it to our fleet, we're seeing more customer adoption, and we want to make it better, especially around NVMe over Fabrics and especially TCP support. We're going to see a lot more adoption there, because we see big drivers toward NVMe over TCP: you don't need the whole infrastructure configuration to adopt it, like you traditionally do with RoCE or Fibre Channel. So we have more engineering development on this than ever.

It's really an exciting time to be working on these technologies. There's one slide I wanted to talk to, but I guess we'll talk about the outcomes. OK. Yeah, just some highlights. We talk to customers all the time who maybe have a small, isolated use case: maybe it's just a lab testing exercise, maybe it's a SQL database opportunity to do something with database replication or data protection. But we've also talked to customers like a big US manufacturer of knives, whose bottlenecks were really human bottlenecks: they were inefficient in the way they did provisioning operations and worked as a team, and vVols have made that better. Waiting for Ricky to take his photo. Silver Star Brands, a big retail goods company, has seen performance and efficiency gains. They're not in the business of IT, and they don't want to be in the business of calculating LUN capacity, figuring out block sizes, or figuring out which block sizes are common and should live in the same datastores for performance's sake. So they've seen benefit from the inherent automation and efficiency of vVols. Demos. Yeah, it looks like somebody deleted one of our references; we had a good one. All right. And one other thing I wanted to highlight,

I thought we had that slide, but we originally started this with something like 70 slides, then marketing took out 40 of them, then we had to put some back in, so there's now a graveyard of slides. We talked about performance and scale, and there have been some really amazing features added, definitely in the last year, a lot of them tied directly to vVols. We talked about all the benefits: performance, better snapshots, downtime, and so on. But one of the largest features our customers were asking for was quotas and multiple datastore support. Today, if you're on Purity 6.4.4, 6.3, or below, a FlashArray was a single container. When we created that container, it was 9 petabytes, because that's the most VMware supported, so we said, why not make it 9 petabytes? But some organizations said, "We still want to treat it as silos. We don't wanna mix in dev/test; we want to set a size of up to 10 terabytes, since I don't have 9 petabytes of storage behind the scenes." So through some of those efforts in the spec, and then in 6.4, we now have multiple container support. What we do is create a pod and assign a pod quota to it; you connect the protocol endpoint to it and tie that together. Those are a lot of the really great features we brought in. A lot of the big engineering efforts, some of which Tristan will showcase, are around our vSphere plugin and how we can take everything VMware is doing and step it up, I don't know, 20 notches, turn it up to 11. We're providing insights, replication, point-in-time revert, and snapshots: all of this really awesome visibility that you normally just don't get. Cool. All right. So, do we have a question? Oh yeah, Ivan. Ivan, is that right?
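The multiple-container setup described a little earlier (a pod with a quota, connected to a protocol endpoint) boils down to a capacity limit per container, in place of one 9 PB container for everything. A minimal Python sketch, assuming the quota is enforced against provisioned size; the talk doesn't say exactly what a pod quota counts, and `StorageContainer` and `QuotaExceeded` are hypothetical names.

```python
from dataclasses import dataclass

TB = 10**12


class QuotaExceeded(Exception):
    """Raised when provisioning would push a container past its quota."""


@dataclass
class StorageContainer:
    # Hypothetical model of a vVol storage container backed by a pod with a quota.
    name: str
    quota_bytes: int
    provisioned_bytes: int = 0

    def provision(self, size_bytes: int) -> None:
        # Assumption: the quota is checked against provisioned size; a real pod quota may count consumed space.
        if self.provisioned_bytes + size_bytes > self.quota_bytes:
            remaining = self.quota_bytes - self.provisioned_bytes
            raise QuotaExceeded(
                f"{self.name}: {size_bytes / TB:.1f} TB requested, {remaining / TB:.1f} TB left"
            )
        self.provisioned_bytes += size_bytes


dev_test = StorageContainer("dev-test", quota_bytes=10 * TB)
dev_test.provision(4 * TB)
dev_test.provision(5 * TB)
try:
    dev_test.provision(2 * TB)
except QuotaExceeded as err:
    print(err)  # dev-test: 2.0 TB requested, 1.0 TB left
```

Keeping dev/test in its own capped container is the kind of silo the customers in the talk asked for.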

I... the question I have is about the... are you going to be more...? Mhm. 17.0. Mhm. Right. And what we both here... Mhm. Don't tell me... to us. No. Yeah, I've got another name, and this is... yeah, and that's kind of... yeah. So the question Isaac had was about the protocol endpoint and VASA. That's one of those things that, early on, left a really foul taste in people's mouths.

And I heard this question yesterday: what happens when vCenter goes down? That was one thing everybody scared you with, right? vCenter is down, you can't do anything with vVols. Well, if vCenter is down, can you manage your virtual machines? No, right? And if you think about where we are on Purity, VASA is a native service on our array that runs side by side with it. I hate to ask this question, but think about the durability and availability of arrays: we're now up to seven nines of durability. If we polled this room, there might be two people who've had downtime to some degree, and the rest are zeros; our seven nines are skewed because they're based on the customers, not necessarily on our arrays. So when you think about VASA, VASA is always going to be highly available, and something outside of it doesn't impact the VMs. If VASA is down on the array, the VMs run, because the data path is separate from the control path; everything still runs, you just can't change anything.

If your vCenter is down, you can still modify everything at the host level; you just can't modify storage policies. So VASA is important, but it's not as critical, or as much of a single point of failure, as some people think. Understanding the architecture of these features is one of the things we tie into here, and we've got a really good white paper for the VMware platform that walks through every possible failure scenario. We'll talk about that afterwards; you can find us at the booths. All right. So, I'm allergic to recorded demos.

I like to do live demos; sometimes I fail spectacularly and it's very comedic. But I was required to do a recorded demo, so I created this myself. I'm gonna talk through it a little. I may pause it a couple of times, and it's not gonna go on for very long, but I just wanna highlight some of the key benefits. If you're using vVols today, you already realize some of these benefits, but I'll showcase a few things. Having VMFS statistics coming off the Pure array is really powerful. Here's a VMFS volume. I have front-end statistics, which are the statistics in vCenter.

I can go out and look at historic utilization, capacity consumption patterns, things like that. But isn't it great that I can look at VMFS from a Pure perspective and get back real-time numbers for data reduction and performance? Go back for a second, I wanna show you something really cool. Go back in, and it makes it really easy. This is really where that visibility comes in, and we should have done a better demo of this. Traditionally with VMFS, you can see VMFS used, array host written, and array unique space, so you actually knew where things were living. VMFS used is how much data was actually written from VMFS's point of view; array host written is how much data we were writing to the array.

So if you had 100 terabytes of data written at 5 to 1, that top line would be much higher than the middle line. And then unique space is how much we are actually storing. If that top line was ever less than the middle line, you had an UNMAP problem, and that was something you could very easily highlight.

But which of the 150 VMs on that volume has the UNMAP problem? This is a great VMFS view of capacity and performance in real time, but of the 10 or 15 or 20 or 200 VMs resident on this volume, you don't know which one is actually generating the spikes in latency. What can we attribute the spike in latency, or the increase in IOPS, to? Which workload? We'd have to look at front-end statistics in vSphere, and that might help. But getting down to the vVol level of granularity actually gives you specific volume-level statistics.
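The datastore-level rule of thumb above can be written down as a small check. This is a minimal sketch, assuming you already have the three per-datastore numbers (VMFS used, array host written, array unique) in the same unit, and reading the "top line" as VMFS used and the "middle line" as array host written; that reading is our interpretation of the chart. The class and function names are hypothetical, not part of the Pure plugin or any Pure API. Note that it can only tell you *that* a datastore has dead space, not which VM caused it, which is exactly the limitation described above.

```python
from dataclasses import dataclass


@dataclass
class DatastoreCapacity:
    """Capacity figures for one VMFS datastore, all in the same unit (e.g. GB).

    Hypothetical structure for illustration; field names are not a real API."""
    vmfs_used: float           # space VMFS reports as used
    array_host_written: float  # data the ESXi hosts have written to the array volume
    array_unique: float        # space actually stored after data reduction


def data_reduction_ratio(cap: DatastoreCapacity) -> float:
    """Host-written data divided by unique stored data; 5.0 means 5:1."""
    if cap.array_unique <= 0:
        raise ValueError("unique space must be positive to compute a ratio")
    return cap.array_host_written / cap.array_unique


def suspect_unmap_problem(cap: DatastoreCapacity) -> bool:
    """True when the array holds more written data than VMFS says is in use,
    i.e. deleted blocks were apparently never reclaimed with UNMAP."""
    return cap.vmfs_used < cap.array_host_written


if __name__ == "__main__":
    healthy = DatastoreCapacity(vmfs_used=100_000, array_host_written=95_000, array_unique=19_000)
    stale = DatastoreCapacity(vmfs_used=60_000, array_host_written=95_000, array_unique=19_000)
    for name, cap in [("healthy", healthy), ("stale", stale)]:
        ratio = data_reduction_ratio(cap)
        print(name, f"{ratio:.1f}:1", "UNMAP suspected:", suspect_unmap_problem(cap))
```

Both example datastores reduce 5:1; only the second is flagged, because VMFS believes far less space is in use than the hosts have written to the array.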

I'll walk through that. I wanted to show VMFS first and then show the equivalent in vVols terms. So here's a virtual machine, a database server, and it's got lots of disks. When we look at the Pure plugin, we look at capacity, and I think one of the best metrics we highlight in the plugin is that far-right column: data reduction at the disk level. We can actually see which disks are achieving the best data reduction benefit. This is important because sometimes you come in on a Monday morning, look at a system, and the data reduction ratio has changed, maybe not for the better, and you want to know what happened.

You find out that over the weekend there was an application update, or maybe some snapshots were taken as part of an application workflow. This is a good example, because all that purple capacity there is snapshot space, and the blue data here is what has actually been committed as writes for this particular disk.
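The Monday-morning question ("which disk made the ratio drop?") becomes a simple comparison once per-disk numbers exist. The sketch below assumes you have exported two samples of per-disk host-written and unique capacity, for example Friday's and Monday's; `DiskStats`, `reduction_ratio`, and `ratio_drops` are hypothetical names, and the 0.5 threshold is an arbitrary illustrative default.

```python
from typing import NamedTuple


class DiskStats(NamedTuple):
    """Per-virtual-disk capacity sample (hypothetical shape, values in GB)."""
    name: str
    host_written_gb: float  # data the guest has written to this vVol
    unique_gb: float        # data the array actually stores for it


def reduction_ratio(d: DiskStats) -> float:
    """Per-disk data reduction; 4.0 means 4:1."""
    return d.host_written_gb / d.unique_gb


def ratio_drops(friday: list[DiskStats], monday: list[DiskStats],
                min_drop: float = 0.5) -> list[tuple[str, float]]:
    """Disks whose reduction ratio fell by at least min_drop, largest drop first.

    Disks with no unique data (nothing stored yet) are skipped, and disks that
    appear in only one sample are ignored."""
    before = {d.name: reduction_ratio(d) for d in friday if d.unique_gb > 0}
    after = {d.name: reduction_ratio(d) for d in monday if d.unique_gb > 0}
    drops = []
    for name in sorted(before.keys() & after.keys()):
        delta = before[name] - after[name]
        if delta >= min_drop:
            drops.append((name, round(delta, 2)))
    return sorted(drops, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    friday = [DiskStats("Hard disk 1", 400, 100), DiskStats("Hard disk 2", 300, 100)]
    monday = [DiskStats("Hard disk 1", 400, 100), DiskStats("Hard disk 2", 900, 600)]
    print(ratio_drops(friday, monday))
```

With a single datastore-wide ratio you would only see that the overall number fell; per-disk figures point straight at the disk whose reduction went from 3:1 to 1.5:1.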

Having this data coming off the Pure array, real-time, accurate back-end data, available at the vVol level, is huge. You can still look at the entire VM and get the high-level macro view of all the disks associated with it, but peeling back the layers at the disk level is pretty powerful.

Yeah. And one question was: can't VM Analytics do this? If you're not familiar with it, VM Analytics is our physical-to-virtual-to-volume map. But that data gets uploaded from a collector to Pure1, and it takes an hour to process. So it has all this data, but what happens if you're trying to troubleshoot something in real time? With vVols you get this granularity: we now have the exact same metrics I just talked about, from the VMFS level down to a disk.

For example, Anthony is probably somewhere in the back, or I think he left. He has a 20 terabyte VM in our lab that he has four copies of, and when that array fills up, I'm like, "Anthony, really? Another 20 terabyte VM? You're just going to add another 20 terabytes of disks?"
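To put numbers on why a disk's provisioned size says little about what it costs on the array, here is the arithmetic for a hypothetical thin-provisioned disk: 20 TB provisioned, 100 GB written by the guest, and 50 GB unique on the array (decimal units, illustrative figures). `capacity_view` is a made-up helper, and the "provisioned to stored" figure is simply provisioned divided by unique; it is not claimed to match any specific Pure1 or plugin metric.

```python
def capacity_view(provisioned_gb: float, written_gb: float, unique_gb: float) -> dict:
    """Summarize how much of a thin-provisioned disk is really consumed.

    Hypothetical helper for illustration; all inputs in the same unit (GB)."""
    if provisioned_gb <= 0 or unique_gb <= 0:
        raise ValueError("provisioned and unique sizes must be positive")
    return {
        # share of the provisioned size the guest has actually written
        "percent_written": round(100 * written_gb / provisioned_gb, 2),
        # written : stored, i.e. the data reduction ratio
        "data_reduction": round(written_gb / unique_gb, 2),
        # provisioned : stored, which also folds in thin-provisioning savings
        "provisioned_to_stored": round(provisioned_gb / unique_gb, 2),
    }


if __name__ == "__main__":
    print(capacity_view(provisioned_gb=20_000, written_gb=100, unique_gb=50))
```

For this disk only 0.5% of the provisioned size has been written, that data reduces 2:1, and the array stores 1/400th of what was provisioned.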

But when you actually look at the metrics, it's a 20 terabyte disk that has only written 100 gigs of data and is only storing 50 gigs of unique data. So it's like, yeah, you have 40 terabytes of data, but because of all the deduplication it's really only using something like 10 terabytes. That's the visibility we're giving you front and center, and we get the same level of granularity and the same real-time data in the plugin for individual vVols.

Here I'm looking at a volume group. This is the performance associated with all of the disks, but I can break out individual disks, individual volumes, and depending on what each volume is doing, I can look at the performance spikes, the valleys, the correlation, and the time span, and actually derive some insights right here within vSphere.

So what about when things break, or rather, what happens when people break stuff? What happens when Tristan breaks stuff? In the first example, I'm going to show a recover-deleted-disk use case. Is it common that a vSphere admin is going to go out to vSphere and delete a disk like I'm doing right here? Probably not. But if it happened and you had to get that disk back, you can use the plugin to look at your existing vVols and then use the Restore Deleted Disk feature to get that disk back from available snapshots, almost instantly. It's super fast.
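Restoring from "available snapshots" means choosing a point in time. The selection logic behind a choice like that can be sketched as "the newest snapshot taken at or before the requested time". This is an illustration only, assuming the snapshot timestamps have already been listed; `pick_snapshot` is a hypothetical helper, not a plugin or array function.

```python
from bisect import bisect_right
from datetime import datetime


def pick_snapshot(snapshot_times: list[datetime], restore_to: datetime) -> datetime:
    """Return the newest snapshot taken at or before restore_to.

    Raises LookupError when every snapshot is newer than the requested time."""
    ordered = sorted(snapshot_times)
    idx = bisect_right(ordered, restore_to)  # first index strictly after restore_to
    if idx == 0:
        raise LookupError("no snapshot exists at or before the requested time")
    return ordered[idx - 1]


if __name__ == "__main__":
    snaps = [datetime(2023, 6, 12), datetime(2023, 6, 14), datetime(2023, 6, 13)]
    # The disk was deleted mid-afternoon on June 13, so we want the snapshot from before that.
    print(pick_snapshot(snaps, datetime(2023, 6, 13, 15, 30)))
```

Using `bisect_right` means a snapshot taken exactly at the requested time counts as "at or before" and is selected.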

You pick the point in time, and it's back: our deleted disk is recovered. We have the same capability at the VM level. So if you have a VM on vVols and that VM is deleted, maybe because a script ran awry and deleted a VM it wasn't supposed to, we can get that destroyed virtual machine back. "But the admin said we could delete it!"

"It had been powered off for three days!" Exactly. Here you see I'm missing one of my Accelerate app servers, 02. I go out to the destroyed volumes bucket, pick a compute resource as a target, and it comes back really quickly. The VM is back, and then we drag it back into the Accelerate application folder. Super fast.

A very similar use case is what we call importing data, which means taking a data set or a disk from another virtual machine and attaching it opportunistically. It's very common in application dev environments to want to take a data set from a production server and attach it to a non-production server to do some testing. That's what I'm doing here, again using the plugin's Import Disk feature to attach a disk from a neighbor VM, so that I can do some testing, or maybe swap out a database or restore from a SQL log, something like that.
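An imported disk is a back-end clone, not a multi-attach of the same disk. A toy model makes that difference concrete. This is not how Purity is implemented; it is a minimal sketch, assuming a content-addressed block store in which a clone copies only the block map. The new volume initially shares every block with its source (no extra space), diverges independently on write, and identical blocks from any volume are stored once. `ToyArray` and its methods are hypothetical.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class ToyArray:
    """Toy model only: content-addressed blocks shared by any number of volumes.

    Unreferenced blocks are never freed here; a real array would garbage-collect."""
    blocks: dict[str, bytes] = field(default_factory=dict)       # digest -> block data
    volumes: dict[str, list[str]] = field(default_factory=dict)  # volume -> block digests

    def write(self, volume: str, index: int, data: bytes) -> None:
        digest = hashlib.sha256(data).hexdigest()
        self.blocks.setdefault(digest, data)  # identical data is stored only once
        block_map = self.volumes.setdefault(volume, [])
        if index >= len(block_map):
            block_map.extend([""] * (index + 1 - len(block_map)))  # "" marks never-written
        block_map[index] = digest  # only this volume's map changes

    def clone(self, source: str, target: str) -> None:
        """Copy the block map, not the data: instant and no extra block space."""
        self.volumes[target] = list(self.volumes[source])

    def read(self, volume: str, index: int) -> bytes:
        return self.blocks[self.volumes[volume][index]]


if __name__ == "__main__":
    arr = ToyArray()
    arr.write("prod-db", 0, b"customer rows")
    arr.clone("prod-db", "test-db")         # the "import": a clone, not a shared attach
    arr.write("test-db", 0, b"test edits")  # testing on the clone...
    print(arr.read("prod-db", 0))           # ...leaves production untouched
```

A multi-attach would hand both VMs the same block map, so a test write would land on production data; the clone gives the test VM its own map while still sharing unchanged blocks.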

One thing to think about here: we're not just taking the disk that's attached to that VM and doing a multi-attach. We're actually doing a clone operation on the back end, which is instant, creating a new copy of that data and attaching it to this VM. So it is its own unique copy, and it's globally deduplicated.

Yeah, the one thing I would also say is that everything we do in the plugin, all this click, click, next stuff I'm showing here, can be automated. If you want to use PowerShell, a RESTful method, or maybe Python scripts, you can automate these workflows. Is anybody using vRealize Automation or Orchestrator, now Aria? Anybody staying at the Aria this week?
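As a sketch of what scripting the Import Disk clicks might look like, the workflow below gives each test VM its own clone of one production disk. Everything here is HYPOTHETICAL: `VvolClient`, `clone_disk`, and `attach_disk` are placeholder names, not Pure Storage or VMware APIs, and `FakeClient` exists only so the workflow can be exercised without an array. A real script would implement the interface on top of whichever tooling you use (PowerShell, REST, or a Python SDK).

```python
from typing import Protocol


class VvolClient(Protocol):
    """HYPOTHETICAL interface; not a Pure Storage or VMware API."""

    def clone_disk(self, source_vm: str, disk: str) -> str:
        """Clone a virtual disk on the array and return the new volume's name."""
        ...

    def attach_disk(self, target_vm: str, volume: str) -> None:
        """Attach an existing volume to a VM as a new virtual disk."""
        ...


def refresh_test_vms(client: VvolClient, source_vm: str, disk: str,
                     targets: list[str]) -> dict[str, str]:
    """Scripted 'Import Disk': give every target VM its own clone of one disk."""
    attached: dict[str, str] = {}
    for vm in targets:
        volume = client.clone_disk(source_vm, disk)  # fresh clone per target, no multi-attach
        client.attach_disk(vm, volume)
        attached[vm] = volume
    return attached


class FakeClient:
    """In-memory stand-in that records what would have been cloned and attached."""

    def __init__(self) -> None:
        self.clone_count = 0
        self.disks: dict[str, list[str]] = {}

    def clone_disk(self, source_vm: str, disk: str) -> str:
        self.clone_count += 1
        return f"{source_vm}-{disk}-clone{self.clone_count}"

    def attach_disk(self, target_vm: str, volume: str) -> None:
        self.disks.setdefault(target_vm, []).append(volume)


if __name__ == "__main__":
    fake = FakeClient()
    print(refresh_test_vms(fake, "sql-prod", "disk2", ["sql-dev1", "sql-dev2"]))
```

Keeping the workflow behind a small interface lets you test the orchestration logic with a fake before pointing it at real infrastructure.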

So did they name the product line after the hotel? Explore is there? OK, there you go. When we make a clone of that disk and attach it, it happens in seconds. It's very, very fast. This next one is a bit of a longer demo, but we're getting close to the end here.

What we're going to do is use the built-in orchestration of replicated vVols to move four application servers from a source site to a target site. So it's kind of like SRM Lite. I don't think I'm supposed to call it that, but it's the ability to take running workloads and either migrate them or test-migrate them to see if they're viable at a destination site. Maybe you're doing some BC/DR preparedness drills, and you want to see if you can move a fleet of virtual machines to a target site, bring them up on a test network, and test. Our plugin actually supports that.

What I'm going to do here is verify that my Accelerate app servers are part of a replication policy. I've got an SPBM policy, and everybody's compliant. I open the plugin and go to the Replication Manager feature. Look, I found them: there are my Accelerate apps.

I've got four of them. It looks like I had a sync once operation. A sync once is basically a check to make sure everything is in sync as far as metadata. We'll do a quick Resorts World sync once operation here, just a final sync before we actually test-migrate.

But actually, we're not going to do a test; we're going to do a full migration. I've sped this up a little bit, but syncing your source and destination sites is a very rapid process. Now I'm going to click Run, but to the left there you see Test and Clean Up, and to the right you see a button called Reprotect.

We'll take a look at that in a second. I'm picking my target site; I could have multiple targets to choose from. I pick my point-in-time snapshot: I had scheduled snapshots there that were replicated, which I could choose from. I pick my compute resource target and my network. It could be a test network.

Obviously, during a test we don't want those copies on the production network. I can choose during a run whether to power on the machines or not. I just click Run, and it's now executing that orderly list of tasks. I've sped it up a little bit. I'm logging on to my other vCenter to watch what's happening. You see that on my source site, if it is viable, we power down those source VMs. I go out to my target site, and you'll see my four Accelerate app servers show up there shortly. It's still processing, doing some stuff here. We'll give it a second.
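The key property of that "orderly list of tasks" is that each step runs only if everything before it succeeded, so the source VMs are destroyed last, after the target copies are confirmed viable. The sketch below shows that stop-on-first-failure pattern. The step names paraphrase the demo and are hypothetical; the plugin's real task list and ordering may differ, and `make_step` merely simulates work by recording that a step ran.

```python
from typing import Callable

Step = tuple[str, Callable[[], bool]]  # (step name, action returning True on success)


def run_plan(steps: list[Step]) -> list[str]:
    """Run steps strictly in order and stop at the first failure."""
    completed: list[str] = []
    for name, action in steps:
        if not action():
            # Later steps (e.g. destroying the source VMs) never run.
            raise RuntimeError(f"step {name!r} failed after {completed}")
        completed.append(name)
    return completed


def make_step(name: str, log: list[str], succeeds: bool = True) -> Step:
    """Build a simulated step that records that it ran (stand-in for real work)."""
    def action() -> bool:
        log.append(name)
        return succeeds
    return name, action


if __name__ == "__main__":
    ran: list[str] = []
    # Hypothetical step names paraphrasing the demo.
    plan = [make_step(n, ran) for n in (
        "sync once",
        "power off source VMs",
        "bring up replicated vVols at target",
        "register and power on target VMs",
        "wait for VMware Tools check-in",
        "destroy source VMs",
    )]
    print(run_plan(plan))
```

Raising an error instead of skipping ahead is the conservative choice: if the VMware Tools check never succeeds, nothing at the source gets destroyed.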

It's running the sync once you told it to do and taking the snapshot. There we go. It's moving down the task list, and in a moment, wait for it, it's very exciting: there they are, four systems ready to go. We also answer a question for you. Remember the old pop-up that asks, "Did you copy or move this VM?" We answer that question for you through automation. So we've just powered on the VMs at the target site. Once VMware Tools checks in, we know the VMs are viable, and then we destroy the source VMs if the source site is viable. So you can see that our source app server folder is empty, and the app servers that have been shipped have been fully migrated, powered on, and are ready to go.

Failover is great, but failover sucks if you can't fail back. So we've got a built-in workflow that lets you reprotect the workload. If you have replication going in the other direction, with your target replicating to what was once the source, we let you pick an SPBM policy and a Pure protection group to get things protected in the other direction, as long as the source site is viable. The reason that's important is that eventually you might want to fail back, right? You might want to take that workload and put it back at your production site. Or if you don't care about that, just forget about the Reprotect button: you moved your workload. This is a perfectly good tool within the plugin for migration.

Now, I'm a big fan of SRM, a big, big fan, and I don't think Replication Manager in the plugin replaces those platforms and services, because they are very robust. They can re-IP systems and make all kinds of changes. But if you need a lightweight tool to move some workload, or you want to verify that some workload is viable, this does the job. So, are you more interested in vVols? Ready to get started?

We're always here to help, and we'll be at the booth.

VMware virtual volumes (vVols) are intended to simplify storage management for virtual environments. vVols provide flexible, granular, storage management on the VM and/or volume level. This results in optimized resource utilization and predictable, policy-based orchestration. Attend this fun and demo-rich session on the why, the why-not, and the how of vVols adoption.
