37:21 Webinar

Relieve the Pain of Unified Storage Management with FlashArray File Services

This session will walk you through the main architectural tenets of the first platform to give blocks and files equal billing.

This webinar first aired on June 14, 2023


All right, I think we'll get started. The topic for today's session is "Relieve the Pain of Unified Storage Management with FlashArray File Services." My name is Manisha Gaal. I'm a senior director of product management, responsible for file services,

and co-presenting with me is Sammy, the director of engineering, who is also responsible for FlashArray File Services. Before we get into the meat of the presentation: unified storage has been around for a very long time, and the reason is that it's very common to find customers with both block and file use cases in the same data centre.

So unified promises to deliver value through two mechanisms. First, it lets you consolidate your block and file workloads onto a single piece of infrastructure, or a smaller number of them, which increases utilisation and gives you some capex benefits. More importantly, because you are using the same architecture for both file and

block, you get opex savings from common procedures: you don't need specialised teams for two separate pieces of infrastructure. Typically we see customers taking one of two approaches. The first is a specialised piece of infrastructure for block and a separate one for file, which means they're not doing unified at all.

The second is what we call a single-use architecture: an architecture originally built for one of the two protocols. Either it started as a file architecture and block was developed and bolted on later, with most of the management left at the file-system level,

or vice versa: it started as a block architecture and the file protocols were bolted on top to give you a so-called unified solution. Now let's look at what benefits each of these gives you. First, when you're running separate,

dedicated architectures for your block workloads and your file workloads, you're obviously not doing unified, so you get neither benefit: no capex savings and no opex savings. With single-use architectures, on the surface it looks like you get the capex savings, because they do let you consolidate

workloads onto a single infrastructure. But they make the protocol that was bolted on later harder to manage, so in a sense they increase your opex instead of saving you anything. And because opex is the dominant cost over the life of a piece of infrastructure,

these solutions might actually be worse than separate, dedicated architectures, which may explain why so many customers continue to run separate infrastructure for each. I mentioned the compromises introduced when you take one architecture and bolt a second set of protocols on top of it;

let's look at what some of those compromises are. The first is a mismatch of granularity. Sticking with the example of a file architecture with block bolted on: the vendor introduced block protocols on top of an architecture designed for files, where the file system is the predominant unit of management.

Most data management happens at file-system granularity, so the approach in this case is: here are the block protocols, but you're still managing groups of LUNs, not individual LUNs. That mismatch of granularity leads to additional complexity and a loss of efficiency.

We'll get into that a little later in the presentation. The next compromise is that these architectures evolved over time, so management is distributed across many levels: some at the disk level, some at the disk-group level, some at the storage-pool level, then the file system, and so forth. It gets very complicated.

We call it an asymmetric management model, and you'll see that we've taken a very different approach. Next, because these are legacy architectures, they carry many limitations from the original stack. Sticking with the example of architectures built for files, the file system may have a size limitation.

These limitations carry over to the other protocol because the protocols are layered on top of each other, and they force a lot of completely unnecessary data migrations that consume space and add overhead. The same is true when you refresh the underlying infrastructure: with a forklift upgrade, not only are you spending time migrating data,

but there are licensing and support costs involved as well. So unified is much more than a multi-protocol approach; getting both benefits of unified is a much higher bar. When we started our unified journey, about three to four years ago, there were some key considerations we took into account.

The first, which is true for everything we do at Pure, is user-centric design: clearly identifying who the user of a feature or product is going to be, and developing a deep understanding of the problems we are solving. With unified, for example, most customers are actually used to the

pain associated with it, so it doesn't surface in the very first conversation you have with them; you have to dig a lot deeper. The second is a focus on use cases: shares and VMs for now, and in the future containers and objects. One other

consideration for us was coverage versus complexity. We want to keep the common case very streamlined and simple; that's how we reduce the overall complexity of the solution, as opposed to trying to cover many corner cases and thereby making the overall model much more complicated.

Finally, a future-proof design: not just solving for today, but also looking at how the solution will evolve with additional performance scale and capacity scale, and at adjoining use cases. Files have a rich ecosystem of adjoining use cases, so we designed with all of those in mind.

This boils down to four key decisions that we believe have a profound impact on the experience customers get when doing unified with FlashArray File Services. The first is a non-layered approach. We decided to embed the file system, and the file protocols, as a first-class citizen that sits beside the block protocols,

not on top of them. None of the management models are shared between the two, and this has a profound implication for the efficiency of the overall solution and for the native experience you get with FlashArray. When you're managing block, you get a block-native experience. When you're managing files,

it's shares and exports, and when you're running VMs, whether over block or file, you get a VM-granular approach. The second is not to limit file-system size: file systems are unlimited in size. A key observation we made is that competing approaches have this limitation, and it causes a lot of complexity.

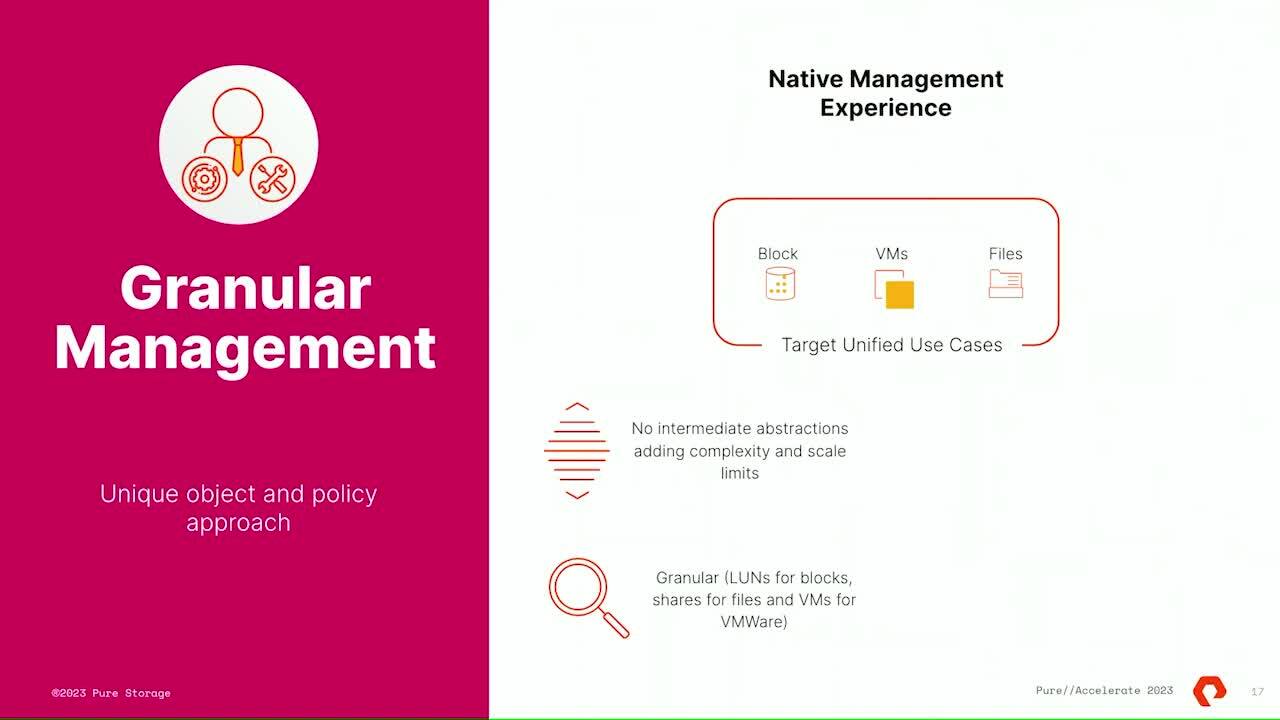

We will go into some of those. The third is a granular data management model: when you're managing LUNs, it's LUN-granular; when you're managing general shares and exports, it's share-granular; and when you're managing VMs, it's VM-granular. And finally, simplicity above all: anything we can automate and offload

from the user, whether a decision or a manual task, we want to do, and we'll give you some examples of that as well. This brings us to FlashArray, the first truly unified block and file platform, built on a strong foundation of simplicity throughout the

data life cycle; optimised for flash all the way from the DFMs to the Purity operating system; and a future-proof architecture that, with Evergreen and non-disruptive upgrades for hardware and software, lets you upgrade your infrastructure and always keep it current. The last piece is Pure1, our management platform.

All of these apply equally to file and to block, and built on top of them are file protocols that are natively integrated as first-class citizens and simple to manage. We'll go into the details of those aspects now. Next, we'll take those four key decisions and walk you through how they simplify life for a

user and what implications they have when you actually manage the solution, starting with the first: a non-layered solution, as opposed to layering files on top of existing block constructs. With a traditional multi-protocol approach, you would have one of the two architectures I described. Say you have an architecture for files.

It would have management functionality built at various levels, because it evolved over time. When it came time to add protocols, they were bolted on as a protocol stack, but the management was left at the

original constructs, which in this example would mostly mean file-system granularity. The reverse is true for architectures that started as block architectures and then had a file protocol bolted on, in a VM, a container, or something similar. This approach does have advantages.

Unfortunately, the advantages are for the vendor bringing the solution to market: lower implementation cost and faster time to market, because you're not reinventing all the functionality already built at the lower level. But it brings a lot of pain for the customer.

The experience is non-native: you're managing groups of LUNs or groups of VMs instead of individual VMs and individual LUNs. There is also a loss of efficiency, and it brings a lot of scale limitations. Compared with this, in our approach, what we've tried to do is

build the file protocols directly on top of the global storage pool, which has some significant advantages. First, just as on the block side, there are no physical constructs for you to manage: you're not managing disks or RAID groups. The same is true for files; you directly manage a file system.

And of course you can do unified, running both block and file on the same platform. This approach requires a significantly higher level of investment, because all the data management functions need to be built at the file-system level, and it results in a slower time to market. But it gives customers a much, much better experience.

It's a more native experience: you manage at share granularity, so you can, for example, take snapshots and clones per share, or even per VM, even when you're running VMs over NFS. There is an efficiency angle: you're not wasting space. And there are no artificial limits.

Those are the advantages of a non-layered approach. Now I'm going to turn it over to Sammy. He's the guy in the trenches who has been on this journey for the last four or five years building the file stack, so he'll cover the next few areas.

Thank you, Manish.

Hi, I'm Sammy. Can you hear me? All right. I'm the engineering director for FlashArray File Services. Manish started off with the key decisions we made while building this product and touched on the non-layered approach.

I'm going to talk about a few more of them, starting with unlimited file-system scale. Where's the clicker? Here. All right. I still distinctly remember walking into a conference room, coffee in hand, to design file-system size. At the time,

I was thinking: file-system size is a very well-known concept; by the time I finish this coffee, we'll have nailed it down and moved on to harder problems. We'll see what happened. Let's start with how a file-system size limit plays out, using an example use case

on a legacy vendor system. Let's assume we have, say, 500 terabytes of raw storage, and we want to run this use case on it.

As a first step on the legacy system, you probably create a file system of, say, 100 terabytes, or maybe a bunch of file systems, and start placing VMs on them. Now let's ask a couple of questions. First, for this use case, what does the file system buy you?

How does it help? More specifically, how does this 100-terabyte file-system size help this use case? We all know VMs will grow, and at some point we hit the file-system size limit. What options do we have then?

What options does the storage admin have? Maybe pick two or three VMs and move them to a different file system. Or, if no other file system has space, create a new one. Maybe VM one and VM two have to be snapshotted together.

Or these two VMs must coexist for other reasons, so maybe we cannot move them. There are various decisions the storage admin has to make, and the game of moving VMs begins. We call it the game of Tetris. Now let's move to FA File.

For a moment, let's get rid of this 100-terabyte limit and the file-system concept. What does that buy us? Now the VMs can grow all the way up to the 500 terabytes of raw storage.

You are limited by only two things: the raw storage capacity, or a limit you choose to put on a VM, soft or hard, by setting a quota policy on it. So no balancing is required, and storage admins need not worry about moving data around because of an artificial

limit. As Manish mentioned earlier, layered architectures impose this kind of artificial restriction, which is unnecessary and doesn't help your use case at all. Of course, it didn't take one coffee to solve this. It took one coffee to get here.
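To make the Tetris problem concrete, here is a toy Python simulation. It is a sketch under stated assumptions, not how FlashArray or any legacy array works internally: it reuses the 500 TB pool and 100 TB file systems from the example, models a file-system cap as an optional number (`None` meaning no cap), and treats a per-VM quota policy as the only other limit. `FileSystem` and `grow_vm` are hypothetical names invented for this illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class FileSystem:
    name: str
    cap_tb: Optional[float]  # None = no file-system size limit
    vms: Dict[str, float] = field(default_factory=dict)  # VM name -> size in TB

    def used_tb(self) -> float:
        return sum(self.vms.values())

    def free_tb(self) -> float:
        if self.cap_tb is None:
            return float("inf")
        return self.cap_tb - self.used_tb()


def grow_vm(filesystems: List[FileSystem], vm: str, delta_tb: float,
            pool_tb: float, quotas: Optional[Dict[str, float]] = None) -> int:
    """Grow `vm` by `delta_tb` TB and return how many VM moves that forced."""
    if sum(fs.used_tb() for fs in filesystems) + delta_tb > pool_tb:
        raise RuntimeError("the storage pool itself is full")
    home = next(fs for fs in filesystems if vm in fs.vms)
    new_size = home.vms[vm] + delta_tb
    if quotas and vm in quotas and new_size > quotas[vm]:
        raise RuntimeError(f"{vm} would exceed its quota policy")
    if home.free_tb() >= delta_tb:
        home.vms[vm] = new_size  # fits where it is: no admin action needed
        return 0
    if home.cap_tb is not None and new_size > home.cap_tb:
        raise RuntimeError(f"{vm} no longer fits in any single file system")
    # The pool has room but this file system's cap does not: the "Tetris" move.
    target = next((fs for fs in filesystems
                   if fs is not home and fs.free_tb() >= new_size), None)
    if target is None:  # nowhere to go, so create another capped file system
        target = FileSystem(f"fs{len(filesystems) + 1}", home.cap_tb)
        filesystems.append(target)
    del home.vms[vm]
    target.vms[vm] = new_size
    return 1


# Legacy layout: two 100 TB file systems carved out of a 500 TB pool.
legacy = [FileSystem("fs1", 100, {"vm1": 60, "vm2": 35}),
          FileSystem("fs2", 100, {"vm3": 90})]
print(grow_vm(legacy, "vm1", 10, pool_tb=500))  # 1: vm1 had to be moved

# No file-system cap: only the pool and an optional per-VM quota apply.
flat = [FileSystem("fs1", None, {"vm1": 60, "vm2": 35, "vm3": 90})]
print(grow_vm(flat, "vm1", 10, pool_tb=500, quotas={"vm1": 80}))  # 0
```

In the capped layout, a 10 TB growth forces a new file system and a VM move even though the pool still has over 300 TB free; without the cap, the quota policy is the only brake.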

Moving on to the next decision: granular management. At Pure, we spend tonnes and tonnes of time thinking about existing problems and existing solutions, and we solve them in a way where we take on a lot of pain ourselves, so that the end result is simple for our customers and your customers,

of course. You may all be familiar with FlashArray block management, which is even simpler: if you want a LUN, you create a volume, create a host, and connect them. Your LUN is then ready for a database

or whatever application or use case you have. File is a more elaborate protocol, and of course it took two coffees to solve management for file. I'll start with this: in FA File, we don't have an intermediate abstraction.

As Manish mentioned, we don't have a layered architecture, so there are no intermediate abstractions that come with one. If you want to manage a LUN, create what we call a volume and manage that. If you want to manage a share, create a share, which we call a managed directory, and manage that.

If you want to manage a VM, create a VM and manage it directly, even via a plug-in. Basically, management happens at the most granular underlying object, not at intermediate layers. Once again, block is a simpler protocol and file is a more elaborate one, so to manage files and

shares at scale, we introduced the concept of policies, made up of rules, where one policy can be attached to many directories. I have an example on the next slide, and we'll go over it. Once again, no costly, time-consuming data migration is required with FA File. So how do we do this in this example?

There are a few steps. Start by creating a file system. When you create a file system in FlashArray, it automatically creates a root managed directory. We call it a managed directory simply because all the management operations are performed on the directory. The other managed directories are admin-created,

for example via the UI. The next step is to create some subdirectories, say folders A, B, and C. Once again, these are admin-created managed directories. The third step is to create an SMB export policy.

This policy is simply a set of rules for who should be able to access the data and how. Once the SMB export policy exists, attach it to B and C. Now you have shares B and C (you can even name them differently), and the shares are available for clients to access.

If you want to limit the growth of any folder, create a quota policy and attach it to any of the folders A, B, and C: all of them or some of them. Then you have a limit. If you want snapshots, create a snapshot policy.

You can attach it to the root managed directory, so snapshots are taken at the root, or attach it to all the folders with different intervals and rules, so you have snapshots at multiple levels as well. That's the management model.
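The steps above can be sketched as a small data model. This is a hypothetical illustration, not the Purity API or CLI: the class and method names (`Policy`, `ManagedDirectory`, `attach`, `shares`) and the policy rule fields are assumptions. What it tries to capture is that creating a file system also creates a root managed directory, and that a single policy object can be attached to many managed directories.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Policy:
    kind: str                 # e.g. "smb_export", "quota", "snapshot" (illustrative)
    rules: Dict[str, object]


@dataclass
class ManagedDirectory:
    path: str
    policies: List[Policy] = field(default_factory=list)


class FileSystem:
    """Hypothetical model: every managed directory is a unit of management."""

    def __init__(self, name: str):
        self.name = name
        # Creating a file system also creates its root managed directory.
        self.dirs: Dict[str, ManagedDirectory] = {"/": ManagedDirectory("/")}

    def create_managed_directory(self, path: str) -> ManagedDirectory:
        self.dirs[path] = ManagedDirectory(path)
        return self.dirs[path]

    def attach(self, policy: Policy, *paths: str) -> None:
        # The same policy object can be attached to many managed directories.
        for path in paths:
            self.dirs[path].policies.append(policy)

    def policies_of_kind(self, kind: str) -> Dict[str, List[Policy]]:
        return {d.path: [p for p in d.policies if p.kind == kind]
                for d in self.dirs.values()
                if any(p.kind == kind for p in d.policies)}

    def shares(self) -> List[str]:
        # A managed directory becomes a share once an SMB export policy is attached.
        return list(self.policies_of_kind("smb_export"))


fs = FileSystem("fs1")                      # step 1: root managed directory "/"
for path in ("/A", "/B", "/C"):             # step 2: admin-created subdirectories
    fs.create_managed_directory(path)
smb = Policy("smb_export", {"clients": "*", "access": "read-write"})
fs.attach(smb, "/B", "/C")                  # steps 3-4: export policy -> shares B and C
fs.attach(Policy("quota", {"limit_tb": 10}), "/A", "/B", "/C")
fs.attach(Policy("snapshot", {"every_hours": 1, "keep": 24}), "/")
print(fs.shares())                          # ['/B', '/C']
```

Changing the export rules later means editing one policy object, and every directory it is attached to picks up the change without moving any data.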

Moving on, once again, to simplicity: automate wherever possible. We take on the pain of automation so that customers don't need to configure lots of different things. Here I'm going to take a couple of

examples. As I mentioned earlier, file is a more elaborate protocol, and it has a lot of concepts that require some kind of configuration. One of the notorious ones is permissions, as many of you know:

root, administrator, backup operator, local users, remote users, and so on. Legacy vendors built their systems around 20 years ago, and their permission systems evolved over time: when they built it, there was one

permission system, and a few years later the system changed. Traditionally, vendors preserve backward compatibility and the usability of existing data by carrying the legacy forward, building more and more configuration knobs to support every possible combination.

What does this kind of design give us? Once again, the advantage is for the vendor: they can carry the legacy forward, and when a new feature, a new model, or a new behaviour is needed, the engineering team builds an additional knob and ships it,

which is simpler and means faster time to market. However, all the pain is for customers. It's a rigid management model: even before you place the data, you must know how the data will be consumed, set the mode and configuration accordingly, then place the data,

and then access it only in that manner. So you get a rigid management model, numerous knobs, and, once again, increased TCO. At Pure, as I mentioned earlier, we put a tonne of time and investment in up front to make this simpler. Of course, this is

not easy, and it's more time-consuming for us. However, the end result is no knobs, or far fewer, for customers to worry about. In this case, we seamlessly map NFS root and SMB administrator, and we understand SIDs as well as UIDs and GIDs, all internally.

The administrator on the SMB side is seen as NFS root when the data is accessed over NFS, and the reverse is true as well: root is seamlessly translated to administrator. FA File has no permission configuration knob that the customer needs to worry about,

so you can generate the data first and worry later about how you're going to access it. And if you change your mind, or your application changes, you don't need to go back, move data, or retrofit anything. To summarise: a seamless permission model between NFS and SMB.
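One way to picture a knob-free permission model is a single identity store that both protocols consult. The sketch below illustrates that general idea under assumptions; it is not a description of FA File internals. It records each principal once with both its SMB security identifier (SID) and its NFS UID/GID, so the SMB administrator and NFS root resolve to the same identity. `Identity`, `IdentityMap`, and `owner_as_seen_by` are hypothetical names, and the SIDs and IDs are made-up example values (only the convention that RID 500 is the built-in Windows Administrator account is real).

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Identity:
    name: str
    sid: str   # SMB side: Windows security identifier
    uid: int   # NFS side: POSIX user ID
    gid: int   # NFS side: POSIX group ID


class IdentityMap:
    """Hypothetical shared identity store consulted by both SMB and NFS."""

    def __init__(self) -> None:
        self._by_sid: Dict[str, Identity] = {}
        self._by_uid: Dict[int, Identity] = {}

    def add(self, ident: Identity) -> None:
        self._by_sid[ident.sid] = ident
        self._by_uid[ident.uid] = ident

    def from_smb(self, sid: str) -> Identity:
        return self._by_sid[sid]

    def from_nfs(self, uid: int) -> Identity:
        return self._by_uid[uid]


def owner_as_seen_by(ident: Identity, protocol: str) -> str:
    """Render one stored owner in the vocabulary of the accessing protocol."""
    if protocol == "nfs":
        return f"uid={ident.uid} gid={ident.gid}"
    if protocol == "smb":
        return ident.sid
    raise ValueError(f"unknown protocol: {protocol}")


ids = IdentityMap()
ADMIN_SID = "S-1-5-21-1000-2000-3000-500"  # example domain SID; RID 500 = Administrator
ids.add(Identity("Administrator/root", sid=ADMIN_SID, uid=0, gid=0))
ids.add(Identity("alice", sid="S-1-5-21-1000-2000-3000-1104", uid=1104, gid=1104))

# A file written by the SMB administrator shows up as root over NFS, and vice versa.
print(owner_as_seen_by(ids.from_smb(ADMIN_SID), "nfs"))  # uid=0 gid=0
print(owner_as_seen_by(ids.from_nfs(0), "smb"))          # S-1-5-21-1000-2000-3000-500
```

Because ownership is stored once and only rendered per protocol at access time, there is no "security style" to choose before placing the data.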

The next example is ActiveDR. While building ActiveDR for file, we had a couple of design choices. One was the approach behind basically any replication technology, whose minimum requirement is an existing snapshot technology.

Once you have snapshots, you set up a snapshot schedule that takes snapshots according to that schedule, and a replication schedule that takes those snapshots and moves them to the other side, and then you configure the target. If you want to reverse the replication, you perform another set of steps on the array

to do the reverse replication; if you want to do a fire drill, you perform yet more steps. That design choice was available to us at the time as well. If we had picked it, we would simply have been carrying the legacy forward. We would have reused many of the existing

pieces of architecture and could have brought it to market much sooner. However, there would be numerous knobs and exactly the same disadvantages and pain points for customers. Instead, this is what we built and delivered. If you want to

replicate a file system, all you need is a replica link between source and target: move the file system into a pod, and the file system gets replicated. If you want to replicate another file system, just move that file system into the pod. That's all.

If you want to reverse the direction of replication, all you need to do is demote the source; the target should be promoted, and you can keep the target promoted all the time as well. So to reverse the replication, all you need to do is demote the pod. If you want to run a fire drill while the source continues to replicate, the source stays promoted.

It continues to replicate; all you need to do is promote the target. On the target, you can then run the fire drill without affecting the source or the replication, and the source continues to replicate. Once the fire drill is over, all you need to do is demote the target.

All the fire-drill data is automatically dropped. Of course, this is a complex piece of technology, with high implementation effort and a longer time to market. However, it's future-proof, the same advantage I mentioned earlier.
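The promote/demote workflow described above can be modelled as a tiny state machine. This is a toy sketch under simplifying assumptions (one replica link, whole-pod copies instead of snapshot deltas, dictionaries standing in for file systems); `Pod`, `ReplicaLink`, `promote`, and `demote` are hypothetical names, not the ActiveDR interface. It encodes the behaviours Sammy listed: moving a file system into the pod is enough to replicate it, promoting the target starts a fire drill while the source keeps replicating, demoting the target drops the fire-drill data, and demoting the source while the target is promoted reverses the direction.

```python
from typing import Dict


class Pod:
    def __init__(self, name: str, promoted: bool):
        self.name = name
        self.promoted = promoted
        self.data: Dict[str, str] = {}  # toy stand-in: file system name -> contents


class ReplicaLink:
    """Toy model of pod-based replication; not the real ActiveDR interface."""

    def __init__(self, source: Pod, target: Pod):
        if not source.promoted or target.promoted:
            raise ValueError("start with a promoted source and a demoted target")
        self.source, self.target = source, target
        self.received: Dict[str, str] = {}  # latest image shipped from the source

    def replicate(self) -> None:
        # The source always ships; a promoted target (fire drill) does not apply it yet.
        self.received = dict(self.source.data)
        if not self.target.promoted:
            self.target.data = dict(self.received)

    def promote(self, pod: Pod) -> None:
        pod.promoted = True

    def demote(self, pod: Pod) -> None:
        pod.promoted = False
        if pod is self.target:
            # Fire drill over: discard local test writes, resume from replicated data.
            pod.data = dict(self.received)
        elif self.target.promoted:
            # Source demoted while the target is promoted: reverse the direction.
            self.source, self.target = self.target, self.source
            self.replicate()


site_a, site_b = Pod("site-a", promoted=True), Pod("site-b", promoted=False)
link = ReplicaLink(site_a, site_b)
site_a.data["fs1"] = "v1"          # moving a file system into the pod is all it takes
link.replicate()

link.promote(site_b)               # fire drill on site B
site_b.data["fs1"] = "drill write"
site_a.data["fs1"] = "v2"
link.replicate()                   # the source keeps replicating during the drill
link.demote(site_b)                # drill over: test data dropped
print(site_b.data)                 # {'fs1': 'v2'}

link.promote(site_b)               # failover: promote B, then demote A
link.demote(site_a)
print(link.source.name)            # site-b
```

The point of the design is that the only verbs are "move into pod", "promote", and "demote"; there are no separate snapshot, replication, reversal, or fire-drill schedules to configure.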

It's future-proof because, right now, we internally take the delta every five minutes and replicate it, and there's no knob or configuration for it. I'm sure this will get better, and when it does, our customers won't need to go back and change any configuration.

It automatically gets better when you upgrade your software. That's why it's future-proof. With that, I'll hand it back to Manish to continue and put it all together. Thank you, Manish.

Thank you, Sammy.
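The periodic delta replication Sammy described boils down to computing what changed between two consecutive snapshots and shipping only that. The sketch below is a generic illustration, with snapshots modelled as block-number-to-contents dictionaries; the function names are assumptions, and the talk does not describe how the real replication engine computes or transfers deltas. The five-minute cadence mentioned in the talk appears only as a comment, since it is deliberately not a user-facing setting.

```python
from typing import Dict, List, Set, Tuple

Snapshot = Dict[int, bytes]  # toy model: block number -> block contents


def snapshot_delta(prev: Snapshot, curr: Snapshot) -> Tuple[Dict[int, bytes], Set[int]]:
    """Blocks written since `prev`, plus blocks that no longer exist."""
    changed = {blk: data for blk, data in curr.items() if prev.get(blk) != data}
    removed = set(prev) - set(curr)
    return changed, removed


def apply_delta(replica: Snapshot, changed: Dict[int, bytes],
                removed: Set[int]) -> Snapshot:
    """Bring the target's copy up to the newer snapshot using only the delta."""
    updated = dict(replica)
    updated.update(changed)
    for blk in removed:
        updated.pop(blk, None)
    return updated


def replicate_series(snapshots: List[Snapshot], replica: Snapshot) -> Snapshot:
    """Ship one delta per interval; the talk mentions one cycle every five minutes."""
    for prev, curr in zip(snapshots, snapshots[1:]):
        replica = apply_delta(replica, *snapshot_delta(prev, curr))
    return replica


s0 = {0: b"aa", 1: b"bb", 2: b"cc"}   # snapshot at time t
s1 = {0: b"aa", 1: b"BB", 3: b"dd"}   # snapshot one interval later
changed, removed = snapshot_delta(s0, s1)
print(changed, removed)                # {1: b'BB', 3: b'dd'} {2}
print(replicate_series([s0, s1], dict(s0)) == s1)  # True
```

Because the interval lives inside the system rather than in a user schedule, shortening it later is a software change that customers inherit on upgrade, which is the future-proofing argument made above.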

Sure, real quick. Yes, that's correct; that's something we support. Absolutely. And you don't need to think about it while creating a share or placing the data.

You can just use the data. The question was: can you do SMB and NFS on the same share? And the answer is yes. Yeah. So, once again, we are still on the

journey of building full automation: push-button failover from the host side is on our roadmap, and we'll get there. That's right. There are two kinds of use cases. One is where you have two different sets of clients, one on site A and one on

site B, each always accessing its own site's storage. There you don't need that: you use different sets of mount points, which is a slightly different way to recover. Fire drills are fine; you can do a fire drill anytime. The other use case is one set of clients with two sets

of servers at two different sites. That's where you need additional tricks, like DNS renames and remounts. All right, so we went through the major decisions and their implications. We thought we'd put it all together and walk you through one example end to end, with all its different implications. The reason we are doing this is that,

oftentimes, the conversation is about speeds and feeds: do you have this feature, that feature? What never comes out is that there's actually a very different design paradigm here. Features will come over time, but this is at a very fundamental level: we've done something very different, so we thought it would be good to bring that out for

this audience. So I'm going to walk through an example. The example we've chosen is managing VMs on a file system, and I'll show how the four decisions we talked about affect that use case end to end. Starting with that same 500-terabyte storage pool: even to get to this point,

there are a lot of physical constructs you have to manage, like RAID groups, and creating the storage pool itself. Next, you create a file system, because that's the first step to provisioning VMs, so you create a 100-terabyte file system. Oftentimes, that is the maximum file-system size.

At this level, a lot of data management functionality is tied to the file system: snapshots, clones, data replication, data protection policies, and of course storage efficiency features like deduplication. The file system is the unit of management for all of these. Now you start provisioning VMs on it, and that's where you start encountering a bunch

of overheads and inefficiencies. The first is that, because you're managing these VMs as groups, you have to start planning even before you provision: which VMs need the same SLAs, the same snapshot policies, the same data protection policies.

And maybe certain VMs need to be snapshotted together, so they need to be part of the same file system. You need to plan ahead, and there is a cost to be paid when you get that planning wrong. The next issue is a loss of efficiency: this set of VMs won't deduplicate against duplicate data in other VMs stored in different file systems,

or against LUNs on the same system; they don't deduplicate against each other. The third is storage Tetris, which, by the way, is a term a customer introduced to us in an actual conversation. When these VMs start growing and you hit that size limitation,

there may be plenty of space on the array, but because you've hit the size limit of this artificial abstraction, you have to move data around. The next one: if you want to take a snapshot of a single VM, because you're managing VMs as a group, you have to snapshot the entire group, which traps the changes for all the VMs.
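The cost of group-granular snapshots can be shown with a toy count of blocks that a snapshot pins after the live data has moved on. In this sketch, the names are hypothetical, dictionaries stand in for VM disks, and a copied dictionary stands in for a real copy-on-write snapshot. A file-system-wide snapshot traps every VM's overwritten blocks, whereas a VM-granular snapshot traps only the blocks of the one VM you actually wanted to protect.

```python
from typing import Dict, Iterable

Disk = Dict[int, bytes]  # toy model of a VM disk: block number -> contents


def take_snapshot(vms: Dict[str, Disk], members: Iterable[str]) -> Dict[str, Disk]:
    """Freeze the listed VMs (a copy stands in for a real copy-on-write snapshot)."""
    return {name: dict(vms[name]) for name in members}


def trapped_blocks(snapshot: Dict[str, Disk], live: Dict[str, Disk]) -> int:
    """Count blocks the snapshot must keep because live data has since changed."""
    return sum(1
               for name, blocks in snapshot.items()
               for blk, data in blocks.items()
               if live.get(name, {}).get(blk) != data)


vms = {f"vm{i}": {blk: b"v0" for blk in range(4)} for i in (1, 2, 3)}
group_snap = take_snapshot(vms, vms)       # file-system-wide: all VMs in the group
vm1_snap = take_snapshot(vms, ["vm1"])     # VM-granular: only the VM you care about

for disk in vms.values():                  # every VM keeps overwriting two blocks
    disk[0] = disk[1] = b"v1"

print(trapped_blocks(group_snap, vms), trapped_blocks(vm1_snap, vms))  # 6 2
```

The gap grows with the number of VMs sharing the file system and with how long the snapshot is retained.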

Another customer described this as snapshot bloat. It's another form of inefficiency. If you want to change the snapshot policy or data protection policy of a single VM, you have to do roughly the equivalent of this: if you don't like the colour of your house, build a new house, paint it the new colour, and move into it. So you build a

new file system and move the VM into it; that's the workaround. The last one: when it comes time to do a hardware upgrade to a newer controller or denser drives, data migration, renewal costs, and software licensing costs get introduced.

Now, let's put some orange on this picture and look at how this would look on a FlashArray unified system. First, the file-system abstraction and its limitation: we do have a file-system abstraction, but there's no size limit on it. And whether you've put a VM on one file system

or another has no downstream implication; they deduplicate against each other. On FlashArray, each individual VM is a managed directory, and, by the way, this happens automatically when you provision VMs through a plug-in.

We also have a policy that you can attach to any directory: any directory created within it automatically becomes a managed directory, and from that point on you get managed-directory-granular functionality. If you manage VMs through a plug-in, then as you provision them

they automatically become managed directories, so there's no upfront planning and no placement to think about. There's no loss of efficiency, because all of this data sits on a global dedupe engine. In fact, if there are block volumes stored on that array, they also deduplicate against all the other data.

That's true whether it's VMs, shares, or anything else. VMs can grow independently, because there's no file-system size limit; there's no architectural limitation here. But if you want to, you can control the size of a VM's managed directory, or even of a whole file system, through a policy rather than a size limit.
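Why file-system boundaries cost deduplication can be shown with a toy fingerprint count. The sketch assumes fixed 4-byte blocks and SHA-256 fingerprints purely for illustration; real dedupe engines use their own block sizes and fingerprinting schemes, and `split_blocks` and `physical_blocks` are hypothetical helpers. Each key in the input is a dedupe domain: per-file-system domains can only deduplicate within themselves, while a single global domain lets VMs and block LUNs deduplicate against each other.

```python
import hashlib
from typing import Dict, List

BLOCK_SIZE = 4  # toy block size in bytes, chosen only to keep the example short


def split_blocks(data: bytes) -> List[bytes]:
    return [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]


def physical_blocks(domains: Dict[str, List[bytes]]) -> int:
    """Unique blocks stored when each domain deduplicates only within itself."""
    total = 0
    for objects in domains.values():
        fingerprints = {hashlib.sha256(block).hexdigest()
                        for obj in objects for block in split_blocks(obj)}
        total += len(fingerprints)
    return total


template = b"kernlibsapps"        # two VMs cloned from the same template...
vm_a = template + b"aaaa"
vm_b = template + b"bbbb"
lun = template                    # ...and a block LUN holding the same image

per_file_system = {"fs1": [vm_a], "fs2": [vm_b], "block": [lun]}
global_pool = {"pool": [vm_a, vm_b, lun]}
print(physical_blocks(per_file_system), physical_blocks(global_pool))  # 11 5
```

With per-file-system domains, the shared template blocks are stored three times; in one global domain they are stored once, and only each VM's unique block is extra.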

If you want to take a snapshot of a single VM, you simply create a snapshot policy with the new snapshot schedule and attach it to that VM, and you get your new set of snapshots. If you want to change it, you change it in place; no data migration is required. And, of course,

with our Evergreen and non-disruptive upgrade story, there are never any forklift upgrades in the life cycle of that gear, or, I should say, of that data. So this is how this use case looks when you host it on a modern architecture like Pure FlashArray: VM-granular and flash-optimised. If you liked anything you saw today,

this is the QR code: you can sign up for a test drive and experience everything Sammy and I talked about. You can try it for yourself.

You don’t have to tolerate the pain of existing unified storage. The FlashArray™ File Services stack was designed from the ground up with the granularity and scale to manage key use cases around files and virtual machines, while maintaining the ease-of-use you’ve come to know and love in the Everpure block protocol.
