47:17 Webinar

Simplify your Hybrid Cloud Storage with Pure Cloud Block Store

See how Pure Cloud Block Store can set you free from private- and public-cloud data silos, so you can accelerate innovation.

This webinar first aired on June 14, 2023


All right, welcome to our session. My name is David Stamen. I'm the global practice lead here at Pure for virtualization, cloud, and automation. I'm really excited to be here talking to you about cloud and Cloud Block Store, and I'm joined by Kyle. Hey, my name's Kyle Grossmiller. I'm a senior solutions engineer focused on Cloud Block Store. I've been at Pure about 8.5 years and have worked on a few different product areas, including VMware and backup and restore, and these days I'm helping out on Cloud Block Store. So we've got some fun stuff, we think, to talk about today.

Yeah, there's definitely a lot of changes. What we're going to do is paint a couple of pictures and then talk about how we actually solve some of those use cases. We're going to start with the public cloud overview: what we're seeing in general as customers move, what's actually happening, and some of the things we can do to help with that situation. Then we'll provide some real-world scenarios where we're actually seeing benefits.

If we have time, we'll jump into a demo. If not, we always have our demo booth, and we'll definitely save time for Q&A. So when we think about the public cloud, there was this beautiful promise, right? You need to move to the cloud because it has so much cost flexibility; it has elasticity, accessibility and availability, and security.

These were always at the top of everybody's mind. When I go to the cloud, I'm only paying for what I use. When I need to scale, I can easily scale my workloads. I can decide what my availability is and what my durability is. And it's not my data center, so they're going to make sure that it's secure.

In some cases, a lot of these things are actually true, but each of them comes with a challenge. Take cost flexibility: you're locked into a price, and the provider says, hey, we're going to raise your prices by 11% next month, and you're like, OK, I can't do anything about it. The other big challenge, which we'll get into, is that in the cloud, while you only pay for what you use, you tend to have to over-provision, so you pay for more than what you're actually using. That's where we're going to help a lot.

With elasticity, you can scale and auto-scale, but there wasn't a lot of visibility into it. And then my favorite: accessibility and availability. A lot of times you need to make multiple copies of your environment, and you don't have the same snapshots. But one of the challenges is that the cloud is not truly unlimited. You think about the cloud as just being out there: if I want a VM, I can get a VM.

We actually talk to a lot of customers that say, I want to deploy a virtual machine in Azure East, and the answer is, sorry, no more of that compute type; go deploy somewhere else, where none of your workloads are. So the cloud still has its own limitations. And when they need to scale, it's not going to take just a month or two to spin something up, as it would in a software scenario; they have to physically build out a data center and go through the same supply chain challenges that everybody is having. That's the big challenge.

And to add to that, an important point in the scenario David just talked about: if you're kind of SOL because you can't deploy something in one region, you may be stuck replicating that data to a different region, and then you start to pay ingress and egress charges between regions, right? So there are some challenges like that that aren't necessarily apparent on the surface but come into play in budgets very quickly.

Yeah, and then security. Security is different: while things are secure, you're bound by what the provider can offer. So we'll talk about things like at-rest encryption. Everyone here is familiar with SafeMode and ransomware remediation, and those are a lot of the features that we can bring. That's where we're going to talk about some of those particular use cases.

And then the pitfalls, right? The compute and storage silos, the application refactoring, the complexity, and the disruption. Some of these we can talk about today; some you'll see in the next few months or even longer. But we're disaggregating your compute and your storage. I hate to say double-click, but we're really going to dive a little deeper into some of the challenges that we can actually solve here.

This is one of my favorite slides to talk about. It's about understanding that when you shift to the cloud, cloud data is entirely different. Obviously, if you think about it, it is. But when we think about moving data to the cloud, there are, I would say, two main buckets that customers fall into.

There's the customer who was either born in the cloud or moved to the cloud rapidly because they had a very quick timeline. For them, looking at other solutions has one meaning: they want value to equal cost. They need a way to reduce their costs and optimize. Otherwise, everything is working pretty well for them.

Then we have the other side: customers who are early on in their cloud journey. Their executive staff says, we want you to move to the cloud in the next two years, maybe three, and you don't have anything out there.

So you're looking at what it takes to actually migrate this application to the cloud. Maybe you're a Pure customer today and you love snapshots, replication, ActiveCluster, ActiveDR, and asynchronous replication, and you've built all of these into your workflow. When you shift to the cloud, none of those exist. So you have to figure out what is more important, right? Can I take my existing investment, migrate it to the cloud, and keep using all of those same business processes?

So it's not just about cost, and that's a big thing that we want to change. Yes, it's probably going to be cheaper, but think about all the extra value you're going to get from all those things. We're going to dive deeper into each one of those four categories.

Yeah, right. And I think there are a lot of differences between on-premises systems and the public cloud, but there are also a lot of similarities that have cropped up, and we see this time and time again in customer accounts. If you're not careful and not very prescriptive about how you build your cloud strategy, you're stuck in that age-old scenario of building data silos in your cloud environment. A lot of that comes from the lack of features and functionality, or just the cost, of some of the native offerings available in both AWS and Azure today.

Out of curiosity, by show of hands, how many folks are in Azure today? OK. How about AWS? And how about both? Right on. OK. So I'm guessing that for those folks who are in one or both of those clouds, this is hopefully ringing somewhat true.

Think about Azure managed disks, for example. With premium SSD, you're stuck in a scenario where you're potentially over-provisioning capacity or performance or both. To fit, say, a 4.1-terabyte database, you may be stuck provisioning an 8-terabyte premium SSD volume to clear that capacity limit, and you're wasting 3.9 terabytes of space, right?

And in fairness, AWS and Azure don't know what you're doing with it; they're just reserving that capacity and that performance for you to use. So again, there are some very tricky pitfalls, I think, that come into play here.
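The over-provisioning arithmetic in that example can be sketched in a few lines of Python. This is only an illustration: the tier list is a hypothetical set of fixed disk sizes in terabytes, not an actual Azure or AWS catalog, and the function names are made up for this sketch.

```python
# Hypothetical fixed disk sizes in TB (an assumption for illustration,
# not a real cloud provider's tier list).
ILLUSTRATIVE_TIERS_TB = [1, 2, 4, 8, 16, 32]


def smallest_fitting_tier(data_tb, tiers_tb=ILLUSTRATIVE_TIERS_TB):
    """Return the smallest fixed-size disk that can hold data_tb."""
    for size in sorted(tiers_tb):
        if size >= data_tb:
            return size
    raise ValueError(f"no single tier can hold {data_tb} TB")


def stranded_capacity(data_tb, tiers_tb=ILLUSTRATIVE_TIERS_TB):
    """Return (provisioned_tb, unused_tb) for a dataset of data_tb."""
    tier = smallest_fitting_tier(data_tb, tiers_tb)
    # Round to hide floating-point noise in the subtraction.
    return tier, round(tier - data_tb, 2)


if __name__ == "__main__":
    provisioned, unused = stranded_capacity(4.1)
    print(f"provisioned {provisioned} TB, unused {unused} TB")
```

With the 4.1-terabyte database from the talk, the sketch picks the 8 TB size and reports 3.9 TB paid for but unused.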

And then, of course, enterprise features and functionality. Ultra SSD on Azure does not have a good snapshot story, right? So you're stuck making multiple copies. When you pick a capacity size for ultra SSD, chances are you're going to have to double or triple it, and then figure out, well, where else do I ship it to?

Because what happens if I have an outage and I need to restore it? Right? Those kinds of considerations come into play quite often. And the big thing with this chart is that in Azure there are four main disk types, and in AWS there are even more, and you have to balance all of these features. For multi-cloud customers, think about nine different disk types to manage, each with different considerations.

Take multi-attach, snapshots, and backups. If you're in Azure, premium SSD can do snapshots and backups, but it can't do multi-attach. Ultra disk can do multi-attach and lets you scale IOPS independently, but then you don't get snapshots, backups, or replication.

So there's always this trade-off. And my still-favorite feature in Azure, which they're eventually going to fix, is the inability to do an online disk resize. Think about all your business-critical applications running in Azure. You run out of space, or you're approaching it. Well, you have to shut down that VM, resize the disk, and power it back on.

Not really good for those tier-one applications. Yeah, no doubt. So what have we done at Pure? Our engineering team has gone out and evaluated all of those different storage options in AWS and Azure, and we've come up with what we believe is the best-of-breed solution to effectively SaaS-ify our on-premises FlashArray.

It's the exact same code base. It's the exact same Purity operating environment as our on-premises FlashArrays: same feature set, same functionality. What that enables you to do is streamline moving into and out of the public cloud using Pure replication technologies.

A huge benefit that I don't think we talk about enough is that when we replicate snapshots, we're replicating deduplicated, compressed, and encrypted data, and we never have to send the same bits twice. That has huge potential for savings on your ingress and egress charges as you start to move data around to different regions, or even different availability zones, inside the public cloud.

So there's a huge amount of benefit there. And we've definitely encountered customers who have moved one or more workloads to the public cloud thinking it's the greatest thing ever, and then the bill comes, or they can't meet a performance SLA that they thought they could meet.

So they have to move it back out of the public cloud. I think using that Purity operating environment really gives you the flexibility to move your data to where it needs to be, at the minimum cost and as quickly as possible. And the one thing that sets us apart, if we all listened (not to Shaq, to Charlie G.) when he talked in the keynote, is the most important thing here: one management plane through Pure1, one Purity operating system.

We didn't just create a cloud OS. We took our on-premises OS and everything we're doing there and ported it to the public cloud as an exact software-defined solution. This isn't physical hardware sitting in a data center, like some other vendors are doing; we are truly software-defined.

So any time the cloud gets better, we have the ability to get better. And we're actually going to showcase a pretty awesome thing that will be shipping in the next two to three weeks.

Yeah, absolutely. But just to quickly level-set: what are some of those enterprise features? With ultra SSDs, premium SSDs, and EBS block storage, some of them have some of these, but not all of them have all of these, and these are the enterprise-level features that on-premises customers know, expect, and, quite frankly, require. Things like data reduction. Thin provisioning is a huge one, in terms of both capacity and performance. Always-on encryption, right? Having that data protected.

We're FIPS 140-2 certified, and we use AES-256 encryption at rest, so all that data is very secure in the public cloud. That matters because the data is not under your physical control; it's sitting in an AWS or Azure data center. So we actually enhance that protection.

Another important thing to mention is that while we operate within the bounds of Azure or AWS availability zones, we actually improve on those SLAs because we have a dual-controller architecture, for example. Should a VM go down, or should a rack go down in an AZ, that secondary controller VM will take over with 100% of the performance and 100% of the features, without any hit to performance and transparently for your applications.

So there's a ton of upside just in terms of SLAs, and uptime in the public cloud is of course a very big deal. Yeah. And one of the things we obviously get asked, since we're in AWS and Azure, is: what about GCP? We'll talk about that later. Well, not today, but after this.

We don't really care what cloud you're in. While the architecture under the covers looks entirely different, the top-level feature set is exactly the same as in your on-premises environment: same API, same everything. That is really the important thing. But what's really cool is when we think about Evergreen in the public cloud and start looking at what is available on premises.

Evergreen is traditionally on a three-year cycle, right? You get new controllers, you get new disks, and your array looks brand new again. If you want to go to the next slide: think about what we have actually done. We first created Cloud Block Store, I think, 3.5 or 4 years ago. In AWS, we had to use what we call the virtual shelf construct: two EC2 instances with a bunch of EC2 instances attached, and we had to use three shelves' worth. So a maxed-out instance was actually 21 to 23 instances, because that's just what worked. We still had savings and value, but it didn't make a lot of sense.

When AWS released the i3en instances, we were able to cut that down to two shelves and actually give you better performance. And we're seeing that next generation of evolution within Azure too. While we can tell you everything we're using under the covers, does it really matter to you if you're just managing a Purity interface?

The great thing is what happens to all of those constraints that the architecture brings. When we first deployed in Azure, we had to use a D-series instance, and we had to use ultra disks, because ultra disks require specific instances. Ultra disks had the performance and they had multi-attach, which is what we needed. And that's where we've been lucky: we are a software solution. Azure comes out with really cool stuff, and we get to take advantage of it.

You'll see here at the bottom that we're going to be releasing a new Cloud Block Store SKU, the MP series. Instead of using ultra disks, we're now going to use the new Premium SSD v2 model, and we're also adopting an E-series generation. So not only are we going to be faster from a generational perspective, since we're two CPU generations newer, we're also getting the additional benefits of the Azure instance types. And since we're using Premium SSD v2, the actual cost of the infrastructure goes down.

Think about customers who are deployed in Azure today and are migrating to the new Azure SKU: they're getting something that's probably about 30% faster and, in some cases, 50 to 70% cheaper with their existing license. So think about that value: where you were saving $1,000 a month, all of a sudden you're saving $10,000 a month. And again, that value is not to be underestimated.

Yeah, and the thing I was going to add is that we've taken some of those cost savings, right?

And we've enhanced the entire platform. The v5 E-series VM that David talked about cannot be overstated, because we're basically doubling our available back-end I/O with that new controller VM. So this thing is getting a lot more performant and a lot cheaper as well. And I think that's been a wake-up call for a lot of public cloud customers: block storage is in a lot of ways kind of a sunk cost within the public cloud, and you can use that Azure and AWS budget for more interesting things. So let us help you minimize that storage TCO spend and use more impactful Azure and AWS apps to help the business.

We've got a few common use cases with CBS that we wanted to touch on, and this list will be expanding soon as well. For today, the first one is really that TCO play, which I think is of utmost importance and probably top of mind for a lot of people. Data reduction comes into play, compression comes into play, thin provisioning comes into play. When we start to talk about 30 or 50 terabytes at minimum, that's where you really start to see our data reduction take effect and save you money relative to using the native offerings on their own. If a customer has five terabytes of data, it's probably not worthwhile to deploy CBS for the savings. If you need the enterprise features, absolutely deploy it, but the cost savings don't really come into play until you hit maybe that 30-to-50-terabyte range.

Disaster recovery is obviously a huge use case, right? For a lot of folks, the public cloud is a very attractive DR target versus going out and buying space, building a data center, and basically building an entirely separate DR site.

I think the public cloud has a lot of utility and functionality to help with disaster recovery. Think about using Pure Storage replication: we have multiple replication options that we'll talk about more later. If you want that cold or warm DR scenario, where you've got a Cloud Block Store instance up and running, we've seen numerous customers do that.

They replicate over, say, a SQL VM or a SQL volume. But then you can also run test/dev against that as well, right? You've got this SQL instance up there running with a very recent copy of your SQL data, and you're able to do test/dev work against it in the public cloud, because you're paying for it anyway. So I think there's a lot of value there as well.

And then the last one: sometimes you want to move out of AWS or out of Azure, or between them, and we have that functionality as well. You can basically move data between clouds using our replication technology. And again, that's going to save you some money, because we're sending reduced, encrypted data and we never send the same bits twice.

Cool. So when we think about this, that first one is value equals cost. The other three are value equals features and cost, because you're getting all of those benefits. So what are some of the things we can do to drive down those costs?

The first one is extremely straightforward if you know Pure: data reduction is our key thing. We're going to save you money because we're only storing the unique blocks on the back end. Data reduction, thin provisioning, all of that: it's amazing. The other one I like to talk about is performance.

My favorite and least favorite question is: how fast is Cloud Block Store? Is it the same as a FlashArray? No. We're competing against cloud storage, so it's not going to be 6500 IOPS at six gigs per second. We're competing against the local disks. But what we do give you is performance efficiency.

We're going to highlight that in about two slides, but think about it this way: instead of saying this volume needs five IOPS, that one 10, 15, 20, 30, and building out this bubble, you say, I have an array that does all of this. If a workload needs that performance, it has access to it.

And we're doing all these things to actually save you money. We save on compute. And you're like, hey, David, how do you save me money on compute? You're a storage company. Think about io2, io1, all of those high-performance disks: the instances that use them need to be storage-optimized, and storage-optimized instances come at a premium compared to CPU- or memory-optimized ones. If you're running a database server, you probably want a memory- and CPU-optimized instance, not a storage-optimized one. But if you want ultra, you've got to use that. All we care about is the network adapter, because we connect into your Azure and EC2 VMs over the network. So if you need to push 10 gigs of throughput, get a VM with a 10-gig NIC. We don't really care.

There's a lot of optimization there. And then on the network, this is what Kyle highlighted, and it cannot be stated enough. There are two savings we'll see on the network side. One of them is the actual cost savings from an ingress and egress perspective. If you're migrating data out of the cloud, you pay for every bit; when you move data into the cloud, it's free. But take a scenario where you have to move 100 terabytes of applications in. It's free, but it takes you 10 days. If you're doing that with Pure at, say, 5 to 1, you're only sending 20 terabytes of data in.

So that's now taking you two days instead of 10. What happens if you're doing this for disaster recovery? You replicated 100 terabytes in, and when you want to sync back, you have to do a full sync. That's 100 terabytes at 35 cents per gig. My math says, let's just say, $35,000 just in egress, plus an additional 10 days to get that application back up and running. Assuming a 10% rate of change, we're only going to send a terabyte out, and it's going to cost you $350.

So it's not only the value of the cost; it's the value of the time and effort you need to actually move that application. And again, these are real-world scenarios. This one was modeled off of the ultra disks.
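The egress and transfer-time figures quoted here can be reproduced with a short Python sketch. It uses the speaker's example numbers only (35 cents per gigabyte, a link that moves 100 terabytes in 10 days, a 5:1 reduction ratio); none of these are published prices or guaranteed ratios, and the function names are hypothetical.

```python
def egress_cost_usd(terabytes, usd_per_gb=0.35):
    """Egress charge for a transfer, using decimal units (1 TB = 1000 GB).

    The default rate is the example figure from the talk, not a real price.
    """
    return round(terabytes * 1000 * usd_per_gb, 2)


def transfer_days(logical_tb, reduction_ratio, link_tb_per_day):
    """Days needed to move logical_tb when only reduced data crosses the wire."""
    return logical_tb / reduction_ratio / link_tb_per_day


if __name__ == "__main__":
    # Full resync of 100 TB versus sending 1 TB of changed data.
    print(egress_cost_usd(100))  # full sync out of the cloud
    print(egress_cost_usd(1))    # reduced, changed data only
    # Migration in: 100 TB over a link that moves 10 TB per day.
    print(transfer_days(100, 1, 10))  # no data reduction
    print(transfer_days(100, 5, 10))  # 5:1 data reduction
```

The point of the sketch is that both the bill and the elapsed time scale with the bytes actually sent, so any reduction applied before transfer shrinks both.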

As I was saying, that entry point of about 70 terabytes was where we were, I think, about a year ago in Azure. And the more you write, the more you save, because, again, data reduction. With the premium disks, we'll see these entry points start at that 30-to-50-terabyte mark, so there's a much lower entry point for customers to see that first-year savings.

The other big thing around cost: as I mentioned, when you provision performance, you provision it on an individual disk, and you have to provision for peaks. Think about a data warehouse application that has a bulk load twice a day and needs a whole bunch of performance. You're provisioning for that peak, 20,000 IOPS per VM, and you're paying for it all the time, but you're only using it 20% of the time. So what happens? You're wasting it. And think about this at scale: that times 20 is a pretty big chunk of change. I guess we don't really have that slide in this deck, but think about it this way: Cloud Block Store is an array.

When you have an array that has, say, 40,000 IOPS available to it, you get that balancing of workloads: when one application needs that performance, it's available, and when another application needs it, it's available. You don't have to say, my SQL Server C: drive doesn't need anything, the data drive is going to need 20,000 IOPS, the log drive is going to need 10,000, tempdb is going to need 50,000. You don't have to look at each individual layer. It allows you to step back and manage things at a much simpler level.

And I think an important point here too is that CBS also coalesces writes from non-adjacent workloads. Say, in this example, one of them is Oracle and one of them is a data warehouse.

We're going to be able to coalesce those writes, so you actually get improved write performance from non-adjacent workloads on the same CBS array. It's also a really nice workload consolidation play from that perspective. You basically get more for your money when you use it.

Cool. And then again, with io2 you get to provision IOPS independently, but it requires specific VM types, and ultra is the same thing. The interesting thing about ultra disk is that not only do you pay for IOPS, the instance, and throughput, there's also a vCPU reservation: as soon as you enable ultra SSD functionality on those instances, you automatically get charged for each core you're using, even if you haven't attached a disk. Say you're deploying the VM now and attaching the disk in 10 days: you're paying for that reservation the whole time.

So again, this is where we see those savings. I'm going to skip over some of these.
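The earlier point about provisioning every disk for its own peak, versus sharing one array's performance, comes down to "sum of peaks" against "peak of sums". The Python sketch below is a simplified model with made-up workload numbers; it ignores headroom, latency targets, and any real array limits.

```python
def per_volume_provisioning(demand_by_volume):
    """IOPS to provision when each volume is sized for its own peak."""
    return sum(max(series) for series in demand_by_volume.values())


def shared_pool_requirement(demand_by_volume):
    """IOPS a shared pool needs: the busiest moment across all volumes combined."""
    return max(sum(slot) for slot in zip(*demand_by_volume.values()))


if __name__ == "__main__":
    # Hypothetical IOPS demand per time slot; peaks land at different times.
    demand = {
        "warehouse": [20000, 2000, 2000, 2000],
        "oltp":      [5000, 10000, 5000, 5000],
        "logs":      [1000, 1000, 1000, 8000],
    }
    print(per_volume_provisioning(demand))
    print(shared_pool_requirement(demand))
```

When workloads peak at different times, the pooled requirement is lower than the per-volume total; if every workload peaked in the same slot, the two numbers would be equal and pooling would save nothing.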

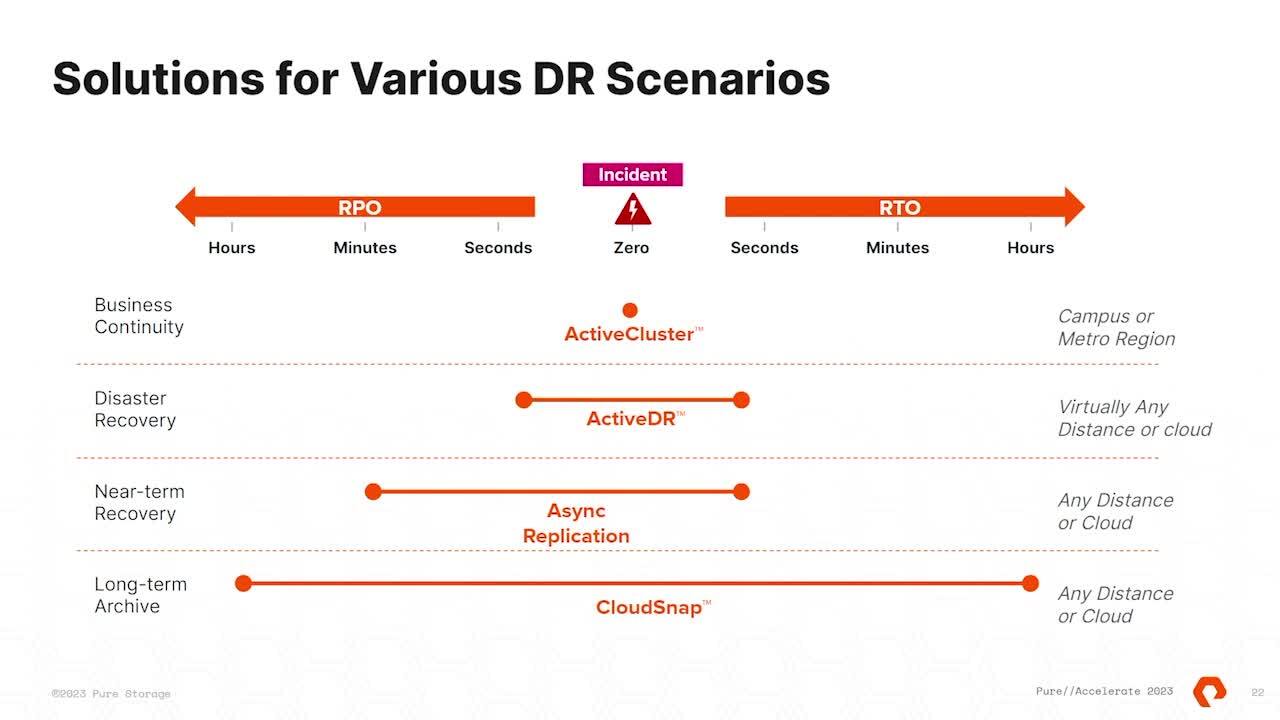

We talked about this. So, disaster recovery. Yeah. Depending on the workload and the level of SLA you're required to meet, we have numerous solutions out there. Part of that is the problem of choice, but this is the full ecosystem of availability that we offer today, and there are tons of options.

ActiveCluster, I should mention, is the only one with a scenario that CBS doesn't support, which is important to note. If I've got an on-premises FlashArray and I'm moving or replicating data to AWS or Azure: ActiveCluster requires a round-trip latency within 11 milliseconds. So on-premises FlashArray to Cloud Block Store is the one instance where ActiveCluster is not supported. All of the other options here work, and they all have a higher level of RPO and RTO. ActiveCluster does work if I've got CBS instances within a region, in two different availability zones, if you want that HA experience in the public cloud, with transparent failover should an AZ go down. I think AWS just had an outage yesterday, so it does happen on occasion.

The only other one I wanted to touch on is CloudSnap. It's a really cost-effective method for customers to maintain their data storage SLAs. Basically, you're replicating Pure Storage snapshots to Azure Blob or AWS S3, so you have a really low-cost option to offload your data, keep it around, or move it to a completely different region. Should something really impactful happen to an entire Azure or AWS region, the data is at least available somewhere else. You can rehydrate it and rebuild your infrastructure in a separate region if there's some large outage.

Yeah, and the one thing to think about is that there are many different solutions depending on your requirements. Do you just need business continuity, where you run ActiveCluster for high availability across availability zones? Do you need hybrid replication across regions, or ActiveDR? There's going to be a shift of complexity versus cost versus requirements, and it's not a case of picking one. You can mix and match all of these and build out a pretty cool environment.

This is something we did as what we call a Pure Validated Design. It's essentially a way to think about how to build a highly resilient architecture that takes advantage of a bunch of really cool stuff. In data center A, we have a lot of our primary workloads. In data center B, we have a business-critical application, so we had ActiveCluster set up there. While we were doing all of that, we were also offloading snapshots to a FlashArray//C.

So that primary array kept the most recent day of snapshots, and for the next 7 to 30 days they sat on that FlashArray//C. If something happened to our data, we could pull it back down on premises. But what happens if our data centers no longer exist? Take my case: we worked in health care, the data centers were two miles apart, and I live in Florida.

That means a hurricane is definitely going to hit both of them. So let's replicate up to the cloud, right? We're going to recover to Cloud Block Store, and we have that ability. But that's if we want warm data. What happens if we have 10 databases, and eight of them are business-critical while two of them are nice-to-haves? Let's store those with Purity CloudSnap in S3, cheap and deep, and keep them for as long as we want. When we need that data, we rehydrate it into Cloud Block Store. So it shows that you get this ability to mix and match and see what actually works for your particular environment, and it showcases the different use cases.

And I would just add on that slide real quick: you can use something like Azure Site Recovery to fully orchestrate that backup solution for the SQL example. You can use ASR to replicate your SQL boot volumes over to Azure, spin up an Azure SQL VM, and then use Pure Cloud Block Store to basically bring it back.

Whoops. There we go, and we're back. Yeah, I think we lost our preview. We'll see if it works. Let's go. So when we think about this, CloudSnap is one region, but we actually have the ability to do multi-region cloud replication. Say you want to offload to East but also have that secondary copy replicate to West. It's all stored in that proprietary, metadata-encrypted format.

So again, it shows how you can recover: if you're in Cloud Block Store or in the cloud, you want to make sure that you're storing a local copy as well as a regional copy, and we have many ways to accomplish that. Yeah, because disaster recovery is not a snapshot, right?

You need to move that data somewhere else to have a true DR solution. And again, there's a multitude of ways to accomplish that. Yeah. And then when we think about Pure: snapshots, right? What do we do very well? Data reduction and snapshots. Our snapshots are awesome.

Cloud does not have awesome snapshots; there are a lot of challenges there. So when we think about what makes sense, it's taking dev/test and analytical workloads and creating clones, which we're very good at. And as Kyle mentioned, disaster recovery and test/dev are two of our most popular use cases.

There are also two of the most popular use cases that work together. So think about a disaster, disaster recovery, you're replicating data to the cloud, it sits there, you're not doing anything with it and you're paying for it's just sitting there warm. Why not make better use of that? If that was a production database, why not clone that for test of UAT staging?

You're refreshing those databases nightly and taking advantage of all of that data. What happens when you actually have AD R event? You've actually been refreshing every single one of those databases once a day for the past two years and said, hey, if I have to do ad R scenario, I know it's gonna work because it's been part of our business processes for the past two years.
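The nightly refresh idea can be sketched in a few lines of Python. This is a toy model only, not the Purity API: the `Snapshot` and `CloneVolume` classes and the `nightly_refresh` function are hypothetical names made up for illustration. It shows the core step of picking the newest replicated snapshot of a production volume and pointing every test, UAT, or staging clone at it.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class Snapshot:
    """A replicated snapshot sitting on the DR-side array (hypothetical model)."""
    source_volume: str
    taken_at: datetime


@dataclass
class CloneVolume:
    """A test/UAT/staging volume that gets overwritten from a snapshot."""
    name: str
    refreshed_from: Optional[Snapshot] = None


def latest_snapshot(snapshots: List[Snapshot], source_volume: str) -> Snapshot:
    """Return the most recent snapshot of the given source volume."""
    candidates = [s for s in snapshots if s.source_volume == source_volume]
    if not candidates:
        raise ValueError(f"no snapshots found for {source_volume!r}")
    return max(candidates, key=lambda s: s.taken_at)


def nightly_refresh(
    snapshots: List[Snapshot], source_volume: str, clones: List[CloneVolume]
) -> Snapshot:
    """Point every clone at the newest snapshot of the production volume."""
    newest = latest_snapshot(snapshots, source_volume)
    for clone in clones:
        clone.refreshed_from = newest
    return newest


if __name__ == "__main__":
    snaps = [
        Snapshot("prod-db", datetime(2023, 6, 12)),
        Snapshot("prod-db", datetime(2023, 6, 13)),
    ]
    clones = [CloneVolume("test"), CloneVolume("uat"), CloneVolume("staging")]
    newest = nightly_refresh(snaps, "prod-db", clones)
    print(f"refreshed {len(clones)} clones from snapshot taken {newest.taken_at:%Y-%m-%d}")
```

Running the same refresh every night is what makes the DR copy a rehearsed path instead of an untested one.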

I'm not changing anything. I'm just saying, instead of doing servers two, three, and four, I'm now doing server one. And so it's proving the value that you get that you just cannot get in the cloud. And if I'm a DBA doing dev/test in the cloud, I want the freshest, most recent data available to do test/dev work against, right? And because our snapshots are more or less

instantaneous to restore from, that DBA has that level of insight into the most recent available data. Cool. So that was, yeah, how are people dealing with the operating system? Right. Yes. What is in here to ask us? Yeah. So that's kind of,

I think we have the slide here and I'll repeat the question. So we don't have that. I thought we had that slide here. So the question was how do you handle the operating system disks, and what are the protocols you support? So great question there.

So the one thing to think about with disaster recovery today, and there's more stuff we can have one on one with NDAs and your account teams, is that today, cloud-based VMs have to boot off of cloud-based storage. So when you're doing disaster recovery or migration, you're treating it as two separate workflows. One is for the operating system,

which can be done thousands of ways. You can use VMX, Commvault, um, any CRS, right? You're essentially taking an on-premises VM or an on-premises physical server and converting it to a cloud-based VM. Where Pure comes in is it orchestrates all of that data. If you're using some of our partners, they can actually orchestrate both of them together, so you don't have to use two separate

tools. But it really comes down to what I call a modern-ish application or a legacy-ish application. If it's that legacy application that someone installed 10 years ago and it's as old as we can. I actually heard this in a conversation yesterday: I have an application in my data center that's old enough to drink now.

And those are the applications where you actually have to use that Azure Site Recovery and convert it, because no one knows how that's going to work. It's probably using some USB keys. But if you have a modern application, you treat the OS as ephemeral: databases, file shares, applications that are deployed through infrastructure as code.

Going back to that very first slide: how are you actually getting your data to the cloud in the first place? Well, I don't care about my OS disk. It's a database server. All it cares about is the database and the log. So I'm going to deploy a SQL image, an EC2 AMI. I'm going to deploy it, snap my volumes, and have it up and running. Or I might already have a warm SQL server there

with blank disks, and all I have to do is a quick snapshot refresh and get it. So that's where we see some things happening, and there will be some things in the future that will simplify the migration and the disaster recovery. At Accelerate next year, we'll have a much more exciting answer to that question. But to come back to it, you mentioned

protocols. CBS is iSCSI today. NVMe/TCP is on the horizon. Right now, Linux kernels support it, Windows does not, and different clouds support it. So it's one of those things that will be there. But there is no Fibre Channel in the cloud.

So, technical demos, right? I think we have enough time. We made it there, and hopefully it works. So we're going to do two things. One of the things that we didn't really highlight too much was how Cloud Block Store gets deployed. Well, it's easy. You go to the marketplace, you subscribe to it,

you enter some details, and you say go. What we have the ability to do is actually tie in. Is there a video here? OK. How does all the fun demos? So what we actually do is, when we deploy via Terraform, you set variables: the Key Vault used to store your credentials, to net pairing because we actually have to SSH to be able to do some configuration,

a resource group, a virtual network, and your subnets. And again, we have all this documented in our platform guide. But what we do is very easy. I love code. I love being able to do automation because it makes things very repeatable. So you pass in all of those parameters, you put the Purity version in the plan, and you say next. It goes out and deploys Cloud Block Store.

It's software defined, so it's going to take you about 10 minutes to actually deploy. So it's actually gonna work. So I know it. So when we think here, this is where we are: we have our main.tf. If not everyone's familiar with Terraform, it's essentially files that are built out.

main.tf is where you have the bulk of your code. You have variables, which define the variables. You have the tfvars, which are the inputs. You do an init, which initializes everything. And then you do a plan, which pretty much says: everything you put in, does it actually work? It's going to build out and tell you what is actually happening. Once you're there,

you do terraform apply. Normally you have to approve, but we don't, we just go force in production, so you auto-approve it. What this is actually going to do is go out and deploy all of it, and you can build these full runbooks. We have something called a quick launch. It requires VNets, subnets, NAT gateways, all of that, and you may not have that in your environment.
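The init, plan, apply sequence described here can be sketched as a small Python helper that only builds the command lines. The `terraform init`, `terraform plan`, and `terraform apply` subcommands and the `-var-file` and `-auto-approve` flags are standard Terraform CLI. The function name `terraform_commands` and the file name `cbs.tfvars` are assumptions made up for this illustration. Nothing is executed, so it is safe to run without Terraform installed.

```python
import shlex
from typing import List


def terraform_commands(var_file: str, auto_approve: bool = False) -> List[List[str]]:
    """Build the init -> plan -> apply command sequence for one deployment.

    var_file is the tfvars file holding the inputs (hypothetical name below).
    auto_approve skips the interactive approval prompt on apply.
    """
    apply_cmd = ["terraform", "apply", f"-var-file={var_file}"]
    if auto_approve:
        apply_cmd.append("-auto-approve")
    return [
        ["terraform", "init"],                           # download providers, set up state
        ["terraform", "plan", f"-var-file={var_file}"],  # preview what would be created
        apply_cmd,                                       # actually deploy the resources
    ]


if __name__ == "__main__":
    # Print the commands instead of running them; pass each list to
    # subprocess.run(cmd, check=True) to execute them for real.
    for cmd in terraform_commands("cbs.tfvars", auto_approve=True):
        print(shlex.join(cmd))
```

Skipping the approval prompt is convenient for a lab or a demo. For anything that matters, reviewing the plan output before apply is the safer default.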

You might have a net-new greenfield environment. That particular quick launch will actually go out and deploy every single prerequisite. So think about POCs, labs. You don't want to leave this running all the time. You might want to be able to stand it up and tear it down, and Terraform allows you to do that. So again, everything here says known after

apply. So what's actually going to happen is, once it's done, we're going to output your management IP, your iSCSI IP, your replication IP, and then you're going to manage that array just like you did on premises. And Terraform manages the state, so it knows exactly where you are and how it deployed it. Again, very quick, it was deployed. I don't even know if we,

we had the time particularly. It's about 10 minutes. You know, we put you guys through that, but it does take a little time to deploy all the CBS resources. Yeah, and it's deployed as a managed application, so everything is in this self-contained bubble. Think about it as a physical array that sits

within your data center, and then within that there are the Azure VMs, EC2 VMs, all the network adapters, the NSGs, and all of that. And so again, like an array, you don't have to worry about managing anything. Demo two: we're going to talk a little bit about using SafeMode. What SafeMode is, essentially, is it does not allow you to eradicate

data, right? Because if a bad actor gets access to your array, your environment, the first thing they're going to do is delete all your snapshots, encrypt the one remaining drive, and hold it for ransom, right? That's the nature of ransomware. So really, what this does is a few things.

So it secures snapshots by blocking their eradication for up to 30 days. You can configure that if you want less. Um And then you can also do it on a per protection group basis as well. So maybe certain data you don't care about if it gets ransomware, maybe it's ephemeral data, maybe it's log data, maybe stuff you don't care about.

Um And anyway, if we move on to the next slide. So in this example, and I'll show a couple of slides in this demo as well. So, right, this is this is kind of a repeat of the of the previous slide, right? But it it also enables you to replicate data into the public cloud as well.

And the only way to unblock this is a support request to pure storage as well. So it's a very, it's kind of like almost having two keys to get into your safety deposit box at the bank, right? So pure support has one, you, the pure storage owner has the other one. And really there's just a couple of easy steps, right?

So you create a protection group on the CBS or FlashArray as you normally would and add your volumes to it. Then you enable and define your schedule: how often do I want to take snapshots? You can optionally have it replicate to a replication target as well. Then you edit your SafeMode config, you turn it to ratcheted,

click yes. Once that's instantiated, you cannot turn this off without a call to our support, so that's why it's important. But here's what that does not allow you to do. You cannot delete it, you cannot lower the amount, you cannot lower your frequency of replication, and you cannot eradicate any data, right?
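The restrictions just listed can be pictured with a small toy model in Python. This is not the Purity API or how SafeMode is implemented. The `ProtectionGroup` class, its method names, and `SafeModeError` are hypothetical, and "the amount" is assumed here to mean the retention period. The sketch only shows the ratchet idea: once it is enabled, changes that weaken protection are refused, and there is deliberately no method to turn it back off.

```python
from dataclasses import dataclass
from typing import Callable


class SafeModeError(Exception):
    """Raised when a ratcheted protection group refuses a weakening change."""


@dataclass
class ProtectionGroup:
    name: str
    snapshot_interval_hours: int
    retention_days: int
    ratcheted: bool = False

    def ratchet(self) -> None:
        # One-way switch in this model: no un-ratchet method exists,
        # mirroring the "call support to unlock" rule from the talk.
        self.ratcheted = True

    def destroy(self) -> None:
        if self.ratcheted:
            raise SafeModeError("cannot destroy a ratcheted protection group")

    def set_retention_days(self, days: int) -> None:
        # Assumption: raising retention stays allowed, lowering it does not.
        if self.ratcheted and days < self.retention_days:
            raise SafeModeError("cannot lower retention while ratcheted")
        self.retention_days = days

    def set_snapshot_interval_hours(self, hours: int) -> None:
        # A longer interval means less frequent snapshots, so it is blocked.
        if self.ratcheted and hours > self.snapshot_interval_hours:
            raise SafeModeError("cannot lower snapshot frequency while ratcheted")
        self.snapshot_interval_hours = hours

    def eradicate_snapshot(self, snapshot_name: str) -> None:
        if self.ratcheted:
            raise SafeModeError(f"cannot eradicate {snapshot_name!r} while ratcheted")


def is_blocked(action: Callable[[], None]) -> bool:
    """Return True if the action is refused by SafeMode in this model."""
    try:
        action()
    except SafeModeError:
        return True
    return False


if __name__ == "__main__":
    pg = ProtectionGroup("sql-safemode", snapshot_interval_hours=8, retention_days=7)
    pg.ratchet()
    print("destroy blocked:", is_blocked(pg.destroy))
    print("lower retention blocked:", is_blocked(lambda: pg.set_retention_days(1)))
    print("eradicate blocked:", is_blocked(lambda: pg.eradicate_snapshot("snap.1")))
```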

So should you get attacked by a ransomware bad actor, they're not going to be able to get in there and do these things to your protection group, which again is really important to keep your data secure. Now, if we look at a demo here, imagine that my on-site FlashArray has been compromised. I've replicated this data to my CBS instance. And now I'm going to copy this down from blob

storage to my CBS array. So that's what I'm doing here. Basically, I've got the data down on CBS. Now I'm going to copy that snapshot to two SQL volumes, right? So I've got SQL volume one and SQL volume two. There's been a disaster on premises and I'm spinning everything up on

the public cloud in Azure as fast as I can. So I take those two volumes; you can see they've been created here. Now I'm going to create a new protection group and apply SafeMode to it. So I'm going to add those two SQL volumes I've just instantaneously copied from a volume. I'll call this one SQL SafeMode.

Clicking into it, I'm going to go ahead, under the members, and add those two volumes that I've just created. Now I'm going to go ahead and set my snapshot schedule. We'll do it every eight hours, and then now I'm going to ratchet that.

So this is basically applying SafeMode to this protection group. So for all those things that I cannot do, I'll go ahead and show next that, oh, I actually cannot do these things that I normally can do without SafeMode. So now I'm going to take an on-demand snapshot. And now let's say at this point somebody who's a bad actor has gotten access to my CBS array

and now they want to destroy all this data that I just stood up. So here's my protection group, and I'm going to try to destroy it, and I can't, because SafeMode is applied to it. OK. Now the bad actor says, OK, well, how about I instead go into this. I'll try to delete the snapshot, I'll eradicate it, and I can't.

That option's grayed out. I'm going to try to lower my snapshot schedule. I'm going to try to turn it off. I can't. So think about that person on their last day of work. They're mad. They're an angry admin. They're going to go and delete everything.

It's not that. So it's not just about ransomware. It's about taking those immutable snapshots one step further and making sure that while the data can never be modified, it also can never be removed in any kind of way. So yeah, it's very powerful, and this just highlights that it's really the same

Purity. We're able to provide these features in the cloud and have this hybrid model that you just cannot get on. Yeah, cannot eradicate, right? So again, he was able to delete the snapshots, but he cannot eradicate them off of the array. Again, yeah, a super powerful tool in the fight against ransomware.

So when we think about this vision, we want to make sure that we're bringing that Evergreen model to the public cloud with that unified licensing model, and again, enabling Pure Storage hybrid cloud between your on-prem and your cloud. And again, with all the stuff we talked about, the benefits with replication and

usage are that if you have a FlashArray on prem, you get that in the cloud. But you don't have to have a FlashArray on prem to do this. If you have cloud-only stuff, or you're not an existing Pure customer today, you do not have to be. And that's one thing we just want to make sure that we're highlighting: it's available to anyone, anywhere.

Again, the QR code links to our platform site, where it has links to all these videos, demos, walkthroughs, KBs, deployment guides, contact information, Slack groups, you name it. We have it there. And I think the next slide is going to be Q and A. So, any questions that anybody has on anything we covered, or anything that you wanted

to know about cloud or cloud block store that we didn't cover. Um, you mentioned, uh, you know, some day you guys are very proud is, uh, the DFF here was built on the FF and force to use whatever this they have, how much of a challenge is it, it doesn't really matter. She's lower performance.

So I think it really depends. Right. If you think about DFMs, it's all about the on-premises infrastructure, and we're doing that because that's what enabled us to be successful. I had this conversation with a customer earlier, right? We don't use SSDs, right? We use raw NAND in the DFM and that's there in the

cloud, right? We made a very careful consideration: we wanted something that was going to be future proof, and we didn't want to do the legacy way of taking our arrays and putting them into the data center and refreshing them every three years with new arrays, right? That's legacy technology. We wanted to build something that was on demand and an Evergreen model that worked for the

customers. And if you think about this, we didn't really talk about performance. If we talk about on premises, we have FlashArray//XL for super performance, the X for latency-optimized, the C, and now the E. It's not just about performance anymore, it's about efficiency. So the E is all about ESG. Cloud Block

Store is providing all of those efficiencies in the cloud. So we didn't really need to think about DFMs and this raw NAND, and what is the way to get the lowest latency from the CPU to the memory on down. Our goal was to cater to the wide breadth of users. And then where we see things that make sense, we can build specific SKUs. Say

right now we're using the E-series instances; say there's one, the E128, that now can do 300,000 IOPS. There's going to be a premium to that price, but it's just like the XL versus the X: do you really need that performance? If you're using it for DR, you don't need 300,000 IOPS 24/7. You have the ability to scale it up. So that's where we

abstract. If you hear our pitch when we talk to customers, we don't really talk about the architecture until call two, three, or four, or unless the customer asks, because what's under the covers doesn't really matter. It's all the features and everything that we can deliver on top that provides that value. And yeah, I will maybe take a little different approach to that.

It was very difficult to engineer this because you're basically playing the cards that AWS and Azure deal to you from an infrastructure perspective. So weeding through all of those different options and figuring out, OK, what works and how are we going to essentially satisfy, you know, our on-premises FlashArray,

was definitely a pretty significant engineering undertaking. And the other thing I would mention, in terms of performance, is I think of it as low single-digit millisecond latency. So it's similar to a FlashArray//C in terms of performance. Cool. Thanks for the question. Any other questions?

I got another one, round two. So you're talking about, er, we can all agree that VMware is the predominant leader, and you know what they put on these VMFS, you can't really do much with that and be replicated. Any plans with AVS, or VMC on AWS, to work with it?

So today we get the engineer and the sales answer. Yeah. So today you can do in-guest with VMC and AVS and Cloud Block Store, but you're right, you do not. So you can basically map via in-guest from an AVS- or VMC-based VM to CBS. We have customers doing that today just to right-size

their AVS and VMC node size, because they're stuck in a scenario where they're potentially deploying excess AVS or VMC ESXi hosts to reach a certain storage footprint. So you can use in-guest today with CBS. We have customers doing that. Yeah, so we'll have a better answer to your question soon. Yeah. So the one way to think about that

is when you're using the hosted VMware solutions. VMware Cloud is VMware's cloud on AWS. So it runs what they call cloud train, which is a very specific cloud VMware operating system, based on specific versions and stuff. It's very hard to get things added, right? They don't install stuff, they don't configure

all of that. And so the partners that are out there are file vendors. On the other side, Azure, Alibaba, I think it's C CV, are using the separate version, where it's essentially normal VMware. And so for those, there's a little bit more flexibility to work with them and build

some partnerships, which, again, we might have news on at some point, on specifically what we're doing. But the key thing is, we understand that it solves a lot of the challenges. And the best thing to do is reach out to your account team, and we can really do more roadmap-y features, really talk to specific use cases, and model your particular environments.

There's exciting stuff coming, we'll say that much. The question up here. Yes. So uh for, for a or kind of uh in the art of yes. So the question was around multi-cloud: how does that work, right? So it's Purity on both sides. Our Evergreen//One licensing is a unified model. So if you buy 100 terabytes,

you consume 100 terabytes. We don't care if it's in AWS or Azure, if it's one array or five arrays. You can write up to 100 terabytes, and that's what's there. So it actually helps, because if you have data that sits in Azure, or let me just start with AWS, because AWS is the problem child for this right now: AWS is buying up so many companies, and a lot of customers started there.
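The unified licensing point from a moment ago, one pool of capacity no matter how many arrays or which cloud, comes down to simple arithmetic. The Python sketch below is illustrative only: the array names and per-array numbers are made up, and it does not reflect actual Evergreen//One metering or billing rules.

```python
from typing import Dict


def total_consumed_tb(usage_by_array: Dict[str, int]) -> int:
    """Sum consumption across every array, regardless of where it runs."""
    return sum(usage_by_array.values())


def within_reserve(usage_by_array: Dict[str, int], reserve_tb: int) -> bool:
    """True if combined usage fits inside the purchased capacity."""
    return total_consumed_tb(usage_by_array) <= reserve_tb


if __name__ == "__main__":
    # Hypothetical split of a 100 TB purchase across three arrays.
    usage = {
        "on-prem-flasharray": 40,
        "cbs-aws": 35,
        "cbs-azure": 25,
    }
    print(total_consumed_tb(usage), "TB used; within 100 TB reserve:",
          within_reserve(usage, 100))
```

Moving 35 TB from the AWS array to the Azure array changes the per-array numbers but not the total, which is the point being made about multi-cloud.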

But if you're in retail, health care, pharmacy, retail, I think I said retail, you may not want to be in AWS any longer, because you're giving money to your competitor. So what happens when you actually want to take that from AWS to Azure? Well, one way is you're replicating every single VM independently across the link with some third-party tool you're probably paying for, and having to refactor all of your

applications again. With Pure, right, it's a unified approach. It's an iSCSI connection. So you deploy an Azure VM, you replicate the data volumes, and connect it up. So whether you're going from on prem to the cloud, or you're going from AWS to Azure or Azure to AWS, we're unifying that approach and making it extremely simple.

Cloud and cloud storage is here to stay and will only continue to grow. This brings new challenges and new use cases to think about and consider. Learn how Everpure Cloud Block Store can help you get more from your cloud storage.
