Dismiss

Innovation

A platform built for AI

Unified, automated, and ready to turn data into intelligence.

Dismiss

June 16-18, Las Vegas

Pure//Accelerate® 2026

Discover how to unlock the true value of your data.

Dismiss

NVIDIA GTC San Jose 2026

Experience the Everpure difference at GTC

March 16-19 | Booth #935

San Jose McEnery Convention Center

47:00 Webinar

Catch up and Skill up to Become a Kubernetes Admin

You will learn Kubernetes basics, and how Portworx delivers the features needed to build modern apps while also providing an enterprise-grade data management layer with features like automated capacity management, disaster recovery, security, and so on.

This webinar first aired on June 14, 2023

Click to View Transcript

Uh, thank you, everyone. Good afternoon. Hope everybody had a good lunch. Uh, thank you for joining us for this session. Uh, what we are going to talk about is how you can scale up and become a community's admin. Right. So this is going to be that 101 track depends. I don't know. We'll do a quick poll and figure out where

people are with their community journey. But the motive of this presentation is just talk about the basics, what they are. We have a couple of slides at the end to talk about what works does, but majority of the presentation is just focused on basics. I didn't see anybody leave, so I'm sure everybody's in the right room. Perfect. Uh, so, uh, let me introduce myself.

My name is Pavin. I'm part of the technical marketing team here at Pure Storage. Working inside the cloud native business unit. Uh, focus. I've been personally using communities for the past four years. I think, uh, started without, like started when people were not running straight, full application or not comfortable

running straight full applications and seeing the evolution of how now we can, uh, have now we have so many constructs, like position volumes and position volume claims and operators and things like that that make that journey easy. Obviously, if you didn't get any of that, we'll cover all of those topics and all of those concepts in this presentation, uh, quickly looking at the agenda,

we'll talk about infrastructure. Revolution. Uh, how we ended up with communities, uh, how we ended up with containers. Talk about what are containers. What are some of the key terms that you should know when you are thinking about containers? Same. We do the same thing with communities Why you need communities in the first place. And then So what are some of the community

resources that you should know from a one on one perspective or from a community space perspective? So we'll see a lot of a lot of yaml files. Yaml is just yet another market language. You'll see a lot of those code snippets. Don't worry. Like I think if you deploy it once, you know how to do it over and over again. So but we have examples of how each of those

communities objects work. And what do they actually do? Uh, uh, in the in the in the almost the end of the presentation, we'll talk about some of the gaps. Like communities is the best thing since life said, but not really like there are still some gaps that we need to fill, and then we'll identify those gaps. Talk about the works portfolio.

But then that's it. Like we won't go into the port details, uh, join us for the next session at two o'clock. Uh, if you want to learn more about port works. So talking about the infrastructure revolution and how we actually got here, right? I'm sure everybody in this room has seen a form of this diagram. But I just wanted to, uh,

repeat it, right. Just to make sure that people have gone from bare metals to virtual machines to containers. And why do we Why have you done that? Talking about how we started. Um, this covered Maybe the last two decades. Uh, people were running applications on bare metal servers, Uh, in a 1 to 1 mapping.

You were when, Whenever you wanted to deploy more applications, you had to find new servers, maybe buy them, maybe find them in your data. centre and then install the operating system. Install the application on top. This resulted in a lot of, uh, low resource utilisation. Maybe your servers were maybe 20% utilised. Obviously not a great,

uh, parameter or a great statistic to report up the chain. Uh, which then led to the virtualization evolution. Uh, VM Ware came in. Everybody started running multiple of these applications as virtual machines on that physical servers. I'm sure everybody at PR accelerate has touched some VMware technology.

Uh, if not everybody here in the room will be a VMware admin. And then, eventually, I think over the past 5 to 7 years, people started using containers and containers became a thing. Containers became mainstream. This allowed users to even break down their virtual machine.

So you, instead of having a guest os inside each VM inside each virtual machine, object containers can be that lightweight package where you all just have your application code and all of its dependencies. If you need a specific version of python, you have that inside that container, and that makes it lightweight, and it also makes it portable. So there are benefits to containers that we

look at But this is what the evolution looks like, right? Traditional deployments, virtualized deployments to containerized deployments. Uh, before we move on to the next session section, can we do a raise of hands in terms of how many people already know what community is or have deployed one community cluster? OK, perfect. 40% of the room, I think, um uh,

we'll ask the question later, but let's talk about what are containers. So containers are these lightweight packages that allow you to bundle or package your application code with all of its dependencies, all of the libraries that you might need to run that application code. So if you're running, uh, you're writing an, uh, your application in Gola and you need a specific version of Go,

uh, you can package all of that up in a single container image or a single container. And then let's say if your developers are doing that today, they can build their that application on their laptops with that dependency, and then we can move that container from Dev to testing to staging to production. But as an admin, you don't have to worry about installing that specific version of Golan or

specific library that might need it to run that code. All of that is enclosed in that container and that can make it portable talking about the benefits. Uh, we already covered a lot about portability. Uh, containers are are lightweight and and And help with that, uh, talking about density, since you can stuff a lot of more containers on the same number of

physical hosts, uh, the containers can give you a higher density level even when compared to virtual machines. So with virtual machines, if you are able to hit that 60% 70% utilisation of your physical resources containers can help you increase that utilisation percentage as well. It can help you run more containers on a smaller footprint when it comes to servers. Containers also give you that added benefit.

Uh, that you can run them on bare metal notes. You can run them on virtual machines, or you can run them in the public cloud. So regardless of the infrastructure, since everything is packaged in that one need unit, you can move your containers wherever you want agility. Uh, if we will look at what a container image looks like in the next slide.

But if you look at a container image, you have, uh, the base OS or the like. If you need open two version 18.04 you will have that as the basic base container image. And then you just add the packages that you need. You are not installing each and every, uh, package that's available on a sent to us. Uh, open to our rail system. You're not deploying everything you are just

deploying the components that you need for your application to run are talking about security. Since you are only installing or only using the packages that you absolutely need you not. You don't have to worry about, uh, vulnerabilities being introduced in your application stack or your infrastructure stack just because you you don't know what else is running on the system. It makes it contain like it makes it

containerized, uh, for the for me to reuse the term and then manageability perspective, the experience that we spoke about from a development perspective, right? You have your application code on your laptop. Uh, you can use principles like continuous improvement, continuous delivery, C I CD pipelines. You can use git ups,

principles and take that application that's running on a developer's laptop to a system in production very easily. You can use automation capabilities. You don't have to upload, uh, 100 gigs or terabyte of files to a repository. And then when? If you want to deploy things in production, you are pulling everything down and deploying it, uh,

on your production stacks, there is an easy to manage component when it comes to containers. So if we talk about what a container image looks like so talking about some of those terms, right? Uh, this is on the right. You see the text box? That's how you define a container image. So you start with a base OS layer instead of open to being that 20 or 30.

Gig, uh, the root OS disc for your virtual machines. You can actually have, let's say less than a gig of, uh, operating system per container. And then once you start from a base image, you can just decide what package do you actually need for your applications? In this case, we need red already server, and then I need to expose it on a specific port.

And this is what a container definition. Looks like this is what a container image looks like. I don't need any other packages. I just need this to run my application. And I can pull down my, uh, application code, package this up as an image, and then I can pull this, uh, or I can push this to a container registry. So what is a container registry?

Container registry is a is a repository or a registry where you host container images. So let's say you are a developer, right? Once you are happy with local development environments, you want to just make it available for your operator to pick up and deploy it in production. At that point, what you do is you basically push your container image to a container registry, and then when you actually want to

deploy things in production, you pull that container image down and you run things in production or in staging or whatever other environment you want it to be. There are two types of registries or container registries for individuals that are just getting started for, uh, uh, for smaller teams that are just getting started, they might start using container registries that are public.

So doub dot IO uh, Amazon, Google Cloud. All of those have their public registries where you can just create an account, push container images and then pull them down whenever you want to. But then obviously that's not secure, right? You don't want your container images because it has your application code or links to your application code.

You don't want that available publicly. So eventually, as you go down this journey, you will start using something like a private registry. You can deploy private registries inside your own data centre. You can use something like a red hat, uh, to host it locally or if you wanted to. You can also use these,

uh, Amazon Container Registry or ECR or GK Container Registry in in a private mode, where your container images that you're pushing there and, uh, are not accessible by everybody on the Internet. So container registries help you to store your container images. That's about it when we start talking about containers, right?

Uh, I know I didn't go to the keynote, but I know so did, uh, And he pointed out to me that one of our customers spoke about how they are running 30,000 containers in production. Uh, and managing 30,000 containers individually is not an easy task. You need a container orchestration system to help you,

uh, not only deploy those containers, but also manage them on a day to day basis. This is where you need a container orchestration system. I know we are talking about and everybody is talking about, but I think back in 2018, let's say there were multiple different options that were available to customers or available to users for orchestrating their containers.

I'm sure you might have heard terms like Do or Miso and was an open source project from Google Cloud. All of these were different, uh, container orchestrations, options that were available to users. Communities being an open source project won that race, and now it has become the de facto standard, and the community has basically made that a

standard. So you see everybody all the have a flavour of or a distribution for communities. Amazon has Amazon EKS Red hat has red openshift, VMware, vm tan, and so on and so forth. Everybody has a managed distribution or a distribution that you can use to orchestrate your containers. So that's the need for combin. Right?

But what? What does it actually look like when you're deploying combin on bare metal nodes or on virtual machines or in the public cloud, you have a control plane component that is responsible for scheduling your containers. So you see components like API server, which is that end point and have different components inside and outside the cluster. Talk to combin.

You have a scheduler and a controller manager, which takes that request like please deploy these containers for me and then it has the knowledge like it looks at all the different available nodes, looks at which note has available resources and puts your containers on that note. So communities does that orchestration for you. There are There are gaps in this approach that we'll discuss later.

But it does that basic orchestration for you. Uh, CD is a key value data store that every community cluster will have, which is responsible for storing all the metadata, uh, involved with running your own communities. Cluster so and then you have your worker notes. Worker nodes are where your actual user applications run,

so each worker node can run many containers. Uh, whenever the Cube scheduler asks a worker note to run a specific container. It talks to the Cube lett. It deploys the pod. Don't worry. We'll talk about what a pod is, but for now, for this slide, just think about it as a a container. It deploys that container on your community's

worker node and then runs that application for you for services like VM Ware, Tanz or some uh, versions of red eye openshift. These are things that you deploy on your own, and that's why you have access to both the control plane and the worker notes in your cluster. But if you're using a managed service like an azure, a KS or azure community service or a Google cloud GK or even red hat on AWS the Rosa

Service, you don't have access to the control plane notes. They are being managed for you, so you don't. You don't have to worry about making sure that they are always up and running. They have the correct version installed. It's a managed experience for you. You only get access to your worker notes, and then once you talk to the API to deploy your applications,

it gets provision on your worker notes. So talking about a few capabilities, uh, provides you with service, discovery and road balancer. So let's say I'm deploying those 1000 containers on my cluster, right? How do they talk to each other? Uh, there are constructs inside communities like services that enable that communication

between different components without the user having to do any any additional steps. Uh, it it it provides load balancing functionality. So let's say, uh, a container inside the same cluster wants to talk to a different container inside that same cluster that can be done using a load balancer as well. Or if you wanted an application that's still running on V MS.

Uh, if you wanted that application to talk to a container that's running inside a coin cluster, you can. A coon can also help you with that. There is a construct that can help you with that, uh, storage orchestration again. Uh, I know everybody has gone through, uh, the deploying virtual machines on VMware and deploying virtual machines on Let's Say,

hyper V and VM Ware does it in V MD K format, and you have VH, DS and hyper V. And there are these different standards in different way. Virtual discs are created and deployed on your virtualized infrastructure. What does is it has a standardised approach of doing things.

It has a a standard called container storage interface, or C SI standard, which basically means that regardless of your storage provider, you always use the same constructs to deploy persistent volumes or volumes where containers can purchase data. So that's another standardisation that provides for you.

Cities also gives you the ability to perform automatic roll rollouts and roll back so it can help you with doing non disruptive rolling upgrades. And if you don't like the new version that's running, you can always roll it back in a non disruptive way as well. So can do all of that heavy lifting for you communities is that desired state system. Once you tell it to do something,

it will go and figure out how to do that. Think about it like a cruise control on your car or a thermostat like once you set it to a specific value, it will make the necessary changes required to make sure that the current state of your system matches the desired state that the admin has set for that specific application makes sense till this point. OK, uh, and then self healing,

right, The the user state, the, uh uh, current state to the desired state Mapping also helps humanities provide self healing capability. So let's say I define my con. Uh, my, uh I asked my comin to deploy three, pods for me or three containers for me. And if one of those goes down, Coben has the ability to run a reconciliation loop where it basically deploys that the third part that might have gone down and bring my current state

to match the desired state. So it does provide self heal capabilities. Now that we know, like what is it is from a very high level perspective. Let's talk about some of those compute storage, networking and security resources that you usually see through being thrown around. And that might seem jargon to you right now. But hopefully after this session, you you can talk in some of that same lingo.

So talking about compute resources, you have to start with a community pod. So I know we spoke a lot about containers, but when communities deploys your applications or it deploys your containers. It does that using the concept of a community spot. A pod is nothing but a collection of containers. A pod is the smallest addressable unit when it comes to community,

so you can have multiple containers running inside the same pod. And again, you don't want to end up in a situation where you have like a monolithic pod where you have, like 100 different containers. Running in the same pod pod just makes sense if you have containers that need to share the same network space or need to share the same storage space to be in the same pod. So that's how containers and pods are related

to each other. If you look at that yaml file, this is how you define any and all cobin resources. You always have an API version. You always have a kind of parameter. Where the value is is always the type of cobin object that you want to deploy you. You can give it a name. You can assign it labels,

but then, if you look at the specification section, you'll see I'm deploying a couple of different containers inside this pod. I can give give the container my name. I can point it to the image. So when I'm actually defining or deploying this yaml file against my communities cluster, it knows it has to go and pull down the B box dot colon 1.28 image from that container registry and run it locally on my communities.

Work or not. So that's how container registries and communities work together. In it, containers are things that even before my application container has started in it, containers are used to do certain things before the application comes online. So if I want to set something up, I think about it like a cloud in it,

like before your application actually starts, Uh, you want to run some processor or you want to run some tasks. There are also a couple of liveness probes and readiness probes. What does this mean? Liveness probe is it? Communities will continuously check the condition of your running container. If it's able to meet the live liveness probe

requirement, it will continue sending traffic to that container to that po. If the liveness probe fails, it knows that there's something wrong with that pod. It will stop sending traffic to it. It will spin up a new one and then start sending traffic to that new port. We talk about readiness probe. This is when you are deploying that new

container, right? Or deploying that new pod. You want that part to be fully functional before you start routing user traffic to it so you can define those conditions. You can define those pre checks using liveness and readiness probes. Next, if you talk about replica sets, uh, so if you are deploying pods,

you're just deploying pods one at a time. But again, that's not how we deploy pods, right? If we deploy pods in groups, I want three replicas. I want three parts for my specific application. You define that using a replica set object. So replica set allows you to set the desired state configuration for the number of application parts you actually need.

So in this case, I'm using a replica factor of three a replica of three, which means when I apply this replica set object, it will deploy three pods, and if one of those or two of those parts fail at some point, it will add two more parts so that my current state matches my desired state. Uh, so a replica set is basically used to, uh, make sure that I have the correct number of

parts running on my communities cluster. But what about different versions, right? If I have a new version for my application, how do I apply that against my cluster? This is where deployments can help you. So a deployment object is responsible for not just the number of pods, but it's also responsible for the version of the pods.

So let's say I'm running engine and I deployed originally the 1.14 0.1 version with three replicas. One Do I want to upgrade it to 1.14 0.2? I just, uh, update my deployment object pointed to the new container image. And it basically creates a new replica set object, creates three parts and then moves all of my traffic automatically So it doesn't do this, Uh,

disruptively. What it does is it spins up a new part with the new version, takes down one part from the older version, adds the second part, takes on the second part from the old version, and that's how it does it. Non disruptively between different versions for your applications. And then finally, we have state full set objects, right?

So when we are talking about deploying state full applications on containers, you have things like databases that you might be deploying on comin. And you don't want your secondary notes to come online before your primary node. That's not something you want. Uh, so that's where you use something like a state full set object where you're deploying

your primary node or your master node first, once it comes online is when we proceed to deploy the other pods in your cluster. So let's say I, I always want my, uh, my zero part to come online first, then my sequel one and my Sequel two. As my read replicas state, you deploy that using a state full set object, and you will get that order.

If you want to scale up, you, uh, let's say from a three note to a five note cluster. You can rest assured that the naming for that will be the same as well. So my Sequel two and my Sequel three or N four. If you are scaling your application down, it will remove the N minus one part so it will remove the MySQL four, then my sequel three, and then scale down your cluster as well.

So your master is always going to be around talking about the next object. Demon sets so demon sets are useful when you want to run at least one pod on each node in your communities cluster. So this is helpful when you want to run something like a logging solution or a monitoring solution, you want to collect metrics from each worker node in your cluster.

You define it as a demon set object, and whenever comin deploys these demon sets, it deploys one copy of the pod on each worker. Note. If you add more worker notes to your communities, cluster cities will make sure that it deploys more parts to meet the on those new nodes as well. If you remove nodes again, it will take down those parts.

So, demon said, is just making sure that you're running one demon or one pod on each worker node in your cluster and then jobs and ground jobs. Communities does have a way to run a single instance jobs, or you can run it on schedules. This is similar to what we have in Linux today. So communities also has those constructs where a job can represent the configuration of a

single, uh, job or a single command. And then if you want to do it over a period of time, you can specify a ground job object inside communities. So with that, we are done with computer resources. Hopefully, now when people talk to you about pods or deployments, you know what we are talking about.

Uh, next let's move on to the storage section, right? Uh, from a storage perspective, there are three things that you should know. Uh, first is the persistent volume object so persistent volume is just that piece of storage or that actual volume where containers can store data. Why do you need a persistent volume in the first place?

Because pods, as we discussed earlier, are ephemeral in nature. They can be deployed, they can be re spawned, they can be redeployed on a different node. And whenever it gets redeployed, it's not the same pod. It's a different instance of your container. So how do you save data through of these recycles and reboot operations are This is where a persistent volume can help you

persistent volume maps to a port. It helps your part persist data or store data. If the port goes away, a new new part comes online. It connects to the same persistent volume and has access to all of the data that the previous part had written. So, uh, defining a persistent volume, uh, is easy. It's just a PV object you can define,

uh, the name, the name space that the the the persistent volume should be in. And then to consume that inside a pod, you just add it to the volume mount section. There are two types of persistent volumes. Uh, nobody does the static way anyway. But static way was when, Let's say, if I wanted to deploy a persistent volume, I created a con or configured a lo on

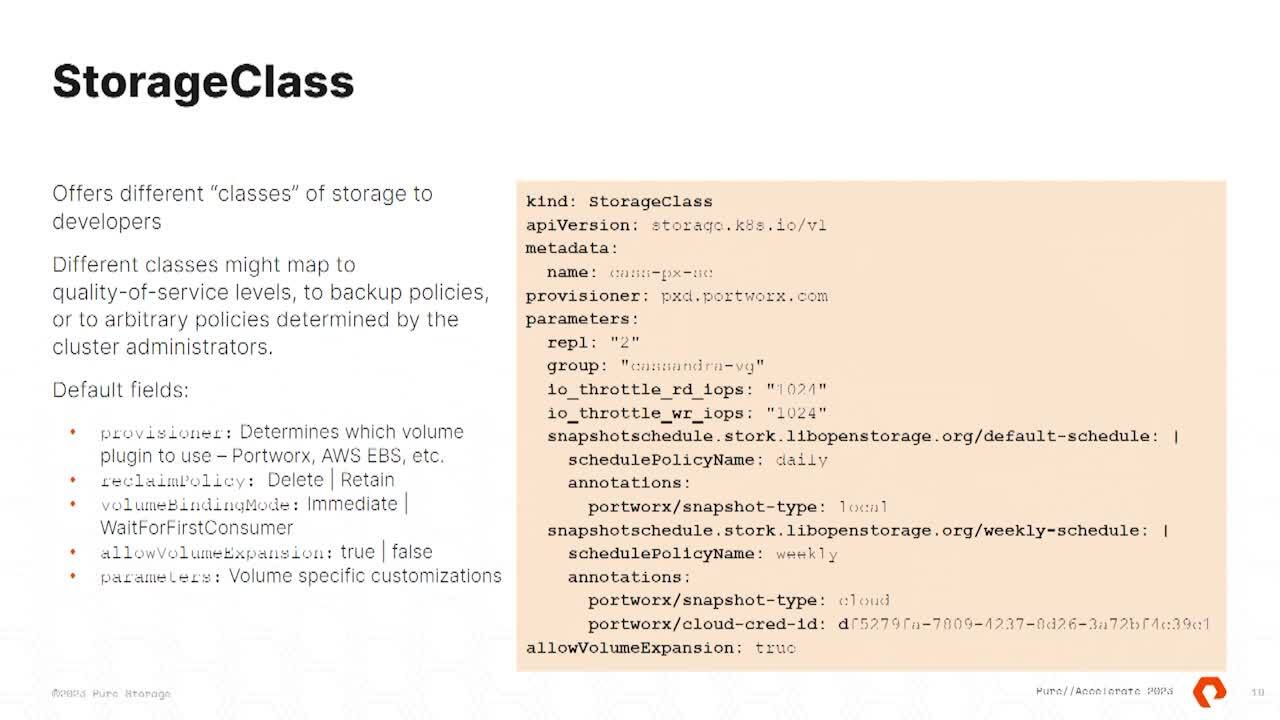

my back and storage array and then mounted I. I want my application port to use that Lon. That's how I statically create a PV object and mount it to my back and storage array. But that is slow. That leads to more ticket creation. And if we are talking about scale, it won't really help you. It will slow you down instead of adding agility. So a dynamic way of doing things is when you

have a storage class object where when your application is asking for a persistent volume or a place to store data, it asks a specific storage class object, and the storage class automatically talks to the underlying storage system and deploys that persistent object for you. So as a user, all you have to do is deploy a storage class or configure a storage, storage class object and the all all the storage

orchestration is done for you. So if your developers are asking for, uh inside their pod specification or pod files storage class, can automa automate the storage provisioning and mount that inside your pod So again, removing some of that heavy lifting from an administrative perspective, the way port works helps you with this, uh, helps you is,

uh, port works can allow you to create multiple of these storage classes. So these are different classes of service that are available for your developers, and you can fully customise how storage is being provisioned for you. So let's say you wanted a storage class with a replication factor of two again we talk, we can talk about how port works does replication in a later section.

But if you wanted multiple replicas for your persistent volume inside your, uh, storage cluster, you can do that. You can define affinity. Anti affinity rule ox also allows you to set maximum values. Like if a persistent volume, you don't want it to consume more than 100 and 24 up from a read and write perspective. That's really low.

But if you want to, you can configure that inside a storage class object. If you want to specify a snapshot schedule so you don't have to go back and, uh, uh, create snapshots for 1000 persistent volumes that you might be running. Having that policy inside your storage class will help you automate all of those things. So any persistent volume that gets dynamically provision automatically inherits these policies

and has that snapshot schedule, and then finally, the way an application or a report requests for storage is by using the persistent volume claim object. This is where you are. You pointed to a specific storage class, so I want to use the Port Works C storage class. I want write once or a block volume for my application. And I want two gigs of storage.

Once you define all of this, our communities will talk to the storage class that you have linked here. Ask it to provision that volume dynamically for you and mount it to your pod. So that's how the storage orchestration inside communities works. So next let's talk about network, uh, any questions till this point before we move to

network? Respect it Storage. OK, so yeah. So the question was, how do we find out what different parameters are supported in the storage class parameter? Uh, if you're talking about port works, you can go to our documentation site docs dot port

works dot com, and it will be listed there. The parameter section is completely customizable by the storage vendor. So if you're using a different storage array or a different like if you're using AWS EBS in the cloud, you can go to the documentation site and they they will have the few things that you can customise. Since port is completely cloud native or

software defined, we allow a lot of customization. So we have a big list. But in other cases you might be restricted to, like the type and the amount of storage that you, uh, that you want for your applications. Ok, cool. Thank you.

Talking about network resources pods and storage is great, but your pods or your containers should be able to talk to each other. This is where you have service objects and a service object. Basically, is that layer of abstraction is that load balancer layer? Where if, let's say part A wants to talk to part B or,

uh, component A wants to talk to component B. They talk to that service object in between. So, uh, because as we all know, pods can come and go so they whenever they are redeployed, they have a new IP address or a new endpoint. And obviously you can't keep going back and updating that end point inside your original or your source spot. Having something like a service object inside

communities will help you to route traffic traffic to a consistent endpoint inside communities, and then your back end parts can keep coming. Keep going if you add if you started with one part. If you added five, the service object will will make sure that it routes traffic on a round or basis to all the different parts that sit behind that abstraction layer.

Uh, there are different types of service objects as well. You can define the service type as cluster IP. This means that only, uh, uh, the only containers or ports that are running inside the same communities cluster can access that container or pod. Uh, if you are talking about node port, this is where we assign a communities.

Assign assigns a random port from the 30,000 to 32 7 67 range. And you can basically access this service from by using the node IP. So whatever the communities work on node IP S call in that port number and you can access that application. And then finally, we have the load balancer type. This is important when you are when you want to

access applications from outside your communities cluster as well. Let's say you are running in the cloud. If you deploy a load balancer service type, it will create an AWS ELB instance uh, elastic load balancer instance and allow you to connect to that application that's running on inside your EK. If you're running on Prem. Uh, you need to configure something like NSXT

or metal LB as the open source project to to use this load balancer functionality. But if you wanted to, uh, for any traffic to come our exports over HTP and HDPS and you wanted for communities to handle, uh, SSL domination TLS, uh, name based virtual routing. You use a second object called ingress. So if you look at the diagram right here, right, you can still have your service object.

But you can define rules and terminate your SSL connections inside that, uh, ingress object. And that again, that's how you define it. Every yaml file looks kind of the same. It, as you can see, it has a kind parameter, a kind of key value pair a metadata section, a spec section. And once it's running, it will also have a

status section a section. When you look at it, a network policy again, these are firewall rules, right? How do you stop part A from talking to part B. Uh, how do you stop any other part from talking to part A. You can define all of these things inside a network policy object again Everything is a yaml file you can define.

Ok, Uh what? IP S shouldn't have access to this part. What port or what? Port numbers you can you want? Restrict this access by default. Everything is allowed inbound and outbound. Uh, so you do make sure, like if you're running things in production, you have to make sure that you use a version of this and enforce network policies because you

want to, uh, restrict traffic getting to your applications. But that's how network policies can help you talking about security resources. We have a few, uh, that we want to talk about. So the first couple are roles and cluster roles. So roles and cluster roles allow you to map a set of or create a set of permissions for resources that are inside your communities

cluster. The only difference between a role and a cluster role is role is specific to a specific name. Space inside your communities cluster. And I know we didn't talk about name spaces, but if you're running a multi tenant communities cluster, you have different name spaces which are just resource groups or

resource pools inside your communities cluster, so you can have 10 different applications running on the same communities cluster just in their individual name spaces, and you can use the concept of rules to have permissions associated for objects inside that name space. So roller will help you with that cluster role gives you the same level of flexibility, but at a cluster level,

not a specific name space level. But how do you map it to an actual user or actual group? That's where a binding comes into the picture. So if you want to use a specific role and assign it to a specific user or assign it to a specific group, you create a role binding object where you are mapping those two things together.

If you want to do this at the cluster level, you can create a cluster role binding and point it to a cluster role, and then assign those privileges to a user or a group. If you are if you just. If you're just creating a role binding, but you point it to a cluster role, it will still work. But it will still create that role binding for that specific name space.

So that's how roles and cluster roles and role bindings and cluster role bindings work inside communities. And then finally, we have service accounts, service accounts again. I'm sure many people know what our service account usually is. Inside is kind of means the same thing where it's not a human, uh, like it's not a user or a group.

It's, uh, if you are deploying, say, let's say, third party applications that need access to your community resources. You can create a service object, a service account, object with specific set of permissions and use that to talk to different either the community's API server or specific resources that you have running inside your community cluster. So finally, a diagram instead of just I

commands. Uh, this is what an application typically looks like when it's running inside a community cluster, right? So you have your entire community cluster, which is the outermost box, and then you have individual name spaces. So a name space can have all the different communities objects inside a specific, uh, for for a specific application.

So let's say in this case I'm deploying a state full set object, which is my compute resource, and I'm specifying a replica factor of three so this is, uh Let's see. OK, these are the three parts that basically belong to that state. Full set object. And it always makes sure that there are three parts.

Uh, you you need a service endpoint. If anybody else wanted to talk to these set of pods, you have a service endpoint in front of them. And then these pods wanted to store information into PV CS. So they ask for a PV C object which eventually gets them a persistent volume using a specific storage class. So this is how everything that we just spoke

about fits together. It makes sense. OK, awesome. So that is how you deploy it manually. But then the next thing to make it easier, there are these things called communities. Operators and operators are nothing but software SREs or codified SREs that know how to deploy a specific application inside

communities similar to as part of your data. You might know how to install a specific app how to upgrade that specific app. This is just doing that from a community operator Perspective Communities operator knows how to deploy a specific application. So instead of me deploying or configuring individual L files for state full sets for services For all the jobs that I might need.

Operator knows all of that in advance. You just give it like, OK, I need a post stress cluster. The operator knows how to deploy all the state full set objects or the persistent volume objects and configure all of that inside a specific name. Space inside my communities cluster. So operators are a really helpful concept.

So if you are trying around or or if you are looking at combin, make sure that you start with operators to make it a bit easier and understand how they work. Next, let's talk about the C SI standard and, uh, talk about why the C SI standard was needed in the first place. Uh, so when communities started as an open source project, right, there were only a certain a small subset of storage vendors that

had the capability to provide persistent volumes to communities. Port works being one of them. But all of that code, all all of that vendor code lived inside the open Source Communities code repository, which obviously made it not flexible, which made it really slow to add new features from a storage perspective and made the code base really heavy.

Not the best way to do things right. So the community came together and developed a specification called the C SI Specification, or the container storage interface specification, where they created a standard and asked all the storage vendors to create plugins that work with that standard. So you can. Any new storage vendor can come up in the ecosystem and have their storage interoperate

with communities by just complying with the C SI spec or with the C SI standard. This gives the the community community the ability to not have to worry about bugs introduced in the storage vendors Code storage vendors can add more features on their own communities, can keep adding features or keep improving on their own. Creates a disconnected architecture but with a standard in place.

And the same thing happened for CN I or from a networking perspective as well. So for you to use different uh, for for you to be able to have your pos talk to each other or for your different communities, resources talk to each other. You still need that, um, networking capability inside your community cluster. You can't just use your Cisco switches right inside your community cluster.

That's why the community came together and built AC N I standard or a container network interface standard where there is a standard way of doing things. We looked at service objects and different types of services of inside communities. And this is how, uh, communities resources talk to each other. So there is AC N I standard as well.

So any questions before we look at gaps, I know we have 10 minutes remaining. We can do questions at the end as well. But those are all the one on one things that we wanted to talk about. I have two more slides or three more slides. First, let's talk about some of the gaps in communities, so communities definitely solves many challenges. But there are still some things that it doesn't

do well. And I just wanted to put together one slide. Not a pretty slide, uh, to talk about those right. Uh, first being the lack of consistency, even though communities is that, uh uh, uh standardised or consistent orchestration that you can run on bare metal and virtual machines and in the cloud because of the uh,

the plug in architecture, like the C SI architecture and the CN I architecture. You can have different, uh, differences or inconsistencies when it comes to storage. So you might have a different set of features that you can get from pure flash array compared to what you might get with a W CBS and azure discs in Microsoft Azure and it it it. The responsibility for accounting for that or or handling those inconsistencies falls on your

application team, which is obviously not something you want. So there is that inconsistency when it comes to communities or running state. Full applications in production, high availability communities will always deploy pods, uh, over and over again inside your communities, such as it does match your current state with your desired state. But if you are running state full applications,

it just handles the pod. Uh, it depends on the storage vendor to reattach the persistent volume so that it has access to data. And if you're doing this across availability zones, if you are doing this across racks, it might take you 10 minutes 15 minutes for that three month operation to happen, which might end up creating downtime for you. So you need a solution for that?

Answers high availability question encryption. We, uh, like, if you do a quick Google search on encryption and communities, all you will find is how communities helps you encrypt your secrets, which is where you store user credentials. Uh, but it doesn't help you encrypt any of those data that you are storing in your persistent volume.

So that's the thing that you have to rely on your storage vendor to make sure, And because of that inconsistency, you have to make sure that you choose a solution that gives you that consistent experience. So you're not adding encryption capabilities inside your application over and over and over again. Inefficient resource allocations. So when we look at the port spec right,

there is a section that we didn't include in that yaml file where you can specify resource requests and limits, so the minimum and maximum for your CPU and memory and storage resources. Uh, CP and memory research doesn't do storage, but it's not required by default. It's an optional component that you can add, So if you leave your developers to just create those yaml files and deploy it against your community clusters.

You might have applications that are consuming many more resources than they actually need, or many more resources than you want them to use, so it doesn't like really help. You set those limits in place, and it doesn't have any capability to set those at the storage level. That's why when we looked at the storage class section we look at, we looked at how port work has that parameter.

We allow you to enforce those limits, even from a storage perspective. Talking about anti affinity. Uh, when we spoke about how the Cube scheduler works, it just looks for available resources on your worker nodes, and it deploys those parts there. Which doesn't mean that it enforces any any sort of anti affinity rules. You might have a three node application that's

running on a single community worker node, and if that node goes down, your application goes down, so it doesn't matter if communities will spin that up. There is always downtime. You need a a solution that can help you enforce these anti affinity rules so that if you have three replicas of your application, they're spread across three different,

uh, worker nodes in your cluster. Backups doesn't do backups. It does snapshots, and I know we are a pure accelerate, and we all know snapshots and our backups. So you need a solution that can help you protect your application from an end to end vis. It's not enough to just protect your persistent volumes and have snapshots.

For those you need a solution that can protect your pods. Your deployment objects, your secrets, your config. Maps everything inside your community's name, space and back it up to an S3 repository. Disaster recovery doesn't do disaster recovery at all if the primary cluster goes down the recommendation right now, if you do a quick Google searches,

Oh, deploy your yaml files again on on the new cluster and you are. You should be up and running, but they fail to take into consideration that there is actually application data that's running on my primary side. You can't just deploy your application again and hope your application data shows up. You want a disaster recovery solution where you have the primary secondary,

you have something like a VM Ware metro storage cluster or an SRM system that helps you bring your applications back online, and then finally, migrations, right? Humanities is consistent. You can run it anywhere. But you can't move your applications between these different clusters. So you're kind of tied into that system. Unless you figure out a way to,

like, take all of that information, take all of that data and put it on a different communities cluster. So some of these challenges again is gonna help you with. Yes, all of these, Uh uh uh, if you attend, like, the next couple of sessions, but yeah, uh, just some things to keep in mind as you're going through, Uh, the the the learning journey around communities.

We have five more minutes. So what is port works, right? I? I did have to do a pitch, right? Uh, the support works is that number one communities data platform that provides you with storage and data management capabilities, disaster recovery and backup and recovery capabilities or data protection capabilities as

well. It gives you database the ability to run databases on communities using a single platform, uh, which is a hosted control train service from Port Works. So we can run on top of your communities cluster itself. It is completely cloud native completely software defined.

We run our own software as application ports on your communities cluster so you can run port works anywhere you can run your community cluster. So on Prem on bare metal nodes. Virtual machines backed by a VMFS data store in the cloud backed by, say, AWS CBS Port works can run anywhere and give you the same set of or consistent capabilities when it comes to that storage layer.

Uh, this allows us to run on Prem Public Cloud or on the Edge and then allow you to run any kind of application state full application on top of your community clusters. Uh, the the portfolio is kind of broken down into three different products. Uh, port enterprise being the primary one being that storage and data management layer, providing you with capabilities like high availability and replication encryption.

Address those application I control where you can enforce those limits. Uh, snapshot capabilities. More and more capabilities in product enterprise. But it is that high performance and highly available storage layer that can run anywhere you want, where you can run communities.

We then have port works, backup and port work. Disaster recovery Port Works Backup is a community native data protection tool. So it it was built for communities. So it wasn't a legacy tool that has kind of been modernised to protect your applications. Running on communities Port Works backup actually allows you to map to your communities cluster, talk to your API server and protect your entire name space.

All the different communities objects along with your persistent volumes and store it in an S3 repository or an S3 bucket. This can be AWS S3 Google Cloud Object Storage bucket or your on Prem or flash systems and then from a Port Works disaster recovery perspective. Uh, Port Works is kind of the only vendor that does through communities disaster recovery across different distributions.

So if you wanted to create a synchronous DR solution between two communities clusters your primary and secondary, you can use port works to have a stretched storage cluster across two different communities. Clusters and create migrations. Schedules are recovery plans that can help you have a plan in place in case your primary cluster goes down. You can recover all your different application

components on your secondary cluster almost instantaneously, so it gives you that zero RP or zero data loss solution where you don't lose any data and you can bring your applications back online in a matter of less than a couple of minutes. Uh, if you wanted to do a cross region architecture or even a cross cloud architecture, Port works can help you with that as well. You can have an Amazon EKS cluster and a Google

Cloud GKE cluster, and you can create an ayc DR solution where, if anything goes wrong with AWS, you can recover your application from GKE. The only thing to keep in mind in this case is there will be an RP of 15 minutes, since it's asynchronous So with disaster recovery, Port Works has those couple of options and then finally talking about Port Works Data Services.

This is that a database platform is a service offering from Port Works. It's a hosted control plan where we have a curated catalogue of all the different of 12 different databases or data services that we support, including things like Modi, B, Enterprise, Microsoft Sequel, Server, Post, Cassandra, Elastic Search and so on and so forth. I don't want to name all 12 I.

I don't think I can even remember 12 at this point. Uh, but you can deploy these different databases from a U I or a set of API S and deploy it on any communities cluster. Uh, where you want to run your databases? Uh, we we we provide you, uh, again, level one supporter. I don't want to go into details, but yeah, data services is that hosted control service that

can help you run databases on communities with that? I. I do have a demo for you. Um, I know we're running out of time. If you guys want to stay back for a couple of minutes, we'll look at how you can run. Cassandra on is using enterprise. If I can just find find my mouse and if it works?

So here. What we have is we're using the open source. Casandra operator. Uh, you can download it from operator hub dot IO, or you can look at its details, and, uh, instead of relying on the operator to do things like high availability, do things like encryption do things? It's like volume placement and anti affinity Port works Enterprise is providing you with

those capabilities. So once you have deployed the operator to deploy your Cassandra instance. We look at the port work storage class, so in this case, what we have done is created a storage class object with the provision that's PXC dot dot com replication factor of two. So in that storage layer or software layer that we have, we we we will deploy your persistent volume and have a replica available for you.

Uh, you can also specify a group, a volume group which enforces those anti affinity rules. Set those I throttle rules for read and write IOP. Or you can also do that for storage bandwidth. And then we have snapshot policies. Uh, you can have a local snapshot and a cloud snapshot, and you can store that in in any S3 compatible bucket.

So all of that is configured inside your storage class definition itself. So now that we look at the actual Cassandra Cluster Yaml file again, uh, it helps you define things like how many CPU and memory resources do I need? How many nodes do I need? What should I name my Cassandra Data Centre? How many racks do I want inside my Cassandra ring?

What's the version of CASS. I want to deploy, and then at the end, I just ask it to use the storage class that we have already configured for it. So point it to cash dash PX dash C and ask it to create a 10 gig persistent volume for each Cassandra node in my cluster. So once you apply this yaml file, port Works will, uh,

like the operator will deploy all the state full set objects. It will deploy those spots, but port work automates the storage provisioning and gives you persistent volumes at the end. So let's go ahead and apply that yaml file again. QBE CTL or Cube cuttle is something that is a cl I utility.

To apply these yaml files against a community cluster, I should add that to my notes or or my slides as well. But once you deploy your yaml file, it will create those three persistent volumes. It will start. Since we are using the operator, it will start deploying, uh, all the different pods and the state, full set objects and the service objects that are needed

to run Cassandra. Once everything is up and running, you can start using this Cassandra cluster. So this is how easy it is to deploy Cassandra on communities with port works for that storage layer and not have to worry about things like high availability, encryption, FND rules, things like that. So, yeah, that was a quick demo.

At this point, we can just open it up for any questions that people might have. Or, uh, And if you don't have any Thank you so much for attending, uh, this session. Uh, we do have a couple more sessions throughout the day. Uh, that go into a bit more detail into what Port works does. We wanted to keep this session as a community

one on one session. Uh, feel free to ask any questions. If not, Thank you so much for your time today.

Organizations running Kubernetes at scale in production has led to the expansion of responsibilities for virtualization admins. In this session, you will learn how to become a Kubernetes admin that builds and delivers platforms for developers. You will learn Kubernetes basics, and how Portworx delivers the features needed to build modern apps while also providing an enterprise-grade data management layer with features like automated capacity management, disaster recovery, security, and so on.

Free Trial of Portworx

No hardware, no setup, no cost—no problem. Try the leading Kubernetes Storage and Data Protection platform according to GigaOm Research.

We Also Recommend...

Personalize for Me