38:35 Webinar

Panel Discussion: How to Make AI Succeed in Your Organization

Hear from experts on how to build scalable AI platforms with Everpure & Portworx®—balancing data, infrastructure, and performance.

This webinar first aired on June 18, 2025

Well, thank you. First of all, thank you for coming and joining us at Accelerate. You know, this is our annual user group, and we're not anything without you guys. So thank you so much to our partners, our customers, the analysts, and everybody else who's here and joins us for this event. This is all about you, and we wanna make sure

that, you know, we're fulfilling what you need and that there's a mission of why you're here. We wanna make sure we're meeting that. So today, we're talking about how to make AI succeed in your organization, and I wanna let you know just kind of a program note that we will have a question and answer at the end. So feel free, jot it down, lock it in,

text it to your mom, whatever you wanna do, but hold on to those questions and we'll be able to answer those at the end with our panelists. So, I guess, to get started: these guys probably don't need introductions, you probably know them. They're very popular, but we're gonna talk to Tony, and I'm gonna let Tony introduce

himself. I'm Tony Paikeday with Nvidia. I lead the AI systems product marketing group, and, just gotta say, you know, we've been at this together a long time, and I always love partnering with Pure and opportunities to do this kind of thing, so I'm indebted to Pure for putting this session together.

Thanks. And you may know Tony from such things as GTC and other events where he speaks regularly, and we were lucky to pull him in to have him here for this and to be able to talk to you folks. And then we've also got Victor. Victor Alberto Olmedo. I'm a principal analytics and AI FSA.

I get the privilege of not only working with our great partners from Nvidia but also with some of our largest customers and trying to figure out uh the best way to implement their AI strategies and to make them become real in a really scalable way. So I'm really excited to be here. Uh, I'm a little nervous. I don't know if you could tell.

I also, uh, uh, sell Dos Equis, uh. So everybody stay thirsty. I have to say that I get a nickel for every time I say that. The most interesting man in the world, and he can come talk to you in your shop. So just remember, we're all people that want to come and work with you and help your teams

achieve what you're looking for with AI. So don't think that any of us are, oh, Victor was up there on stage. He's not gonna come to my shop. We're absolutely gonna come to your shop. We love that. We love interacting with customers. We love talking about technology and where it's going.

And more importantly, we love talking about AI. So, my background: I'm Nathan Wood. I lead our cloud native architects for North America and US Federal. So we really focus on the Portworx product line and what we do in that space, and how we bring a software-defined storage layer into Kubernetes to be able to support

AI workloads. And I'm sure that all of you have thought about Kubernetes if you're thinking about AI so we can talk more about that. But I'm gonna, I'm gonna turn this over to Tony and, and have him run through, kind of give us the, the Nvidia view. Yeah, thank you. Do you mind if I stand?

Oh, absolutely. OK, I'll sit down. I'm gonna watch you. Look at that. So first of all, thank you for joining us late in the day. You know, it's been an exciting but obviously busy week. Um, you know, an interesting thing happened at the beginning of the year.

You guys all, hopefully, heard about the whole DeepSeek moment, right? And that really kind of lifted the veil, helping the world realize that a highly efficient, high-performance model could deliver such deep levels of reasoning and long thinking,

but much more efficiently developed than anything before it. But what that's done now is democratize AI technology, especially from an inference perspective, for almost any of the organizations in this room or people that you know who want to tap into its power, right? But an important revelation also came with the DeepSeek moment, if,

if you'll bear with me. So, traditional inference, if you think about it: you've got a trained, tuned, customized model ready to deploy into production, you deploy it in production, and essentially it might look like this, right? You feed it tokens in terms of your prompt and you get answers out, right, in the form of output tokens,

fairly simple one-shot inference. The reality is, in the age of long thinking and deep reasoning, a la DeepSeek or any of its peers or competitors, the world actually looks like this. You feed it a prompt, and then the model goes off down multiple decision paths,

multiple possible options of an answer to the question you just posed. And it thinks through those options and churns through those repeatedly, eliminates the bad ones, chooses the good ones, further eliminates bad ones. Finally, it lands on a candidate answer that is ideally suited to the question you posed, and eventually spits out an answer, right?
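As an editorial aside, the contrast between one-shot inference and the branch-and-eliminate process described above can be sketched as simple token accounting. This is a toy model only, not how DeepSeek or any other model actually works: the names `TokenUsage`, `one_shot`, and `reasoning`, and every number used (candidate paths, tokens per path, elimination rounds, keep fraction), are illustrative assumptions and do not come from the panel.

```python
from dataclasses import dataclass


@dataclass
class TokenUsage:
    """Token accounting for a single request (hypothetical, for illustration)."""
    prompt_tokens: int
    hidden_reasoning_tokens: int   # generated, but never shown to the user
    visible_output_tokens: int     # the answer the user actually sees

    @property
    def total_generated(self) -> int:
        return self.hidden_reasoning_tokens + self.visible_output_tokens


def one_shot(prompt_tokens: int, answer_tokens: int) -> TokenUsage:
    """Traditional inference: prompt in, answer out, no hidden work."""
    return TokenUsage(prompt_tokens, 0, answer_tokens)


def reasoning(prompt_tokens: int, answer_tokens: int, candidate_paths: int,
              tokens_per_path: int, rounds: int,
              keep_fraction: float = 0.5) -> TokenUsage:
    """Toy reasoning loop: explore candidate paths, drop the weak ones, repeat."""
    hidden = 0
    alive = candidate_paths
    for _ in range(rounds):
        hidden += alive * tokens_per_path          # every surviving path costs tokens
        alive = max(1, int(alive * keep_fraction)) # eliminate the bad candidates
    return TokenUsage(prompt_tokens, hidden, answer_tokens)


if __name__ == "__main__":
    simple = one_shot(prompt_tokens=50, answer_tokens=300)
    deep = reasoning(prompt_tokens=50, answer_tokens=300,
                     candidate_paths=8, tokens_per_path=500, rounds=4)
    print("one-shot generated tokens:", simple.total_generated)
    print("reasoning generated tokens:", deep.total_generated)
    print("multiplier:", deep.total_generated / simple.total_generated)
```

With these made-up inputs the reasoning request generates 26 times as many tokens as the one-shot request for the same visible answer; real multipliers depend entirely on the model and the prompt.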

Maybe it's an essay, maybe it's a travel itinerary, maybe it's any problem that you gave it, but essentially, along the way, hundreds or thousands or even millions of tokens were expended that you, the user, never see. And what this reveals to all of us is that in the age of deep reasoning and long-thinking models,

the actual consumption of infrastructure on the deployment side of the AI experience is 100 times or more greater than anybody realized. And what this means is that in the era of DeepSeek and the like, you need to be purposeful in terms of the kind of infrastructure, the compute, and the data platform that enables all of this, right? You need to be making wise architectural

choices that bring together that delicate balance of compute, storage, and network fabric in a way that allows those hundreds of thousands or millions of tokens to be created and generated for many, many users simultaneously with the lowest latency possible, right? And with the highest quality of

experience for those end users, right? This now looks, more so than in any other period in AI's history, like a production enterprise workload that runs 24/7, 365. You know, for the last, I'd say, 6 to 8 years, almost 10 years in fact, when we first introduced the world's first AI supercomputer.

I remember back in 2016, we shipped the very first one to OpenAI. And since that time, OpenAI had been quietly amassing a very large-scale infrastructure that then culminated ultimately in the ChatGPT moment that we all experienced a couple of years ago, right? But what's also happened along the way is that organizations maybe like yours have moved out of, let's say, the realm of,

you know, experimentation, research, science and academia. No offense to anybody who's involved in science or academia, those are very important. But many of you are actually using AI for real, pragmatic enterprise use cases. It's, you know, less about exploration, more about growing top line, reducing costs everywhere possible,

delivering a better experience to your patients, your customers, your business partners, whatever it is, right? And this is why I think today's conversation is so important, right, in terms of platform. We call that platform an AI factory. Hopefully you're hearing that vernacular from us and,

and, and our friends at Pure here more and more every day because we believe in 2025, if you are committed to infusing your business with AI you need a durable. Enterprise grade 24 by 7 infrastructure, a platform that can create those tokens, AI answers at speed for many users with incredible concurrency and ultra low latency, right? And if I'll just walk you through kind of my

convenient diagram here: if you liken today's AI factory to factories of old, right, if you think about the industrial revolution, the first factories that used raw materials, you know, you heard Matt, Matt Hall, talk about coal and steam and creating useful work out of those raw materials. You'd essentially now take foundation models, because maybe very few of you today need to

create a foundation model for your business application. Probably, more likely than not, there is a great model that's ready for you to use today. So you take a foundation model, you take your customer data, patient data, intellectual property, and with the right tools, you can then create a viable prototype

that's ready for fine-tuning and customizing. You can deploy it in production, and guess what? That model, that application, will continue to be fed with, if you will, the lifeblood of AI, which is data, which resides on Pure Storage, right? So you have this essential flywheel of model

creation, prototyping, delivering production, fine-tuning, retuning on an ongoing basis, right? And you'll see Pure Storage is really kind of, if you will, the heart of that AI factory that keeps the infrastructure fed with data. And everything that you're going to hear about from us, you know, over the course of today's session is about how you can realize essentially your own

AI factory in your own operation today, in 2025, not next year or years later, but, like, right now. So with that, I'm actually going to stop clicking through slides and I'm gonna... Oh, awesome. Hopefully you can hear me OK. I'm gonna stand as well. So, speaking of the partnership that we have, we can really call back to collaborating

on co-engineering something that was called AIRI, and that was a way for us to come together, bring together the compute component, the upstack components, as well as the storage and underlying data competency. We've been doing this for a while. We have exabytes of storage serving AI clouds, and, more importantly, we're installed at 13,000-plus customers

in actual production workflows. So we understand the discipline of being in production with our customers, and we know that those disciplines need to overlay on this new production workflow, as Tony mentioned. All right, so we want to take a moment to kind of talk about this journey, because every customer is gonna be in a different part of this journey.

And several of my customers have actually gone through this. They've started on a very simple deployment, like a BasePOD deployment, a couple of DGX servers, a few hundred terabytes' worth of storage, and they start experimenting. They need to build the confidence. Their data scientists are experimenting with models that they're familiar with.

The more adventurous are trying to develop some more interesting foundational models. My telco customers are trying to develop really interesting foundational models, and really to start messing around and start figuring out how they're gonna bring AI to answer the questions that the organization has tasked them with.

And that's the first stage, right? Um, where, where we're, uh, we're developing these models in a, in a private cloud, self-hosted. When we move up to the stage where we're now a bit more familiar, now we have some muscle memory.

We can now start to optimize our workflow, and customers that are at fine-tuned AI really are starting to bring their proprietary data into the models that they're using, and really trying to bring that DNA into the model so you get answers very relevant to the business asking the questions.

And then, of course, when you start moving towards more competency and more capability, you really start pushing very domain-specific models, such as in finance, and I mentioned telecommunications, and in life sciences: very specialized, with very special data, very curated and very unique to the type of workload that they're trying to solve for.

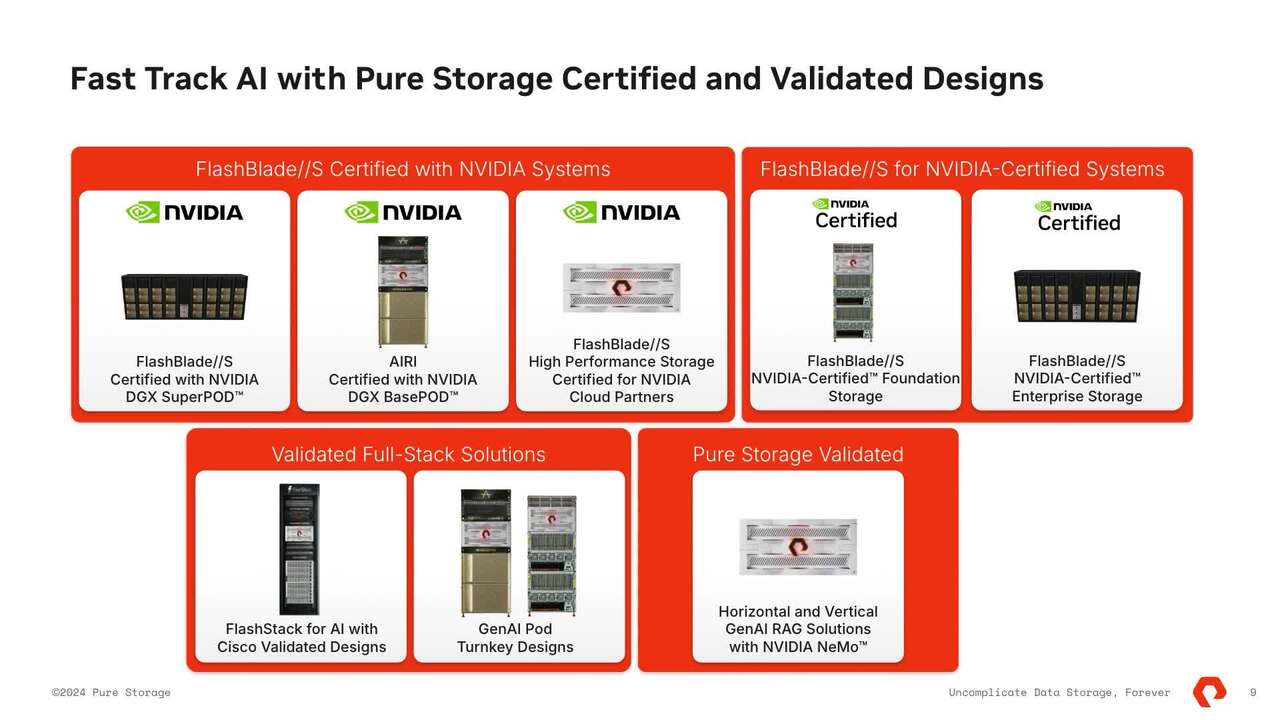

And if you get to the cloud-scale AI, hopefully we've been through this journey with you. All right. So the other important thing is that we're actually helping customers consume at each step with validated turnkey solutions, and we wanna meet you where you are in your journey.

And this is everything that we've worked for when we talk about our storage technology, when we talk about Portworx, when we talk about the certified architectures that we've developed with Nvidia and that we've worked very, very hard to co-create. The other very interesting thing is the co-engineering work that we're doing,

some stuff that's very interesting around key-value, KV cache. And I think that that's gonna be impactful when you talk about infrastructure becoming even more important for supporting large inference at scale. Having a very capable platform underneath to support that becomes a key component of

success for it. So, one platform for all your AI enterprise capabilities. This is part of our enterprise data cloud. When we talk about what Pure has been able to put together through all of our various technologies, we provide not only the consumption model, the SLAs, with our DirectFlash technology and Purity,

one interface, one control plane, in order to create a layer that is very, very extensible. With Pure, Nvidia, and AI, our solutions with BasePOD, SuperPOD, and certified systems, we really now can deliver a stack beyond just storage, beyond just providing that capability across multi-dimensional workloads.

We can provide a stack that can help you deliver those containers that are gonna deliver the cloud native AI that's out there. And there's one thing that I think it's important to know that customers when they build AI platforms, they want to develop platforms that are easy to consume and easy for them to deploy and create self-service capabilities.

So several of my customers, when they first started, it was very bespoke, it was for the data scientists, but as they went to production, they're looking to create services for their non-expert consumers, so your citizen data scientists, and they want to enable, you know, access to these tools in a way that's very scalable,

in a very shared network and shared infrastructure environment. For the expert consumers, they want to create direct access to the discrete hardware so that the data scientists and PhDs have direct access to do all of the heavy-duty lifting that they need to do. And with our platform, they can actually deliver those two components simultaneously and

with one experience. So whether I'm developing a shared, leveraged infrastructure for many novice users or I'm delivering discrete infrastructure for my expert consumers, the same experience can be delivered with Pure and what we've developed with Portworx. And of course,

the engine, the AI component, is very important, but there's also the data curation and data engineering. This is where we're helping our customers create glue and data mobility from their institutional data to move into AI training and AI functions. Of course, we can't say enough about this partnership.

We've gone through several iterations with Nvidia in order to really prove that our technology can support the differing scales of implementations. So from BasePOD to our SuperPOD capabilities, and of course, most recently, NCP.

We really can meet scale, not only at the small exploratory stage: we create and develop that unit for growth when you need to grow into production scale. We're easily creating that one system that can be deployed once and then multiplied over and over again to meet the inference at scale. Of course, we also have validated full-stack solutions, and we continue to work on

developing very turnkey Gen AI solutions across varying verticals. That's where we, from there. Thank you. Yeah, so, I wanna go back to this, and you know, we have Portworx up there. We talk about container data management, but what we're really talking about with that is

Kubernetes, right? And so that's where we really have to think about: what is the structure? What's the infrastructure that underlies all this? I know it's not sexy, you know, we're not talking about LLMs and asking questions and finding all the answers and tokens, but it's the underlying architecture that really lets us optimize for the AI that we're

driving. And that's what makes that infrastructure sexy, right? Being able to have that capability and being able to drive that across the environment. So we also need to think about how we're doing that, and that's where Kubernetes comes into play. It's where Portworx comes into play, in order

to be able to help drive that. So I just wanted to point that out, and we're gonna leave it here and we're gonna keep going. So we have some questions that came in, and you know, the guys have seen these, but I wanna ask these questions to the team and have them kind

of, uh, bring their answers to bear. And these are things that were submitted by different folks that we've talked to out in the field and then we'll get to your questions, right? Because we want to get questions from the group and we'll get those too. But I wanna lead off first with with Tony, right?

Tony's our expert. You can't build AI without Nvidia, right? It just doesn't work. So, as we talk to Tony, I want to know: as you talk to customers about their evolving AI journey, what has changed about what they're trying to do now versus what they were doing a few years ago? Gen AI as a use case really opened the door to

enterprises who are maturing in their use of AI, and I think you covered that some in your lead-up to this, but give us the extra insights. I'd love to double down on that, because really and truly, if I think back over the last, you know, 9 years, we've seen this remarkable evolution. And here's the thing, this is gonna

probably put some folks on edge a little bit, but you heard my friend Matt invoke the term shadow AI, hopefully, in the keynote this morning. And that was a very real thing. I will say that, you know, in the AI gold rush, as the provider of picks and shovels in that gold rush, we certainly spent a lot of those early years arming well-funded users,

data scientists, people who needed access to incredibly high-performance infrastructure to do their life's most important work, oftentimes training a foundational model from scratch, well outside of IT, without any kind of IT governance. And we created, if you will, or were a party to creating, this shadow AI problem, with silos of infrastructure that sprawled across the

enterprise. You yourselves may have this in your own organizations, with lots of discrete business units or teams that kind of stood up their own technology stacks, replicated their own infrastructure, maybe a lot of it in cloud, running up opex there, right? And now, today, we find it's a very different conversation with these organizations.

They no longer want sprawling, distributed, unorganized, unmanaged, underutilized silos of infrastructure. They want centralized, shared infrastructure on a common platform. Most of the customers we talk with today are asking us: help us build and manage our own AI center of excellence.

And think of it this way: I mentioned AI factory. Think of that as the platform, but that platform is part of a bigger construct that brings together people, process, and technology, right? So today, a lot of your organizations are thinking about: how do I bring those workloads together so I can improve the utilization factor of my

infrastructure, right? How do I consolidate best practices for developers, for instance, how you go from a viable prototype to a production model running 24/7, right? And how do I groom data science talent from within my organization? Because it's not easy to find experts,

people who have MLOps and data science expertise and who know essentially how to deploy and manage AI applications at scale. So more and more the conversation is: how do I get that centralized, shared environment within my own enterprise that lets me bring together people, process, and technology with IT governance? So this is no longer a thing that's happening

in the shadows, but something that's actually cost-effectively and expertly managed by IT organizations. And that's why I think we're so excited about this conversation, because instead of the problem of proliferating stuff in the shadows, we're helping enterprises make this look more and more like a mainstream, traditional enterprise workload, like it should be treated, right?

And, and I think that's good for all of us. And certainly, if you're looking for a platform on which to trust an enterprise grade workload, why not trust folks who've been doing this for a long time. Now, Victor, it's not really fair, but Tony teed you up, right? He did everything short of say enterprise data

cloud, but I'd love to get your take on that from the Pure perspective. And so, this is the reality of bringing AI in as a new experience and a new muscle that you have to develop organizationally. When we talk about the enterprise data cloud, all of the data that's sitting at my customers' fingertips is actually sitting in very large data lakes.

So the very first thing that they have to develop is data capabilities and data discipline. They have to become really good at helping their data engineers and their data scientists look through all of the institutional data and decide what's gonna be key in helping them answer. It might be very line-of-business specific if we're talking about,

you know, a large finance organization. It might be very risk driven; it might help the business optimize a workload or even optimize the type of transactions that they do and do them much more efficiently. And this data discipline needs to be the very first thing we help our customers with.

Once we're helping them mine all of this exceptional amount of data that's collected, the other thing is: OK, so now that you have your institutional data, how do I bring other data sets into the fold in a very disciplined way, with governance and controls that allow my data scientists to create new, novel interactions between data, as they're also using new, novel ways of implementing,

say, a vision model to correlate risk and to do Monte Carlo simulations with diffusion-type models. And so now we're getting into, like, the interesting part, where we have a bunch of data, we're getting really good at mining it, and we're helping them create velocity in that data.

It's usually done in a very batch-driven way that takes a very, very long time, and it's not very conducive to a full real-time inference chain. So the idea then is to eventually create these real-time paths to the source data and have your models interact and do decision making very close to that data. So: data discipline,

optimize the data flow, and then create real-time access to the source data as you improve your models and you have more confidence in them. Of course, now, when it's in production, I have to think about it in different terms. I have to have multiple data centers. Maybe I need the flexibility of creating inference and

training clusters at will as the volume for each one of those modalities arises independently. So creating one experience then becomes a very big priority for our customers. And what we've learned from them and learned with them

is that creating that experience and being able to extend that across any data center, creating hybrid access into their data and their data centers, and then extending and bursting out into cloud resources as necessary is really becoming the modality that they're starting to land on. So beyond just physical data center capabilities, extend that data set,

extend the pipelines, extend the data access into cloud resources, spin up a bunch of capabilities, and then maybe turn them off, right? And then bring back that control on premise. And every customer is looking for leverage, ultimately. They're looking for

leverage on and ownership of their data and control. They're looking for leverage in the way that they have vendors provide services to them. So by using our enterprise data cloud, we actually help our customers create levers so they can optimize throughout their life cycle and bring their data sets into their AI life cycle. So Victor, I wanna stay with you for a

minute because this morning we heard about enterprise AI capacities, right? That was, that was a new, new term for me. And so as we look at that, what does that mean for customers? Like how, how does that impact the customer environment and what does that mean for them? So when we talk about AI capacities, it's really the building up the capabilities to

deliver AI at scale. If you don't have a huge pool of data scientists, you're not really doing very fundamental work, you're trying to use off the shelf capabilities. You're going out to the cloud for resources that are easy to turn on by API, um, and then you're gonna try to risk adjust that and the exposure to that.

Uh, and as soon as the risk becomes too high, you try to bring all of those capabilities on premise. That includes the people that you need to hire, the infrastructure that you need to build to support that, and the agility that needs to be built in that stack. Tools change very, very quickly in the space.

Heck, when I look at the portfolio that Nvidia has developed with Nvidia AI Enterprise, I'm constantly just impressed by the level of innovation that keeps happening. And you talk about a flywheel, uh, that innovation and the tooling and the capabilities keeps on accelerating. And that acceleration means that you have to retool while your plane is flying.

While you're flying, while you're in production, you need to be able to frictionlessly retool. You need to be able to expand those capabilities without disrupting this now very mission-critical workload that is happening inside your walls. Tony, does Nvidia view that any differently?

Is the same kind of, call it nomenclature, present from your view? Yeah, absolutely. And everything you said just absolutely resonated. What I'd add on to that is that when you hit that tipping point and you decide that, because of, you know, data sovereignty, locality,

criticality of the stuff that you're working on, and your cost model, right, the escalating opex, whatever those parameters are, and you decide that, OK, I now need fixed-cost infrastructure that allows me to achieve, you know, the lowest cost per token, if you will,

you, the customers, you all, then obviously need to almost, don't get offended by this, run like a hyperscaler, without the complexity or cost that is usually assumed by trying to be all those things, right? And I think what I love about, you know, the Pure architecture in combination with what Nvidia does is the simplification of that task, right? Being able to have, you know,

something that behaves more autonomously, more point and click, if you will, that is more dynamic and agile relative to user demands, right? Nobody wants to hire a team of 10 infrastructure ops people to run this stuff, right? They simply want push-button simplicity, and I think Pure's always had that.

Obviously, from the Nvidia perspective, with the tools that we're building, you know, if I throw names around like Mission Control or Base Command and what we use to manage our infrastructure, it's all with the intent to make your life simpler, such that you do not need to onboard the skills of a hyperscaler, but you derive all the same benefits.

Agree 100%. And I think that that's where outcomes start to get delivered much more quickly. At first, it's a very, very slow process, but once the muscle memory is created, once you understand what you need, how you curate, you build automation around it.

It really is impressive. I've seen use cases grow from 3 to 5 core, fundamental, knowledge-based use cases to 200-and-change use cases, and we talked earlier about organizations building the whole matrix to deliver AI. There's AI centers of excellence. There's AI governance boards, really trying to bring that,

that, that discipline to this, this endeavor. You know, I want to lean into one more thing related to what you just said, right? If we think about the journey that I've been describing unfolding since 2016, right? I wanna talk about your users and what they looked like,

let's say 8 years ago versus what they look like now. They've changed, right? So that well-funded data science user who had access to money to buy, you know, a quarter million dollars of infrastructure back in 2016, they were probably training a couple of really big models, right? And they would basically submit jobs to

training infrastructure, and that one job was this huge monolithic thing that occupied the totality of that infrastructure for hours, days, maybe weeks, right? And that was pretty normal if you think about roughly the first 3 to 5 years of the massive infrastructures that we've been deploying around the world.

Fast forward to today: inside of, like, y'all's organizations, I would not be surprised if you said we have hundreds to thousands of users running many more, smaller, discrete jobs in parallel with huge amounts of concurrency. And while these aren't large monolithic training jobs, the latency requirements,

right, the responsiveness, the quality of experience in the answers coming out in the form of tokens off of this production workload, is almost more intense and more demanding than training that big, you know, hundreds-of-billions or trillion-parameter model from years ago, right? So I think the world looks very different, but I feel like it's even more demanding

on the infrastructure and the kind of tooling or the apparatus that Pure and Nvidia are essentially putting together to support. Well, that also brings in, again, going back to Kubernetes, that's my thing. That's what I talk about, right? But that's where we get, like, GPU sharing and time slicing and other things like that, because back in those days,

I remember people had to make decisions. Do I want to stop running this model to run this other thing or do I want to keep it going? And no one wants to make that decision anymore. They want to just say, I'm gonna add it to the mix and I'm gonna change the priority, and I'm gonna keep on going. And that's, that again is where,

you know, Kubernetes can really help out. So in case you haven't noticed, if you're not looking at Kubernetes, you should be looking at Kubernetes, right? Make that happen. Um, so, Victor, you did a great job of kind of summing up, you know, where we're at from a Pure Storage perspective and how we integrate with Nvidia.

But Tony, I would love to get your feedback from an Nvidia perspective, how you see the relationship from your side. Yeah, absolutely. I'm very proud of the fact that if, um, I rewind back to 2017, I think about a lot of the customer meetings that we did, uh, organizations like yours, we'd find ourselves, Pure and Nvidia, at the same meeting

room, same conference table, opposite sides of the table, trying to help the same customer, and the customer inevitably had these questions, uh, how do I design the right balance of compute, storage, and network? How do I put this together? How do I manage it, right? How do I scale it? And they'd say to us, this stuff feels like rocket science,

uh, or brain surgery, and I do not care to be a rocket scientist or a brain surgeon. So you two, they're pointing at Nvidia and Pure, need to get together and make this stuff simple, right? Take out all the black arts of designing, you know, AI infrastructure, make it simple to acquire,

easy to deploy, and effortless to manage and scale. And out of that was born AIRI. I mean, AIRI is on here, there we go. So that was basically, you know, the genesis of AIRI and why we got together and put together the world's first integrated infrastructure solution.

Since then, Pure's been on this remarkable trajectory to continue to evolve and expand and improve this portfolio across, you know, using the totality of Nvidia's technologies, right? And if I was to fast forward to today, this is kind of what this looks like. This is now, if you will, the flagship of what you might put inside your AI factory.

Like the gold standard of today's AI factory, right? So this is the Nvidia DGX B200. Um, this is best-of-breed infrastructure for those who want to support the full life cycle of AI from, you know, training all the way to fine-tune, customize, to deploy and infer at scale for many, many users with ultra-low latency.

This is a best-of-breed architecture and Pure is deeply integrated. This is what today's AIRI looks like, built on our Blackwell generation. We're very proud of it, but what's more important is I think what you're seeing hopefully is that there is a deeply intertwined joint roadmap of engineering and evolution between Pure and Nvidia to take the best of our architectures with the best of

Pure's technology and intertwine compute and data, right, in a very meaningful, purposeful way. So we're very proud of this. I think, you know, if I was to give you one piece of advice, it would be, you know, if you saw the stuff on the prior slide, plus this, I'd say, you know, there's an expo hall just down,

down the way here, have a conversation with the folks out there, visit the Pure booth, talk to them about AIRI and about all the other things in the Pure/Nvidia joint, uh, solution space, and they've got great formulas for you to be able to start your own AI factory journey. So, Tony's stealing the call-to-action slide that we're gonna go to,

but that's OK, right? Because when the legend leads you in that direction, you follow. Uh, so what are, what are next steps? Get started, right? Attend a Pure AI workshop, uh, participate in our assessments, come to the Expo Center where we're glad to talk to you.

We'll talk to you in the bathroom if you really want to. Wherever you go, AI is there and we will be too. Uh, evaluate validated designs, right? So look at those Pure-certified AI solutions and really take a deep dive into that, and then bring people like Victor in to be able to have those conversations and really understand what that means for your environment.

And then collaborate with experts, work with Pure AI experts. There are other folks out there. You have partners that have expertise in this space too. Remember, we go in together. We've got Nvidia, it's Pure, and our partner in order to be able to meet your needs. And so just remember there's those folks that

are out there as well to help you answer some of these questions, provide services around delivering it for your organization, and bringing the whole thing home. So, before we go, I'm putting you on the spot, OK? Victor, what is one thing that everybody in here can walk out that door and do this afternoon in order to be able to start their AI journey off right?

Start, take that first step. It can feel like a lot, but with a, with a great, great, uh, great first step made for you, ready for you, uh, we can help you take that first step. Come take the first step with us. OK, Tony, and you can't say, come talk to me, right? That, that's not,

that's not allowed. You got to pick something else. What I will say is, Nvidia and Pure will meet you where you're at in that journey, in that evolution. Wherever you're at on that scale, our companies, organizations, our teams will meet you where you're at and help you chart out a path to get to your own AI factory in 2025.

The factory can look and evolve according to what you need, right? In terms of users, scale, performance, complexity of models, all those things, right? But along that entire journey, there's an AI factory that fits essentially what you need. So let's come discover it.

And my piece is, remember, although your pink lanyards indicate that you're a customer, you're really a partner, right? You're a partner with all of us, right? We're all working together in order to be able to drive this home. So pick your other partners, pick who else you want in your partnership.

Go and find that partner that's gonna work with you, that VAR that's gonna help you out. Go and find that data scientist that you can work with inside your organization. Go and find your internal champions that you can build up within your org in order to be able to carry this home and make it matter for the organization. So, uh, with that, I just want to give you some stuff that you can go,

we can continue this conversation. We have a new, brand new customer community. If you haven't got it, you can scan that QR code. It'll take you there. You can sign up. There's a lot of interchange that we have on there.

A great place for our customers to be able to exchange ideas without, you know, pesky people like me getting in the way, right? So that's fantastic. And then the socks, right? You can go get a pair of socks. So, I'm gonna get you some. I'll get you some.

Uh, this is adding your voice to Gartner Peer Insights, so you can scan that QR code. You can provide some feedback, and you get a really cool, snazzy pair of socks. So think about that.
