42:22 Webinar

Unifying AI Data Pipelines: High-performance Storage for the Modern Enterprise

Discover how high-performance, scalable storage powers end-to-end AI/ML workflows and accelerates innovation.

This webinar first aired on June 18, 2025

Well, today we're going to be talking about something very different from the keynote: unifying data pipelines for AI. We'll cover several things. We'll talk about data pipelines themselves, and then about some of the work that I specifically do, so we can go into some of those details towards the end.

So, about myself. I'm Robert Alvarez. I have a PhD in applied mathematics from Arizona State University. Before coming to Pure, I was a researcher at NASA's Frontier Development Lab, which is a machine learning and AI incubator within NASA. I worked with AJ there, and we did some really, really cool stuff. I've worked at startups, at large enterprises (not just Pure), and at governmental agencies. I live in San Francisco, and I enjoy drinking rum and going out, so if you like any of those, please hit me up.

First, we're going to talk a little about some of the data trends and challenges we've been seeing in AI, including the latest trends in data engineering. Data lakes have been evolving, and object storage still dominates in AI. There are now Apache Iceberg tables and other open table formats that really help accelerate the use of object storage in cloud environments, and we're going to talk about how we are accelerating them too. With the rise of open table formats, storage innovation has really become a linchpin of AI, even though it's one of the least talked-about parts of AI, right?

Everyone's talking about GPUs and NVIDIA and all the sexy things you can do with AI, but we're showing how we can innovate for AI with our storage stack as well. And then obviously there are economic pressures and prioritization that are going to be really, really important here.

One of the first challenges we've been seeing in AI in general is that 80% of data is unstructured and only 20% is structured. If you want to make use of your data in AI, you run into the question: if your data is unstructured, how do you get effective use out of it? This is where data lakes and data lakehouses come in. They're more efficient for querying your data (we can talk a little about Dremio), and the data has to be organized in some semi-structured, queryable way that then lets you use things like the Model Context Protocol (MCP) to leverage it in your large language model or in any AI agents you might have. This is really important, because it's only going to continue: you're now also going to have synthetic data generated by large language models adding to this growth, while also giving you business value back.

Another challenge we've been seeing is the cost of AI adoption. On average, companies are spending a little over 8 months to go from pilot to production, and I think that's probably going to increase as hardware becomes harder to find.

If you're relying on NVIDIA GPUs, you're going to have some supply chain issues. And only about half of those initiatives actually reach deployment. So it takes about 8 months to reach production, but only half of your pilots actually get there.

And scaling has a lot of upfront costs. If you're on-prem, you're buying a lot of hardware, whether it's your compute stack, your storage stack, etc. This is really one of the challenges around AI technology adoption, and I'm sure you've all seen it as well.

One of the things we've been seeing is the upfront cost of going from the cloud back to on-prem. I honestly think you should start in the cloud with your pilot. If you're trying to do something like a large language model, start in the cloud, pilot it, get that business ROI, and then start bringing it on-prem before your cloud costs go berserk. I was talking to a customer yesterday who, already in Q1, is $3 million over what they had budgeted for the whole year. So they're going to end up at about 2 to 3 times their expected budget because they decided to deploy in the cloud. Fine-tuning, actually: I would not fine-tune in the cloud. They just didn't expect demand to be that high. They offered the product for free, which is partially to blame.

Because they offered the product for free, they got a lot of adoption, and now they have a high cost but don't know what their true adoption actually is. By bringing it on-prem, which we're helping them with, they can do things like remove the limit on the number of queries a user can make on the free version of their product, because those queries no longer drive up cost: they're not paying for every single query that goes out to the cloud. Yeah, OpenAI charges you by the token.

Not by the query, so that's a bit more of a challenge. Any other questions? OK. And yes, the data adds cost too, because they have to keep all of it accessible in the cloud.
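The pricing point above (per-token billing in the cloud versus paying once for on-prem capacity) is easiest to see with some arithmetic. Here is a minimal back-of-the-envelope sketch; every price, user count, and token count is a hypothetical placeholder rather than a figure from the talk or any vendor's price list, and the break-even model ignores depreciation, power, and staffing beyond a flat monthly opex.

```python
from typing import Optional


def monthly_cloud_cost(users: int, queries_per_user: int, tokens_per_query: int,
                       usd_per_million_tokens: float) -> float:
    """Per-token billing: every extra query adds cost (all inputs hypothetical)."""
    total_tokens = users * queries_per_user * tokens_per_query
    return total_tokens / 1_000_000 * usd_per_million_tokens


def months_to_break_even(onprem_capex: float, onprem_monthly_opex: float,
                         cloud_monthly_cost: float) -> Optional[float]:
    """Months until the on-prem purchase is paid back by avoided cloud spend.

    Returns None when on-prem running costs alone exceed the cloud bill,
    i.e. at this usage level moving on-prem never pays off.
    """
    monthly_savings = cloud_monthly_cost - onprem_monthly_opex
    if monthly_savings <= 0:
        return None
    return onprem_capex / monthly_savings


if __name__ == "__main__":
    # Hypothetical: 50,000 free-tier users, 200 queries/month, 2,000 tokens/query.
    cloud = monthly_cloud_cost(50_000, 200, 2_000, usd_per_million_tokens=10.0)
    print(f"cloud bill: ${cloud:,.0f}/month")  # 20 billion tokens -> $200,000
    months = months_to_break_even(2_000_000, 50_000, cloud)
    print(f"break-even after ~{months:.1f} months")  # ~13.3
```

Doubling queries per user doubles the cloud bill while the on-prem side stays flat, which is why lifting the query limit on a free tier only becomes affordable once the model runs on owned hardware.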

One of the things we do with Portworx is let you start in the cloud and then migrate down. When you're really thinking about your AI adoption: look, we're a storage company and we sell on-prem, but we totally get that you should start in the cloud.

But if you do it right from the get-go and think about how you do your Kubernetes orchestration, managed with Portworx, then you can bring it down on-prem and really reduce your cost, while still doing the pilot in the cloud, where it's easier.

And obviously there's maturity: AI and machine learning are going to help companies differentiate. How many of you know how many models are available on Hugging Face? Yes, 1.7 million. Yeah, 1.7 million different models. Obviously a lot of them are derivatives of other models, but I was looking at Qwen the other day and thought, OK, I just need to use the 1.5-billion-parameter version. I go to Qwen and they've got 40 different models. Fuck. Which one do I actually need to use? It's very annoying. And companies are accelerating their usage of AI, becoming more efficient and getting better at it, but that maturity is causing strain on existing data workflows and pipelines. We really do see a lot of that happening right now. This is all from the State of AI report from last year.

I highly recommend the State of AI talk at 2 p.m. with Par Botes, our VP of AI Infrastructure and a close friend. Now we're going to do a little deep dive into the AI workflow and some of the things you'll be seeing.

One of the first things you have to do, before you run your AI workloads, is figure out what your decision tree looks like. Here we're splitting it up: the first two boxes on the upper left are what infrastructure owners need to care about, and the business side is the boxes on the right.

And if you look at those workflows, they're very different. Infrastructure leaders and owners are asking how effective their GPU investment is: they need to talk about performance and the complexity of storage, and they need to get their ROI back on their GPUs. They want to move from batch to streaming, with all the challenges that brings, and legacy technology might actually be hindering their innovation. If you're using Hadoop tables, for example, that's no longer state of the art. What business leaders care about is how much data we can use, how easily we can scale, and whether data silos are impacting the business. Those are very different concerns, but both sides need data consolidation for analytics and AI, both feel how growing complexity across the estate slows business and innovation, and both care about data efficiency. So they care about different things, but ultimately, with the right technology in place, you can take care of all of them at once. We're removing data silos with Fusion, so you can find out where your data is.

In terms of the AI workflow, you've obviously got your product design, your tables, your databases, your data lakes, and streams and streams of data.

You need to acquire all that data and then prepare and index it, and that's obviously going to be a big challenge for your data center footprint and business continuity. All of this has to happen before you can move on to model engineering. This is where the fun stuff is; this is the part that I live in. You've got to select your model, train it, fine-tune it, evaluate it, refine it, go back and do more model selection, define different models, scale it out on Kubernetes, do hyperparameter tuning, and all that fun stuff. Then you eventually have to develop and deploy it, so you have to think about how that affects your security, your data governance, your GPU usage, and business continuity. All of these are very important to keep in mind. But one thing we say is that AI is just another storage workload.

For your data engineering pipeline, whether you're using Spark, Starburst, or Dremio, you need to read data in and store data. For model engineering, you've got Weights & Biases, TensorFlow, PyTorch, Python, and Kubernetes workloads, all doing reads and writes and generating metadata.

(Actually, the metadata label on this slide should be a little bit over that way.) So you're caring about all those different things at once, and then you have to do your ML systems engineering: MLflow, Elasticsearch, all of that is hitting your storage. So we're agnostic to the type of workload you have. We just do them all very, very fast. Hopefully.

How many of you have been deploying AI models? I'm just curious. Yeah. What types of models? Still a lot of...

Mhm. Right, OK, regression-type models. That's good. Oh, nice. Nice.

Raise your hand: are they still on the traditional ML side, like computer vision and predictive models, or more on the generative side? I'm kind of curious. Generative? Traditional? Both? All right, so we've got a little bit of both.

So you've all seen these tools before, but they're all impacting your storage, and we want to make sure you can run them all efficiently, because you're supporting multiple teams at once: your data engineers, your data scientists, and your deployment and production engineers.
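To make "these tools are all hitting your storage" concrete, here is a minimal sketch of a hyperparameter sweep in which every trial writes a checkpoint plus a small metadata record, the mix of larger and smaller writes an experiment tracker produces. The training step is a made-up loss function, the 1 KiB checkpoint stands in for a real framework checkpoint, and the directory layout is hypothetical; a real sweep would run as Kubernetes jobs and log through a tool like Weights & Biases or MLflow rather than a local thread pool.

```python
import itertools
import json
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def run_trial(out_dir: Path, trial_id: int, lr: float, batch_size: int) -> dict:
    """One hypothetical trial: fake 'training', then checkpoint + metadata writes."""
    # Placeholder loss so the sketch runs anywhere; smallest at lr=0.01, large batches.
    loss = abs(lr - 0.01) * 100 + 1.0 / batch_size
    trial_dir = out_dir / f"trial_{trial_id:05d}"
    trial_dir.mkdir(parents=True, exist_ok=True)
    # Larger write: stand-in for a model checkpoint.
    (trial_dir / "checkpoint.bin").write_bytes(b"\x00" * 1024)
    # Small write: the per-trial metadata an experiment tracker records.
    record = {"trial": trial_id, "lr": lr, "batch_size": batch_size, "loss": loss}
    (trial_dir / "metadata.json").write_text(json.dumps(record))
    return record


def sweep(out_dir: Path, lrs, batch_sizes, workers: int = 4) -> list:
    """Grid search: every (lr, batch_size) pair becomes a parallel trial."""
    grid = list(itertools.product(lrs, batch_sizes))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(run_trial, out_dir, i, lr, bs)
                   for i, (lr, bs) in enumerate(grid)]
        return [f.result() for f in futures]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        results = sweep(Path(tmp), lrs=[0.1, 0.01, 0.001], batch_sizes=[32, 64])
        best = min(results, key=lambda r: r["loss"])
        files = [p for p in Path(tmp).rglob("*") if p.is_file()]
        print(len(results), "trials,", len(files), "files; best:", best["lr"], best["batch_size"])
```

Three learning rates times two batch sizes gives 6 trials and 12 files here; grow the grid toward thousands of trials and the storage sees a steady stream of small metadata writes alongside the checkpoint traffic.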

If we think about some of the products used in our AI workflows, going back to the table we saw before: you've got your databases, your data lakes, and all your streaming data. You can acquire your data with Apache Spark and manage it on either FlashBlade or FlashArray, and use Cloud Block Store if you're in the cloud as well. For data preparation, you can use the FlashBlade architecture to really scale out and ingest data in parallel. For data indexing, whether it's Starburst or Dremio, again you can use FlashBlade, FlashArray, or even Cloud Block Store, which helps with data deduplication.

For model engineering, the touch points in blue are where storage actually comes into play. When you're training your model, you want to do that with FlashBlade and Portworx for the scale-out hyperparameter tuning workflow, and also think about bringing that back from the cloud, or even bursting to the cloud. That's one of the things we enable you to do. Say you're on-prem, but you don't have a good predictor of how variable your demand is. If you want to burst to the cloud with Portworx, you can copy your data over when you set up your cloud environment. Then, when you see a spike in usage, you say "give me 1,000 GPUs on AWS," and your data is already there and ready to perform. So it's a really good strategy not just for migrating from the cloud, but also for bursting to the cloud.
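The bursting strategy above boils down to a placement rule: fill on-prem GPUs first and send only the overflow to the cloud, where the data has already been replicated. The sketch below models just that decision; the capacity and quota numbers are hypothetical, and it does not use or represent any Portworx API (replication is assumed to be handled elsewhere).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Placement:
    on_prem_gpus: int
    cloud_gpus: int
    unmet_gpus: int  # demand that neither tier can absorb right now


def place_gpu_demand(demand: int, on_prem_capacity: int, cloud_quota: int) -> Placement:
    """Fill on-prem first, burst only the overflow to the cloud, cap at the quota."""
    if min(demand, on_prem_capacity, cloud_quota) < 0:
        raise ValueError("demand and capacities must be non-negative")
    on_prem = min(demand, on_prem_capacity)
    overflow = demand - on_prem
    cloud = min(overflow, cloud_quota)
    return Placement(on_prem, cloud, overflow - cloud)


if __name__ == "__main__":
    # Hypothetical cluster: 256 on-prem GPUs, cloud quota of 1,000 GPUs.
    print(place_gpu_demand(100, 256, 1_000))    # steady state: all on-prem
    print(place_gpu_demand(1_200, 256, 1_000))  # spike: 944 GPUs burst to the cloud
```

Keeping on-prem as the first tier means the cloud bill only appears during spikes, which is the point of bursting rather than running everything in the cloud.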

And then for utilization: Elasticsearch, Kubernetes. Again, this actually uses a bit of all of our products: FlashBlade, FlashArray, Cloud Block Store, and Portworx as well. On vectors, I'll talk about that.

No, no, you're good, you're just jumping the gun. I think Elasticsearch now has vector search. Actually, so does Microsoft SQL Server: SQL Server 2025 has it. There are a couple of talks today or tomorrow about SQL Server's integration of vector embeddings.

Now we're going to walk through a hypothetical company. It's actually a real company, but I can't say their name, so we call it Earth Analytics, because they work with data about planet Earth.

They're analyzing satellite data to understand traffic volume. This actually happens: there are hedge funds that look at satellite imagery of shopping-mall parking lots to determine how much traffic the malls are getting, and then make bets and trades on that. So this is actually a common workflow in hedge funds. They can also consume data from a third party to track shipping vessels (in this case, versus parking-lot data), and their analytics team produces pricing information on publicly traded companies. So if you see that the Walmart parking lots are empty, you might want to short Walmart stock.

But they need to ingest their data. You've got data streams coming from whatever GIS platform you're using, whether Planet Labs or something else, and you've got to acquire all of it: your metadata and your actual satellite imagery, arriving in real time. You acquire it and process all of it with Spark, and then you train your model before you deploy it. In that training environment, your model is reading all of this data, evaluating, refining, using a data flywheel, looking at how all of the models are performing, and doing a lot of model selection. Then you deploy it with MLflow, cache the results for utilization, and send them off to the trading system over whatever streaming platform you choose.
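The Earth Analytics flow (ingest imagery, run a model, emit something the trading system can consume) can be sketched as a chain of Python generators so each frame streams through without loading the whole feed. Everything here is a hypothetical stand-in: frames are plain dicts, the "model" is a precomputed car count divided by parking spaces, the 0.5 cloud-cover filter and the 0.1 band are arbitrary, and the output is a directional label, not real trading logic.

```python
from typing import Iterable, Iterator, Tuple


def ingest(frames: Iterable[dict], max_cloud_cover: float = 0.5) -> Iterator[dict]:
    """Stand-in for the imagery stream: drop frames too cloudy to use."""
    for frame in frames:
        if frame["cloud_cover"] < max_cloud_cover:
            yield frame


def infer_occupancy(frames: Iterable[dict]) -> Iterator[dict]:
    """Stand-in for the vision model: occupancy = detected cars / spaces."""
    for frame in frames:
        yield {**frame, "occupancy": frame["cars"] / frame["spaces"]}


def to_signal(frames: Iterable[dict], baseline: float,
              band: float = 0.1) -> Iterator[Tuple[str, str]]:
    """Compare occupancy to a historical baseline and label the direction."""
    for frame in frames:
        delta = frame["occupancy"] - baseline
        if delta < -band:
            label = "bearish"   # lots emptier than usual
        elif delta > band:
            label = "bullish"   # lots fuller than usual
        else:
            label = "neutral"
        yield frame["ticker"], label


if __name__ == "__main__":
    stream = [
        {"ticker": "RETAILER_A", "cars": 20, "spaces": 100, "cloud_cover": 0.1},
        {"ticker": "RETAILER_B", "cars": 90, "spaces": 100, "cloud_cover": 0.2},
        {"ticker": "RETAILER_C", "cars": 50, "spaces": 100, "cloud_cover": 0.9},
    ]
    for ticker, label in to_signal(infer_occupancy(ingest(stream)), baseline=0.5):
        print(ticker, label)
```

The cloudy RETAILER_C frame never reaches the model, which mirrors filtering bad imagery early so the expensive stages only see usable data.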

So speed is really important here. As you all know about hedge funds, they want to do this in sub-second time, or very, very fast. Actually, I would be surprised if this deployment still uses PyTorch; they might convert it to raw C or C++.

Their problem: machine learning takes days to iterate on a new model because of AWS access speeds, and the costs are crazy. So they asked us for help. Here are some of the things they could do if they want to migrate down from the cloud, because one of the challenges is that there's a lot of data coming in, the access patterns are slow on AWS, and they're also paying for all of that storage every month. With Pure Storage, they're able to actually reduce their cost. The costs are now 1%. CapEx versus OpEx. The VP doesn't lose their job, and is now Pure Storage's best friend.

These are the things we help with by having fast S3 and by helping you with inferencing and scale-out. All of these workflows can be done on the Pure Storage platform, and there's a lot of cool innovation we're doing here. Going back to what we showed before, across all of these systems we've got FlashBlade, FlashArray, and Portworx, all interconnected; I think this is probably a bit of a redo of an earlier slide.

When we're streamlining data pipelines with Pure Storage, for data collection we've got a high-performing, easy-to-use storage system. It's very plug-and-play, as we like to say: there's basically one switch, you just turn it on, everything else is pretty simple, and storage admins love us. For data discovery and processing, we've got things like Fusion, faster processing for real-time insights, and AI and ML labeling, and you may want to manage your storage explosion and your security. For training and evaluation, we help with high throughput and low latency for hyperparameter tuning and for reading and writing model storage. Lots and lots of really cool things we're doing here, and I'm going to get into that in just a bit.

Any questions thus far? All right. And some of the things that we actually help on our end to end AI vision. So this is the things that I work on. So we work on our AI ready data engineering pipelines, so data lake houses and specialized data pipelines. We do AI exploration,

development and testing to help AI enable businesses and products. And then the last thing is we're also trying to look into large scale AI models. And one of the 4, the four pillars that we really talk about in, in our group is really accelerating your time to data, your time to science slash exploration.

And your time to deploy and accelerating your time to skip up. Decreasing your time to scale. Yeah, you say at large scale yeah. Bedrock, yes, well, so what we, when we help the large scale AI models, we're talking about like the work we're doing is talking about like reads and writes so like

our new flash blade Xa is, is a platform there that's gonna support all that. Yeah. But we'll talk about like asynchronous checkpointing, what that does, and how we actually accelerate it. So here's sort of the, the good stuff and I, and I've left a lot of time here at the end for just like a nice little panel discussion like just hear your questions and like one of the

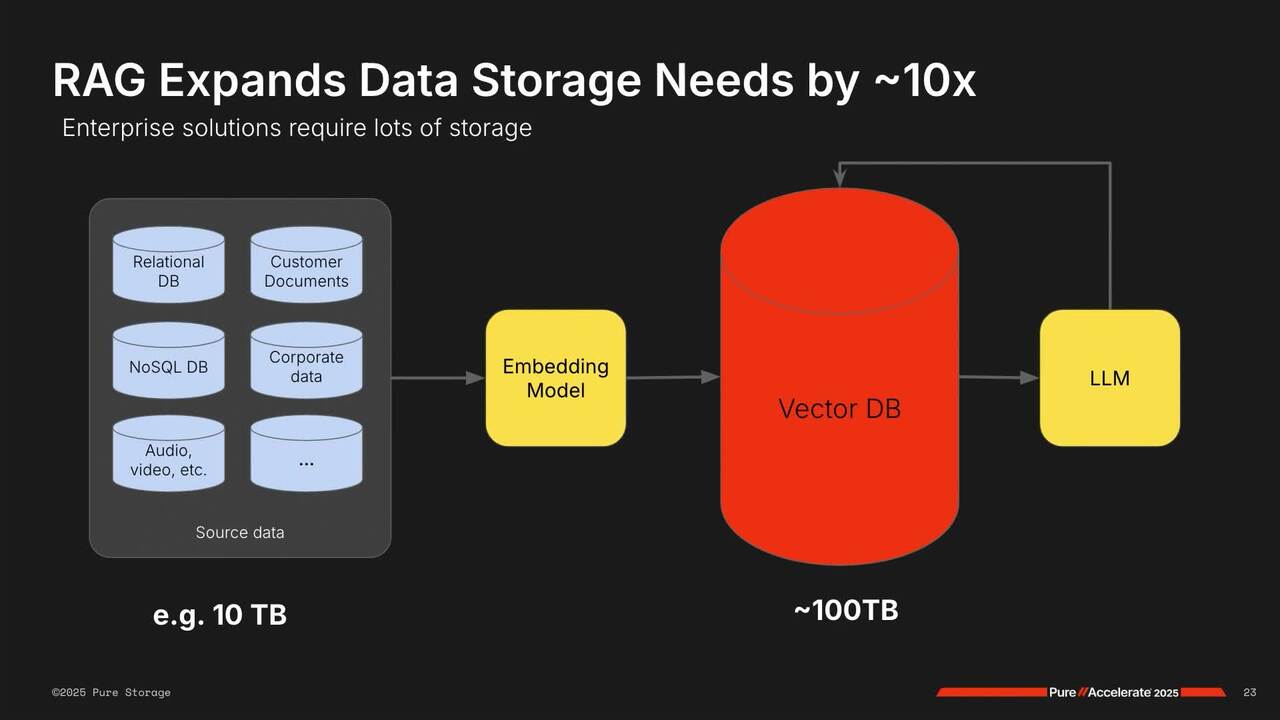

things that you're struggling with. But one of the areas where we've been innovating in AI is retrieval-augmented generation, or RAG. One of the challenges with RAG is that whenever you take text data, whether at the paragraph level, the sentence level, or the whole document, you need to turn it into a vector embedding.
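Before anything is embedded, documents are split into chunks. Below is a minimal sketch of fixed-size chunking with overlap; it counts whitespace-separated words as a stand-in for tokens, and the default sizes are arbitrary. Libraries such as LangChain ship their own splitters that count characters or tokens instead, so treat this as an illustration of the idea, not any library's behavior.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list:
    """Split text into windows of chunk_size words, each sharing `overlap`
    words with the previous window so text cut at a boundary keeps context."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


if __name__ == "__main__":
    doc = " ".join(f"w{i}" for i in range(10))
    for chunk in chunk_words(doc, chunk_size=4, overlap=1):
        print(chunk)  # "w0 w1 w2 w3", then "w3 w4 w5 w6", then "w6 w7 w8 w9"
```

Each chunk becomes one embedding, so a single large document read fans out into many small embedding writes and, at query time, many small random reads.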

So you take a paragraph of text and turn it into a vector of 768 or 1,536 dimensions, or whatever size your embedding model produces. You're generating a vector of that size for every single chunk you have, plus metadata. So you have to pass it through the embedding model. That's sort of the secret sauce of the LLM: if you think about what the embedding model does, it takes data and separates it out into different dimensions, so finance data lives in one region of this vector space, sports in another, science in another, and so on. You're also doing this with your own business documents, so HR could live in a certain area. Then you store that in your vector database, whether it's Milvus, pgvector, or Microsoft SQL Server, and you want to be able to query it from your LLM.

What we're saying is that you're going to ingest all of this data, and ultimately you're also going to feed good responses back into your vector database. One thing you could do is track responses with something like a thumbs up or thumbs down, so you can say: we think this is a really, really good response, we should actually store it, so that if someone asks that question again, we have the gold standard for that response.

If you look at the access pattern: after ingestion, that's huge, right? But for chunking, you're talking small IO. Well, here's the thing: for document ingestion in the pre-processing phase, yes, it's large IO, but you then chunk it and pass around small IO. If you're using LangChain, that takes a little while.

It depends on the chunker and the chunk size, but it's still pretty large. So, lots of small IO, and we'll talk about how we can accelerate that as well, but the access pattern we see is a lot of small random reads.

You've got the graphic up there. First I want to get a picture, then I have a question. So actually, I have a customer who was looking for an infrastructure refresh.

They wanted us to give them a refresh, and it was kind of up to us to make the recommendation. I've got an HPC specialist on the infrastructure side, an expert, and he and I have been locking horns, because he's really big on commodity storage being good enough: it checks all the boxes, he says. As I look at this, I came here today looking for a takeaway I can bring back: what are the differentiators that justify not just traditional storage, even if it's all-flash, but FlashBlade specifically? He'd also need to understand why not FlashArray. Anyway, he was the expert, so his recommendation went out, and that's what the customer is buying. So I'm kind of gritting my teeth, but I'd like to come back with something, and I think this graphic is probably where that is.

Do you know what type of deployment it was? It was HPC, kind of a test of the value, with the Air Force, and these little cells each have their own mandate. As for what's specifically in scope for him, he didn't tell me much; he just said he's not doing anything crazy. But to make the case for why FlashBlade in particular is the right fit, I don't have a great answer.

So FlashBlade is going to help with scaling out. Think about just hyperparameter tuning: you're doing thousands of reads and writes, scaling out your model so that you can pull the lever and say, if I change this parameter, what model do I get? The easiest way to do that is to scale out to tens of thousands of experiments, and that's going to hit your storage with random reads and writes, metadata checks, checkpoints, and so on. Because of FlashBlade's high throughput and scale-out architecture, you're able to do that with very little delay for your data scientists. It's scale-out, which matters here. With a scale-up system, you're addressing it by an IP address, whatever the protocol. Now you need more storage, and it can't grow anymore.

Are you putting a second one next to it? Now you're changing your code to talk to the new storage. With FlashBlade, you scale out the storage as far as you need to go and keep talking to that one interface. Yes, file and object on FlashBlade, and a lot of the workloads in AI use object.

All right. So, going back to RAG and the typical use cases: I know ChatGPT has sort of changed the game a little bit.

When we think about RAG, you've got all your documents stored on your FlashBlade or FlashArray, but you need to process them. Using LangChain or whatever you're using, you've got to do the pre-processing and the chunking and store that metadata. Then you pass the chunks through your embedding model and store the embeddings in your vector database. The second flow is when a user comes onto your platform, say a chatbot, and asks a question. That question also has to be embedded so that it can retrieve the relevant documents that live near it in the vector space. Then it eventually goes to your LLM, and the time to first token (when the first character appears in your ChatGPT-like interface) has to be under one second. That's the kind of SLA we've been seeing.

And that's pretty complex, but this is a simple version of what would be an enterprise-scale system, where you've got hundreds or thousands of databases and many data processing engines, and you're storing all that metadata. You also need to store those chunks, so that if you need to rerun or switch to a different embedding model, you don't have to redo the pre-processing. And if we think about multimodal models: embedding text takes one type of embedding model, but embedding images takes a different one. So you've got text embedding models and image embedding models, and you've got to store those embeddings, whether in Milvus or something else. Then there's the user workflow: you're going to have thousands, hundreds of thousands, or maybe millions of users hitting it. They're going to hit an AI agent first, meaning the query comes in and the AI agent asks, what do I need to do? Do I need to get text data? Do I need to get image data?

Do I need to pull data from a database? If you're asking a question like "what is the pipeline for the next two quarters for such-and-such," I might need to go to a SQL table. So I need my model to do text-to-SQL, run the vector search, look in my databases, and pass all of that through. Now there's another LLM in the way that asks, is this user actually allowed to see this data? You have role-based access control, which could happen at this agent or here as well. It also asks, are we giving out any information that may be wrong or misleading? And you still want the round-trip time to be, maybe not under one second, but under tens of seconds.
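Putting that enterprise flow together (the agent picks a source, access control is checked, then the embedded question retrieves its nearest neighbors), here is a minimal sketch under loud assumptions: routing is a keyword match standing in for an LLM classifier, the role table is a hypothetical policy, the three-dimensional vectors stand in for real embeddings, and retrieval is brute-force cosine similarity, whereas vector databases such as Milvus use approximate nearest-neighbor indexes.

```python
import math
from typing import Dict, List, Set, Tuple

# Hypothetical routing keywords; a real agent would ask an LLM to classify.
ROUTES = {
    "sql": ("pipeline", "revenue", "quarter", "forecast"),
    "image": ("image", "photo", "diagram"),
}
# Hypothetical access policy: which roles may read each source.
ACL: Dict[str, Set[str]] = {"sql": {"sales", "finance"}, "image": {"everyone"}, "text": {"everyone"}}


def route(question: str) -> str:
    """Pick a data source for the question; default to document retrieval."""
    q = question.lower()
    for source, keywords in ROUTES.items():
        if any(word in q for word in keywords):
            return source
    return "text"


def authorized(roles: Set[str], source: str) -> bool:
    """Role-based access check before any data is fetched."""
    allowed = ACL[source]
    return "everyone" in allowed or bool(roles & allowed)


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: List[float], index: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Brute-force nearest neighbors by cosine similarity."""
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    question = "What is the pipeline for the next two quarters?"
    source = route(question)
    print(source, authorized({"engineering"}, source), authorized({"sales"}, source))
    # Toy 3-d "embeddings": finance-ish, sports-ish, and HR-ish documents.
    index = {"finance_doc": [0.9, 0.1, 0.0], "sports_doc": [0.0, 1.0, 0.1], "hr_doc": [0.1, 0.0, 0.9]}
    print(top_k([1.0, 0.2, 0.0], index, k=2))
```

Checking access before retrieval, rather than filtering the LLM's answer afterwards, keeps restricted rows out of the prompt entirely.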

There are a lot of challenges there. We've actually done work on this. (I hate that they made us all choose black backgrounds; unfortunately there was black text on an image, so I'll try to walk you through it.) We did data ingestion with NVIDIA using the Milvus vector database. Milvus is one NVIDIA has been pushing a lot, because it's one of the few vector databases that do GPU indexing for vectors. Not all of them do, but it's a great vector database. What we've shown is that because we speak native object, we don't need MinIO as the object storage layer for Milvus. Milvus uses MinIO, and we said, well, let's get rid of MinIO, we talk object. We were able to give you, I think it was, a 37% reduction in ingestion time.

That means you can load your vector databases, or the indexes in your vector database, faster, which means you're able to query sooner, especially if you start thinking about real-time indexing, or regular overnight indexing where you process all the new files you just got in. One of the nice things is that we're transparent to the architecture. If these are your GPUs and your Mellanox switches, over Ethernet, then with this FlashBlade, as you need more data or a larger size, since we're transparent to the NVIDIA network and we have non-disruptive upgrades, you can just add more blades to your FlashBlade to add more storage, and you never have downtime. We have six nines of availability, 99.9999%, with non-disruptive upgrades.

The other thing, going back to what Grant was saying about FlashBlade's scale-out architecture, is that we also give you almost linear scaling as you go to more and more DGX nodes. We went from one node to two nodes. One thing that's not clear because of the colors: this is direct-attached SSD and this is FlashBlade, and this gray line is the previous FlashBlade version, so imagine that smaller bar. Then you scale out to more DGX nodes: with two NVIDIA nodes there's a 40% reduction just by scaling out, so you're almost halving your time. And you do your querying and indexing through NVIDIA NIM microservices as well.

Was this a test environment, or is this... This is in our lab, but we did it with NVIDIA. We actually have a reference architecture. Is there a reference for the switching topology, or does it use other switch series?

The reference ar we published has the the the Nvidia switches, but if you wanted to switch them out 10 100 gigs, yeah, for us it's just the ethernet you put like. So did you adds to the flash from 12. No, same exact system scaling out to more DGX's.

Yeah, yeah. And then, so this is also on ingestion, right? You're mostly, but then as you scale out for data querying, so as more as you need to get to scale out to more DGX nodes, you're able to get linear scaling on your query or your throughput per second. So one DGX node with the same flash blade, 2 DGX nodes,

3 DGX nodes. And you've got basically near linear scaling of that as well. So this was really, really cool work that we published. It's on our uh solutions page on PureStorage.com/soluts/AI for always forgot one of those.
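The availability and scaling figures above can be sanity-checked with some quick arithmetic. This is a back-of-the-envelope sketch, not a Pure Storage or NVIDIA tool; the function names and the 365.25-day year are our own assumptions.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # assumption: average (Julian) year

def downtime_seconds_per_year(nines: int) -> float:
    """Downtime budget implied by an availability of `nines` nines (6 -> 99.9999%)."""
    unavailability = 10.0 ** -nines
    return unavailability * SECONDS_PER_YEAR

def scaling_efficiency(time_one_node: float, time_n_nodes: float, nodes: int) -> float:
    """Measured speedup divided by ideal linear speedup (1.0 = perfectly linear)."""
    return (time_one_node / time_n_nodes) / nodes

print(f"six nines:   {downtime_seconds_per_year(6):.1f} s/year")
print(f"seven nines: {downtime_seconds_per_year(7):.1f} s/year")
# A 40% reduction from one to two DGX nodes leaves 0.6 of the one-node time.
print(f"two-node efficiency: {scaling_efficiency(1.0, 0.6, 2):.0%}")
```

Six nines comes to roughly 32 seconds of downtime a year (seven nines would be about 3 seconds), and a 40% reduction on two nodes is about 83% of ideal linear scaling, which is why it reads as "almost halving" the time.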

Uh, yeah. Any questions on RAG? I'm gonna switch subjects in just a second to KV cache, which is the new hotness, and we're actually gonna show you some stuff that's not published yet but is still really, really cool. So, does anyone know what KV cache is?

So in a large language model, in a transformer architecture in particular, there are the QKV: queries, keys, and values. The query changes with each new token, but every time the LLM generates the next token, it would otherwise need to reprocess the keys and values for all the previous tokens it just saw.

So let's say you ask it a question and the first output is "the"; then it needs to reprocess everything again to get "fluffy," and then again for the word after "fluffy," so this is really an N-squared operation. What NVIDIA has done, and we see this all the time, is cache those keys and values in GPU memory on every iteration. So you go from N-squared operations to order-N operations by caching on every single iteration. They have to keep going back because they're generating only the next token. There are some innovations happening now on speculative decoding, where it's like, I have so much compute... Yeah, this is the decode process.

This is all the decode process, right? So with prefill, you pass in the query, process it, and then you start the decode process. So if you think about the storage capacity there, as models get bigger and, you know, you want a longer context window, these caches are becoming increasingly large.
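The N-squared versus order-N point can be seen in a toy, single-head attention loop. This is a minimal sketch under stated assumptions: one head, random projection matrices, and a fixed sequence of made-up token embeddings instead of a real model's sampled tokens. It counts how many key/value projections each strategy computes; the attention scores themselves are still computed against every cached key.

```python
import math
import random

def matvec(W, x):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def attend(q, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]  # numerically stable softmax
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total
            for i in range(len(values[0]))]

class ToyHead:
    """One attention head with random projections; counts K/V projections."""
    def __init__(self, dim, seed=0):
        rng = random.Random(seed)
        def rand_matrix():
            return [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(dim)]
        self.Wq, self.Wk, self.Wv = rand_matrix(), rand_matrix(), rand_matrix()
        self.kv_projections = 0

    def project_kv(self, x):
        self.kv_projections += 1
        return matvec(self.Wk, x), matvec(self.Wv, x)

def decode_without_cache(head, xs):
    """At step t, recompute keys/values for all t tokens so far: O(N^2) projections."""
    outputs = []
    for t in range(1, len(xs) + 1):
        keys, values = zip(*(head.project_kv(x) for x in xs[:t]))
        outputs.append(attend(matvec(head.Wq, xs[t - 1]), keys, values))
    return outputs

def decode_with_cache(head, xs):
    """Append each new token's key/value to a cache and reuse it: O(N) projections."""
    k_cache, v_cache, outputs = [], [], []
    for x in xs:
        k, v = head.project_kv(x)
        k_cache.append(k)
        v_cache.append(v)
        outputs.append(attend(matvec(head.Wq, x), k_cache, v_cache))
    return outputs

rng = random.Random(1)
xs = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(10)]  # 10 toy token embeddings
slow, fast = ToyHead(4), ToyHead(4)  # same seed, so identical weights
out_slow, out_fast = decode_without_cache(slow, xs), decode_with_cache(fast, xs)
print(slow.kv_projections, fast.kv_projections)  # 55 vs 10
```

Both loops produce the same outputs; for 10 tokens the uncached loop computes 1 + 2 + ... + 10 = 55 key/value projections, while the cached loop computes 10. A real model repeats this for every layer and head, which is what makes the cache so large.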

So if you think about a 30-billion-parameter model with 60 gigabytes for the model weights, your KV cache can actually grow to almost 180 gigabytes, which is more than most single GPUs can hold. So we want to bring that down to storage and say, well, why don't we cache the keys and values for those queries, so that we can get to the decode phase faster and not have to reprocess them. So one of the things we've been doing is saying, well, if I see this query all the time, I should just cache the keys and values for it, so that my user gets to decoding faster.
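The 60 GB and roughly 180 GB figures can be reproduced in rough form. This sketch assumes FP16/BF16 values (2 bytes each) and a hypothetical LLaMA-30B-shaped configuration (60 layers, 52 heads of dimension 128, no grouped-query attention). The speaker's actual model and batch size aren't given, so the batch of 32 requests × 4,096 tokens is purely illustrative.

```python
def weight_bytes(params, bytes_per_param=2):
    """Memory for the model weights at the given precision."""
    return params * bytes_per_param

def kv_cache_bytes(layers, kv_heads, head_dim, tokens, bytes_per_value=2):
    """Keys + values (the factor 2) stored for every layer, KV head, and token."""
    return 2 * layers * kv_heads * head_dim * tokens * bytes_per_value

# Hypothetical 30B-class configuration (assumption, not from the talk).
LAYERS, KV_HEADS, HEAD_DIM = 60, 52, 128

per_token = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, tokens=1)
batch_tokens = 32 * 4096  # illustrative: 32 concurrent 4,096-token requests
print(f"weights:   {weight_bytes(30e9) / 1e9:.0f} GB")
print(f"per token: {per_token / 1e6:.2f} MB")
print(f"KV cache:  {kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, batch_tokens) / 1e9:.0f} GB")
```

Under these assumptions the weights come to 60 GB and the KV cache to about 209 GB, the same ballpark as the 180 GB mentioned. Models that use grouped-query attention store fewer KV heads and shrink this considerably.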

We've also been taking advantage of the fact that you might have prompts that don't change except for the last bit, what the user is asking. You can have a whole multi-shot prompt and then say, well, here's the user's question, now refine it. We can cache that part of the prompt and only process the last bit, which gives you a speedup there as well.
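Caching a fixed prompt prefix can be sketched as a block-keyed store. This is a hypothetical illustration, not the speaker's library or vLLM's implementation: `PrefixKVCache`, `BLOCK`, and `toy_compute_kv` are made-up names, a dict stands in for the file or object store, and the "KV" entries are placeholders. Each key hashes the whole prefix up to its block, so a hit means everything before it matches too.

```python
import hashlib

BLOCK = 4  # tokens per cache block (hypothetical; real systems often use larger blocks)

def block_keys(tokens):
    """One key per *full* block; each key hashes the whole prefix up to that block."""
    keys, h = [], hashlib.sha256()
    full = len(tokens) - len(tokens) % BLOCK
    for i in range(0, full, BLOCK):
        h.update(repr(tokens[i:i + BLOCK]).encode())
        keys.append(h.copy().hexdigest())
    return keys

class PrefixKVCache:
    """Hypothetical prefix cache; `self.store` stands in for a file or object store."""
    def __init__(self):
        self.store = {}

    def prefill(self, tokens, compute_kv):
        keys = block_keys(tokens)
        hits = 0
        while hits < len(keys) and keys[hits] in self.store:
            hits += 1
        start = hits * BLOCK
        cached = [kv for key in keys[:hits] for kv in self.store[key]]
        # Only the uncached suffix is computed; a real model would attend to `cached` here.
        kv = cached + compute_kv(cached, tokens[start:])
        for j in range(hits, len(keys)):  # persist newly completed blocks
            self.store[keys[j]] = kv[j * BLOCK:(j + 1) * BLOCK]
        return kv, len(tokens) - start  # (full KV, tokens actually computed)

def toy_compute_kv(prefix_kv, new_tokens):
    """Stand-in for a forward pass: one fake entry per new token (ignores the prefix)."""
    return [("kv", t) for t in new_tokens]

shared_prompt = list(range(12))  # e.g. a fixed multi-shot prompt: 3 full blocks
cache = PrefixKVCache()
_, computed_first = cache.prefill(shared_prompt + [100, 101], toy_compute_kv)
kv_second, computed_second = cache.prefill(shared_prompt + [200, 201, 202], toy_compute_kv)
print(computed_first, computed_second)  # 14 then 3
```

The second request reuses the three cached blocks of the shared prompt and computes only the three new tokens of the user's question.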

So does it act like a cache kind of thing? Yeah, in a lot of ways, but also think about if you're bursting to the cloud, or you've got an influx of users hitting the same query, or your system goes down and you need to restart it on a different node. You can just say, well, that user was asking about this question, here's their cache already, spin it up, and to the user it's sort of seamless and magic. Bursting into the cloud is also a good one, because you just transfer that cache over. Actually, I don't know if I talked about the paper here. This actually comes from a paper, an actual academic arXiv paper, but I'll find the name for it.

So I might actually skip this slide for the sake of time, but this is the new NVIDIA Dynamo architecture. The one on the left is the previous version, where you would get an input, go through that first prefill, and then start decoding. What they're doing now with NVIDIA Dynamo is dedicating specific GPUs to the prefill and some just to decoding, so that you get better efficiency. You're also able to do what's called speculative decoding, which is to say, well, I have this question, quickly draft the next ten or so tokens, and then check which of them the full model agrees with, because you've got extra compute there.

Let's skip this a little bit. One of the things they've seen with Dynamo disaggregation, though, is tokens per second, right? They got a 30x increase in tokens per second with this disaggregated architecture on DeepSeek, and 2.5x on Llama 70B, so that's a huge improvement in throughput. That means you can scale this out to more users. And one of the things that we've done, this is literally hot off the press, I generated this graph yesterday, is KV cache optimization. What the gray line shows is what happens as I increase the token count in my query: how long does it take for me to get my first token back out? Gray is just the query, no caching. This one is KV caching on Pure Storage, where we pass that cache back to the GPU as it needs it, and we're seeing a conservative 3x; we've seen 6x speed improvements using the library my colleague and I wrote.
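The speculative decoding idea mentioned with Dynamo can be sketched in its simplest greedy draft-and-verify form. This is a textbook illustration, not NVIDIA Dynamo's implementation; `toy_target` and `toy_draft` are made-up "models" over digit tokens, and the draft is deliberately wrong whenever the last token is 7. With greedy verification the output is identical to plain greedy decoding with the target model; the win is fewer sequential target passes.

```python
def greedy_decode(next_token, prompt, max_new):
    """Reference: one expensive target-model step per generated token."""
    seq = list(prompt)
    for _ in range(max_new):
        seq.append(next_token(seq))
    return seq

def speculative_decode(target_next, draft_next, prompt, max_new, k=4):
    """Greedy speculative decoding: a cheap draft proposes k tokens, the target verifies."""
    seq = list(prompt)
    verify_passes = 0
    while len(seq) - len(prompt) < max_new:
        proposal = []
        for _ in range(k):  # the draft runs k cheap steps ahead
            proposal.append(draft_next(seq + proposal))
        # A real system scores all k positions in ONE batched target pass;
        # here we call the target per position but count it as a single pass.
        verify_passes += 1
        accepted = []
        for tok in proposal:
            expected = target_next(seq + accepted)
            if expected != tok:
                accepted.append(expected)  # first mismatch: keep the target's token, drop the rest
                break
            accepted.append(tok)
        else:
            accepted.append(target_next(seq + accepted))  # all accepted: one bonus token
        seq.extend(accepted)
    return seq[:len(prompt) + max_new], verify_passes

def toy_target(seq):
    return (seq[-1] * 3 + 1) % 10

def toy_draft(seq):
    return 0 if seq[-1] == 7 else toy_target(seq)  # wrong whenever the last token is 7

out, passes = speculative_decode(toy_target, toy_draft, prompt=[2], max_new=10)
assert out == greedy_decode(toy_target, [2], 10)  # same output as plain greedy decoding
print(passes)  # 5 verification passes instead of 10 sequential target steps
```

The better the draft matches the target, the more tokens each verification pass accepts; a perfect draft here would need only 2 passes for 10 tokens.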

So it's all been really good. The other thing that we've been doing is asynchronous checkpointing; there's a blog I wrote a couple of weeks back. One of the standard ways you'd do checkpointing, especially for large language models, is that as the model trains through all of this data, you stop and say, save the model, so that if I have a failure, I can restart from this step. What happens is that the GPU has to offload all that memory and put it in storage, and only once it gets the signal back that the write is done can it continue training. Waiting for that signal to come back was super slow.

What PyTorch does, and this is not a Pure Storage innovation, is say, well, why don't we offload from GPU to CPU memory, which signals back really quickly, and then let the CPU handle the write to storage while the GPU keeps on training. So what you see with asynchronous checkpointing is how long it takes to save a model of this many parameters, and you're seeing 5x, 10x, sometimes even 20x faster saves: just pass it to storage but keep on training. This looks like write acknowledgement, one of those things Pure did 10 years ago that made their writes so much faster, because it feels very similar as a concept, right? Disaggregated across multiple places, but still the same kind of challenges. Do I need anything beyond PyTorch, or can I just use the library in PyTorch? This is native PyTorch asynchronous checkpointing.
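The asynchronous checkpointing pattern described above can be sketched with the standard library alone. Recent PyTorch releases provide this natively through `torch.distributed.checkpoint.async_save`; the sketch below is not that API. `AsyncCheckpointer` is a hypothetical name, `copy.deepcopy` stands in for the fast GPU-to-CPU copy, JSON stands in for the checkpoint format, and a background thread plays the role of the CPU writing to storage while "training" continues.

```python
import copy
import json
import os
import tempfile
import threading

class AsyncCheckpointer:
    """Snapshot state in memory, then persist it on a background thread."""

    def __init__(self, directory):
        self.directory = directory
        self._writer = None

    def save(self, step, state):
        self.wait()  # keep at most one checkpoint write in flight
        snapshot = copy.deepcopy(state)  # stands in for the fast GPU -> CPU copy
        self._writer = threading.Thread(target=self._write, args=(step, snapshot))
        self._writer.start()  # returns immediately; training keeps going

    def _write(self, step, snapshot):
        path = os.path.join(self.directory, f"step_{step}.json")
        tmp = path + ".tmp"
        with open(tmp, "w") as f:
            json.dump(snapshot, f)
            f.flush()
            os.fsync(f.fileno())  # make sure the bytes reached storage
        os.replace(tmp, path)  # atomic rename: never leave a half-written checkpoint

    def wait(self):
        """Block until the in-flight write (if any) has finished."""
        if self._writer is not None:
            self._writer.join()
            self._writer = None

ckpt_dir = tempfile.mkdtemp()
ckpt = AsyncCheckpointer(ckpt_dir)
state = {"step": 0, "weights": [0.0] * 1000}
for step in range(1, 6):
    state["weights"] = [w + 0.1 for w in state["weights"]]  # stand-in for a training step
    state["step"] = step
    if step % 2 == 0:
        ckpt.save(step, state)
ckpt.wait()  # flush the last write before exiting
print(sorted(os.listdir(ckpt_dir)))
```

The snapshot is what makes this safe: the training loop can keep mutating `state` while the background thread writes a consistent copy, and the training loop only waits if a new checkpoint is requested before the previous write finishes.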

This is not released yet. vLLM does have a KV cache connector, but we found a bug and filed it; it's just not working, whereas ours does work. One of the things we're not showing here is that this is also available on file and object. So you can cache on object and pass it back to the GPU, or you can cache on file and pass that back to the GPU. Faster, maybe? This graph is for file; object is probably around the same. So that's the benchmarking we showed you.

But yeah, file is faster. Uh, we could probably skip that. Yeah, I've got 3 minutes left, and I wanted to open it up to questions. You guys have been great thus far.
