Dismiss

Innovation

A platform built for AI

Unified, automated, and ready to turn data into intelligence.

Dismiss

June 16-18, Las Vegas

Pure//Accelerate® 2026

Discover how to unlock the true value of your data.

Dismiss

NVIDIA GTC San Jose 2026

Experience the Everpure difference at GTC

March 16-19 | Booth #935

San Jose McEnery Convention Center

39:46 Webinar

What’s New in SQL Server 2025: AI, Performance, and Everpure Optimization

Explore SQL Server 2025’s AI, vector search, REST support, and see how Everpure boosts performance for modern database workloads.

This webinar first aired on June 18, 2025

The first 5 minute(s) of our recorded Webinars are open; however, if you are enjoying them, we’ll ask for a little information to finish watching.

Click to View Transcript

All right, thanks. How's he doing? Good? Yeah, who is the best? Valerie, yeah. Yeah, she's awesome. That person knows everything. Uh, cool. All right, let's get started.

This is SQL Server 2025, uh, in pure storage. Cool thing is, uh, we've been in the private preview with Microsoft for a long time, so we've been working with this since about November, and seeing some constant iterations on a product that's now, uh, in CTP or Community Technology preview, so you can just go download this now. Um, there's a bunch of new features and

technologies that we're gonna go through today, but really today is gonna be focused on kind of the intersection. Of pure storage and also SQL 25 and stuff that we've done in our labs, uh, and kind of show you some hopefully some pretty cool things. Uh, in your environment, or that you can use in your environment, who are legit question, right? Um, who thinks they're going to be using a

vector database in their data center sometime soon? Yeah. Like is there any AI strategies in your companies that you have to like be concerned about? Is that anybody, no, yeah, right, not half of y'all, cool. All right, let's get started. Uh, that's me, I talked about databases a lot.

Like all the time, no, no, uh, just kidding. Uh, I'm Anthony Nocentino. I'm a senior principal field social architect at Pure. I've been a Microsoft MVP for almost 10 years. I get to work directly, uh, with the Microsoft product team to bring new features to market, uh, and work with their engineers, um, things like performance and functionality.

So pretty cool part of my job. Uh, we're gonna cover obviously two big things today, right? SQL Server 2025, uh, really the kind of the story behind kind of the enterprise ready AI strategy that Microsoft has. We're gonna talk a little bit about security and a lot about performance because like,

I like to make things go fast or really make, make the green lights on my data center blink. As fast as possible. Um, we're gonna talk about how that applies to the technologies that we have as pure storage, really focused on the block side of the house today. Um, we covered Ryan and I in the back there covered some pretty cool object integrations

yesterday. Who was in the session yesterday with me and Ryan? Not that many of y'all, OK, cool. And I'm gonna do that presentation too. So Microsoft has had this strategy called Ground the cloud for a couple of years, right? They um they pushed it into the cloud really

hard initially and now they're kind of figuring out that, you know, this is really hybrid is the way that they're seeing how their customers are going to use databases. And so we see as pure kind of the intersection with what we have kind of maps well with what Microsoft has. Who knew SQL Server runs on Linux?

All right, about half of y'all in containers, anybody? I use a SQL Server container probably every day, uh, because that's kind of my first go to, uh, when I'm testing things and building things, uh, with SQL Server. Uh, Azure Arc, anybody familiar with Azure Arc, right, the kind of that hybrid management plan for Azure to bring kind of the Azure capabilities to other clouds and also to

on-prem. Uh, one of the cool things that we see, uh, from Microsoft and SQL Server 22 is we saw them bring pay as you go licensing to SQL Server, which means you can check the box during installation and burn your Mac wherever you're running SQL Server, whether it's on-prem or in someone else's cloud. Right. And so this I think is a huge enabler going

forward of people making the right decision on where to put put workloads. And with uh more, most recently with Pay as you go, uh, it started in 22, but now they're doing it for all in-market versions of SQL servers. So if you're running a supported version SQL server, you can actually burn your Mac, uh, for your enterprise agreement with Azure, which I think is a fantastic way.

And obviously that maps well to our Evergreen architecture is pure kind of that subscription innovation, kind of give you the new features and capabilities uh of the platform. Uh, Anybody excited about XLR 5? Yeah, yeah, we're gonna have some pretty cool performance metrics here and obviously, Everdeen is kind of the program that helps you kind of adopt those capabilities and

technologies as fast as possible. Uh, one of the big things that we see a lot coming out of Microsoft, specifically for SQL servers to focus on developers, but I'm gonna talk about how this applies to us as storage professionals and DBAs. When Microsoft kind of goes to market with, with SQL Server,

they want that to be the database engine of choice for developers when they make new projects, right? They're competing against Oracle, they're competing against Postgrass and Mongo and all these things. And so you see a lot of iteration and the capabilities are coming into SQL Server for developers to do things. I'm gonna do some pretty cool stuff with

nativeES integrations inside of SQL server today, so you're gonna see that. I'm actually going to coordinate storage activities from inside the database engine. Every storage person just went, don't do that, don't do that, right? Uh and obviously performance, we're gonna talk a little bit about a lot a bit about performance today,

uh, as it applies to kind of modern data pipelines. And one of the stories I do like to tell about developer efficiency, uh, when I was earlier on in my career, I used to write um software for electronic medical records and I had to convert a data type, uh, from a money column to, I forget the exact data type. I converted from one type to another,

and obviously the money data type is a pretty important one when you're building a financial system that supports medical records. And so when I was running that project, uh, it took me about 6 weeks to make that conversion to test like the script that had to move the data between the two columns. And the reason why I took 6 weeks isn't because I'm that bad at code.

It's because every time I ran the script, found a bug, I had to go restore the whole database again to run the script, find the bug and fix that and I iterate over that. Over a couple of days, and that took me a long time to do that. Imagine if I had the ability to just snap, clone, revert instantly to be able to do the

things I need to do. I probably got that done in a day. Luckily, I get paid by the hour and I was a consultant, so that worked out well. The big strategy that we're seeing out of Microsoft inside of SQL Server is Vector search. Vector search really is the way to give you the ability to communicate with the database in a natural language way, right? I could just say,

you know what, where are all the red shirts that are extra large in New York and just pass that as a string in the database and it's gonna do that work and give that back to me. I don't have to type wear extra large product in stock, whatever. TSQL I used to have to write. To get that answer out of a question. In fact,

the demo I did yesterday intentionally had a misspelling in the query that I wrote to highlight the fact that it's gonna be, it's the similarity that matters. And so you can actually get some pretty interesting outcomes driving that. As pure, we spend a lot of time in our lives kind of testing the performance of this. So we're gonna talk a little bit about that later today.

But the idea is, you know, we're gonna have a growth in data inside the database. You're gonna be able to need to deliver that to our end users as fast as possible. And obviously object, um, one of the demos I did yesterday, if you want to watch the recording, is I actually took um about 3 million rows of data. I left the data from 2022 on hot block and I moved all the old stuff out the object,

right? And I had no code changes back to my application because I could present that as a unified set of data back to my application so they don't have to change their actual code. The database engine just does the work for me, retrieving the right data from the right place in the environment. And so that gives me some cost optimization where I can place data,

but also reduces the amount of data that's on the hot block side of the house when I do things like take a backup or build an index. There's less stuff that I have to cultivate inside. That side of the house and kind of add some agility to the database because I can guarantee you all of us have databases with transactions in them from like 1997, right? That mean nothing to the business now,

but they might mean something to the business for an annual report or a quarterly report that we'd hit periodically, right? So that's kind of the use case that we would see there. And side of AI again kind of focusing on that part here. Uh, we're gonna SQL Server 25 brings this kind of this concept of similarity of vector search combined with traditional relational search and

Microsoft has branded this thing hybrid search because you can use both techniques to interact with the data in your database. Building rag patterns or retrieval augmented, this is so hard to say. Can I just say rag patterns rather than the whole acronym? Retrieval Augmented Generation patterns, which really again,

is that natural language way of communicating with the database engine. Uh, you'll hear kind of people use the term vector and maybe embeddings interchangeably when they talk about, uh, vector databases, but an embedding is just a numeric representation of the data that I want to basically perform a machine learning query on or similarity search on. And the reason why it's a numeric

representation is when we come back and kind of compare that to other things in a set of data that I have so I can find the most similar thing, right? So that's a way for us to be able to apply. Uh, a mathematical concept called um cosine similarity to the data to find out the similar things. That's why that misspelling that I talked about

earlier, just works because it knows that it's similar enough. When we look at things like Flash array and Flash array Excel, that's where we're gonna be able to drive the outcomes for the business for generating embeddings at scale, uh, and speed. I was actually talking with Cody Hosterman this morning about how can we apply what we're doing with SQL Server 2025 and cloud Blocks store,

and Ryan talked about this yesterday in our session where I could take, uh, data that's on Flash on-prem, where I might not have GPUs, replicate that to Azure and cloud blocks store, rent some GPUs for a little bit of time, and do the heavy lifting of a vector embedding, uh, at scale. On someone else's GPUs that I don't have to purchase, I could just rent for the duration of

that process. And Microsoft's also taken a strategy of having the models be external to the database, so I think it is core because now I can, I can use different types of models rather than have something that's managed by Microsoft. So I can use OLama on-prem, I can use Open AI in the cloud, I could use chat GPT, Gemini, whatever model I want to use to uh manage the um AI's part of

the database. Any questions or comments team? No, so why is kind of the big one. Like why, why are we gonna do this inside of a relational database? And this is actually the strategy slide I used internally to talk to our leadership about why I think this is gonna work, uh, in our customer base and also for Microsoft.

And there's really 4 big points, uh, kind of the idea behind the fact that we have developers that know TSQL, right? There's not a new thing that they have to learn to go do the things that we want to do from an AI standpoint. They don't have to go learn Milvis or Python or other strategies or other development techniques. Or tool kits to do the work.

They just can apply this to their exact uh pipelines today to get the outcomes that they want from an AI standpoint. SQL Server and also Oracle have very mature enterprise grade availability, right? Who's using availability groups in our data center to protect data, right? Or fail over cluster instances.

And so we can get those very mature HA and DR uh technologies to apply that to these systems, right? Not having to build a bespoke solution for something, uh, that may or may not be, um, let's just say enterprise grade, right? Enterprise grade security is also a big one. When I kind of talked to our internal AI teams about what SQL servers bringing to market,

I talked about low level security and they're like, wait, tell me more about that, or the ability to within a set of data apply uh security policies so I can only see the things that I'm allowed to see. Maybe I can only see the financial data for pure North America, right? Not worldwide because I'm, I don't cover the

whole world. From um a business standpoint, but my manager certainly does, and so he would be able to see all of the data in that table. So the ability to filter is a very powerful construct that our AI teams are very interested in. Obviously encryption, all the things that SQL Server has around TLS and TDE and always encrypt it to protect data can be applied to

these technologies as well, but I think the bottom part is probably the biggest one, the operational readiness of a team to support something they already have, right? SQL Server, Oracle, whatever that is. Uh, in a sort of a platform and I have to learn a new thing or learn new operational techniques to protect a set of data and also kind of the richness of communities and how Microsoft's

moving or tooling forward. I haven't used SSMS in weeks because Microsoft released a new uh VS code extension for SQL Server. I don't even bother using uh SSMS anymore, but my friend's the program manager for that, so I probably shouldn't say that too out loud, right? Cool, but she also runs the BS code extension

too, so there's that. All right, so let's talk about kind of the flow here, like what this really looks like architecturally inside of a system. So if I have a database that's got relational data in it, I want to sprinkle AI on top, this is kind of the pattern that we're gonna see. Uh, we're gonna integrate with the large language models.

a SQL server will communicate with a large language model to generate those embeddings or those vectors and stick them back in the database or the numeric representation of the data I wanna to do natural language search on. I want to write an application where I can have a user come along and just interact with my application in a natural language way, right? Where are all the red shirts in New York that

are extra large, and that's gonna generate an embedding for the prompt or the request that I, uh, passed in my application, and then we're gonna give that back to SQL Server where it's gonna go and find. Which ones are most similar to the prompt that I just passed in, right? So that's kind of the pattern that we're gonna see and then the results that's gonna be given back to the application and I can combine that

with traditional relational search, right, where quantity equals 7 or whatever it is that I need to from an application standpoint. And so let's go and see what that looks like in. SQL server, although it's really weird that the AI solution architect just texted me right now while I'm presenting on AI.

That's weird. Guess what? Yeah, I know. OK. Uh, the decks will be available and the recordings will be available, um, and also, uh, this code is all available, uh, on my website, or not my website. If you go to GitHub, uh, my last name Noentino,

there's a thing called, I, I wrote about a month or two ago called Alamma SQL Fast Start. Uh, there's some infrastructure that you have to stand up to get what I'm about to show you. I have to stand up a large language model. I have to have a TLS certificate between SQL Server and a large language model, and what I want you to do is just be able to hit a button and be able to do what I want. And so this is all implemented in Docker,

uh, where you just type Docker compose up, it starts a SQL server, it restores the database, it starts up a llama, it connects it with TLS, and you don't have to do any of that, and you can just start running this code, right, and kind of see what. Uh, the outcome is that you have, and I blogged about that and my,

my partner in crime at work, Andy Yon wrote a similar implementation completely in PowerShell and Windows because some folks just don't use Docker for some reason, and I just never understood why. So the first thing we do is restore a pretty, a pretty small database uh for for a demo standpoint, and we're going to create this thing it's called an external model.

And that external model is just a, it's a REST API endpoint that allows me to communicate with a large language model and here I'm using Nomic Embed text, which is a, is an embedding model to help map, you know, the words to the numeric representation or the embeddings that I want to stick back in the database. So I'll pass my data into the embedding model.

It'll generate the embedding. I'll stick that back as row data inside the database. Now, the first thing I want to do before I. Go and do this for 100,000 times. I just want to test the plumbing, right? Can I talk to the larger language model?

Can I get an answer? And so what you see here is I'm using uh a new function called AI generate embeddings. And what that is is for the ability for me to pass into uh the AI model, the thing that I want to build an embedding on. I just have test text in there and it's gonna return back to me and embedding again this is just kind of testing the network plumbing before I go and do this 100,000 times.

And embedding again is a numeric representation of the thing that I want to be able to perform natural language search on and so basically we're taking the natural language text and turn it into things machines understand, right? So we have here um 768 floats, right? What that means is this particular large language model that I'm working with is a 768 dimension model and the dimensionality of a

model is defined by the person that built the model, right? I selected this model because it's kind of it's, it's kind of lean to me and it's not. A gigantic multi-terabyte thing that I have to cultivate. It's just a couple of gigabytes. So again, kind of the usability of getting started with this experience. But you can see here I have 768 floats and

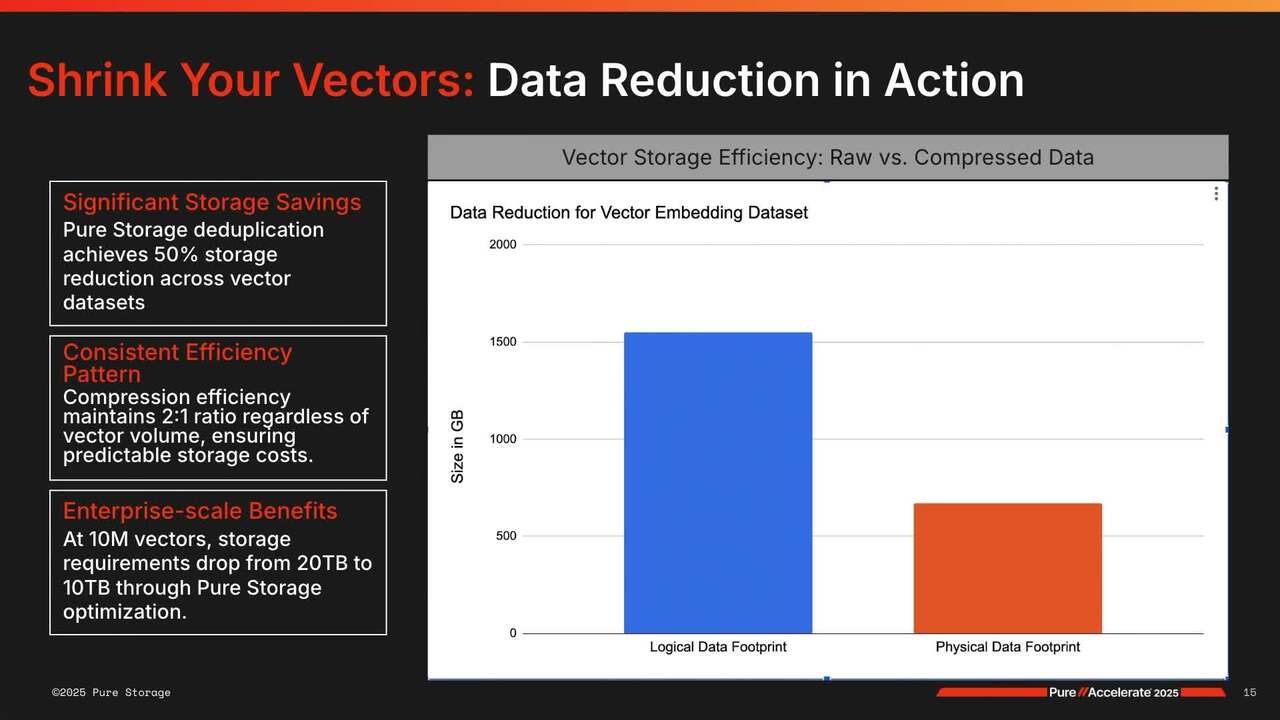

you're like, man, I have a data reducing flash array that generally doesn't do well when it has this kind of high density, uh. Numeric data, actually, we're going to look a little bit later that we do a pretty good job with data reduction, even with uh historically is a diabolical uh workload from a data reduction standpoint. I use that trick to count the number of

comments real fast, so 768. So what this looks like to you or your developers is you're going to add a column to a table that's going to hold the embedding that you want to perform the natural language search on, and I also have the embedding in there too so you can see what that really looks like because it doesn't have to be a singular row.

It can be a collection of rows that describe an object in my database, right? So what I'm going to do here is update. I'm gonna actually add the embeddings. For a couple uh columns that are concatenate the string together that describe products in this database of color, size, description, things like that,

and I'm gonna pass that as a string into the large language model and insert that back into the database. And so this particular implementation is not GPU accelerated, uh, which means it's slow, right? But with, uh, there's only a couple 100 rows in here again. It's just kind of get started with this from a demonstration standpoint.

Cool, 10 seconds, not too bad. And so let's look at this, what it looks like when we start to do this for a larger set of data. And so these are the rows in the table now and you can see all the embeddings inside the system. Uh, when you start to this particular data set, when I scaled it up to about 3 million rows,

uh, this exact model was about 12 gigabytes of additional data in the database. When we look at kind of the, you know, we hear a lot of, let's just say things, uh, where folks say like, Adding to your database is going to blow up the database, make a giant 10x, all these different things. Well, there's really a more nuanced story around that.

Historically, when you went back to a database, I created an index, right, it's really it's a copy of the data in a different order that fulfills the access pattern. The developer wants to retrieve the data, it's a performance optimization. What we're doing, we add vector embeddings to a database is we're gathering the data,

we're physically store it in different ways so that we can access it in a way that we want in a natural language way, right? So we're adding data to the database so we can search it in a different way. So we really want to view it as, you know, we're not gonna blow up the database, but we're adding value to the database. We're adding data to the database so we can get answers out of it in different ways,

right? So here's me doing my first natural language search. Let's go back a little bit, so we can see what it is. So on line 102, the string that I'm gonna pass in, I can't read that anymore, is I'm looking for a safe helmet that doesn't weigh too much.

I'm gonna take that, pass it into the large language model on 103, and then come back and then ask the database for that. Answer. So run that code there and you can see the distance from the previous query, the lower the value, the closer to zero, the more similar the things are.

And now as storage professionals, we want to be concerned about the idea is that's a large table, there's no index on that table. So when I go ask a question to that table, I'm going to read all the rows, right? That's where we get sad as storage professionals because that's going to beat up the front end of our controllers. With um with vectors now we have the ability to create an index,

but it's slightly different than a traditional index in the sense that um it defines a similarity pattern uh and there you can see the kind of type cosine and disk ANN are proximate nearest neighbor. Uh one of the core differences between a traditional index and uh A vector index is kind of this idea of similarity, it's not as deterministic as a traditional non clustered index is.

Who Yeah, so it'll go through and I'll add that index and I come back and do a search and now I'm gonna seek instead of scan, right? So I'm just going to return to rows that qualify for the query and that's what we'd expect our developers and architects to do on the application side of the house when they build the systems. Oh. Any questions or comments to you? Cool.

All right, so. Data reduction. When we look at data reduction, historically, you know, we stuck data into a database in a structured way, right, very well-defined way, and we kind of can figure out like on average, you know, across our whole fleet of arrays, we see about 3.5 to 1 or 4 to 1 depending on the

workload, maybe a little bit higher as you move into the data warehousing. But the unique part about vector indexing is things, there's a couple more knobs to turn than kind of the regular structured data is like the dimensionality of a model. If I have a bigger model that has more than 768 dimensions, maybe 1500 or 4000, that's 4000 floats that I'm going to stick in for each embedding into the database,

right? So now, that's another knob that I have to be concerned about when I talk about how much data is going to be added to the database and also the reducibility of that when Flash array comes along and does its data reduction. Uh, similarly, when we talk about, uh, AI, there's this concept of chunking. If you notice when I built the chunk, I kind of aggregated a couple columns together.

But what if I went in the other direction? What if I have like an 80-page PDF that I wanted to generate an embedding for? I don't want one embedding for all 80 pages. It's not gonna give me a good answer and I go search that PDF for a thing. I might generate. 10 embeddings for each page, that's 1080, 800 embeddings potentially for that one thing that I want to have in the

database, right? So that's another knob that I have to be concerned about when I have to size the system. But for what we looked at in our labs, uh, with that 768 dimension model, uh, adding gigabytes of data to the platform, we saw about 2.5 or 3 to 1 depending on kind of where the data was at kind of in its life cycle, which was honestly a fantastic surprise to me

and to our team. And we also learned that the cosine index is also uh data reducing out as well, which is great, um. Great optimization because now I can have the, it's kind of the best of both worlds, right, add an index or reduce the size of the data, but I'm also going faster back and reporting queries to the application.

Questions, comments, good? Is this too nerdy, nerdy enough? What do you want? So, all right. It is still a desert. So more developer stuff, uh, but we're gonna hijack this and take it over as storage professionals. Uh, Microsoft added, uh, arrest integration so

the database engine itself could communicate directly to arrest endpoint, right? You saw that kind of implementation of that when the, the model is talking to the rest endpoint, which is my Oama large language model, but SQL Server and I talk to an arbitrary rest endpoint. This has been in Azure SQLDB for about 3 years, so it's a pretty mature,

secure implementation. Uh, but they brought it to the database engine. When they released it in DB uh, a couple of years ago, I was like, I misread a document. I was super excited because I thought it was coming on prem because now I can do things like, like orchestrate snapshots inside the database,

which is gonna be pretty cool. So let's go ahead and check that out. So a friend of mine I worked with about a week ago. Sent me a DM. There's a release notes for um our API 2.4.4 and on 2.4.4 uh is the ability to add tags to a protection group snapshot, not the protection group, the snapshot, and then I can replicate that snapshot potentially

to other arrays and the tags go with that snapshot, right? So, uh, I woke up a little too early that day, uh, about 3, 3:30 in the morning. I looked at my phone, unfortunately, I was like, huh, OK, hopped straight into the lab and started writing into code that you're about to see.

So this code's about a week old. Uh, it's using SQL Server 2025. It's using the latest REST N. 244, which is on 687 Purity, and we're going to go and create a snapshot catalog for databases inside of Flasher. Does that sound pretty cool, to build up the drama enough to make that sound sexy,

like Snapshot catalogs and databases, yeah. So we're gonna turn on the feature of being able to talk to an external rest endpoint. So inside the database engine, I'm gonna go log into the flash array. That's the first thing that we're doing right here on line 29. So when I log into the flash ray, best practice is not hard coding your API token in your

string, put that in like a secret store, please, right? Again, the code's only 6 days old or 7 days old. I log into the flash ray. I generate an authentication token. I store the authentication token in my session. Uh, there's a SQL Server 2022 feature called TSQL snapshot Backups,

uh, which is one of my favorite features to use, because now I can take an application consistent snapshot of a database with no external tooling, like no VSS, nothing. Dramatic pause, and it's also rocket fast, I want to show you that here in a second. So the next thing I want to do on 78 is go take a protection group snapshot so that you can see

the rest endpoint, and I have on line 82, I think it's 82, my protection group. That snapshot rest call returns the actual snapshot uh with the the suffix of the number uniquely identifying that snapshot and then I'm gonna take a bunch of data about what we're doing right now like the SQL server name, the type of snapshot it is, the date, uh the database that has snapshot,

and I'm gonna store that in tags. What I'm doing right here is I'm taking what's called a metadata backup, which is really just the backup header that you would see in a traditional like SQL server native dump. And I'm going to stick that in an S3 bucket as part of the system. So now I have this protection group snapshot.

I'm gonna have, I'm gonna apply some tags to it, and I also have a backup header that describes exactly what's in the snapshot. And so here I'm building the tag and strategy of database name, SQL instance name, you're gonna see the uh backup time. The backup name, backup type, and where I stuck that backup header,

and then the tag payload, and I'm gonna go tag that here with the new API call for protection group snapshots tags. Actually have some error handling in there, so I do write somewhat good code every once in a while. So let's run all of this. This entire script takes about 4 seconds to run, uh, it takes a snapshot,

it does the tags, and writes the backup header out, and the IO freeze for that um database when I do the snapshot backup is 10 milliseconds. Which is pretty wild, if you think about that. So now I have an application consistent snapshot of the database, and that's relatively deterministic uh as compared to something like VSS and also doesn't

have the size limitations of VSS. So you can see kind of Microsoft adding the value to uh SQL server that you have, uh. For the snapshot backup, so there I just have some debug output that you can see what happened inside the database. I do find it hysterical that somewhere like uh someone a developer must have missed a thing and it says zero pages written out for the

backup. But if you're on a regular SQL server backup, you'd actually see the page count there. I imagine when you have a snapshot backup, you probably don't want to see zero pages in your backup file. So there's the tag payloads. This is the the URL for the backup file. There's the tag payload.

This is the thing that's stored inside the flash array, right? So all that data they're describing exactly what happened at that point in time. So when this replicates to another flash array, all that data is gonna go with it. And I can come back later and ask any flash array that has that snapshot.

Give me the latest snapshot for this database for that server, not what volume it is, what protection group it is, so I can actually answer that question. Of where that thing lives in my environment. So here is the second part of that. I'm gonna go right here, uh, in a separate session, so I imagine I came back later and I go and I ask on line 63

for my SQL server, go give me from that SQL server for that database, sort all the snapshots descending in time, so the newest, and then give me that snapshot name and then I'm gonna make another round trip to get all the metadata and the tags to go start the back the restore process. Right? And so now we have a backup catalog, no

external tooling, just TSQL in the engine and just flash array, right? I think it's pretty awesome. Is that cool? Yeah. Yeah, so the rest of this is just um some code for me to turn to JSON and the raw data as I retrieve it. So don't worry about that there's some crazy

TSQL gymnastics that have to occur to kind of crack the JSON and extract that all out. And at the bottom here, when I run all that code, you'll see, I'm gonna go ahead and connect. I'm gonna go ask the flasher and give me that snapshot uh for that particular database name. And there it is. So that raw data is actually the JSON tags from

Flash array. I was like, hey, flash array give me these things via rest and there we could see the output of that. And then later on on line 159, I cracked the actual backup header that describes uh where the database is so I can do an application consistent restore of the database. So expect that and the GitHub repo soon somewhere.

So performance, let's get into that a little bit. We're gonna talk about some performance numbers on our 5, but first we're gonna talk about what Microsoft brought to the party in 25 around performance availability and backup. Uh, on around performance, this thing called opportunistic locking is gonna increase the

concurrency into tables. So you know, you ever have this thing where someone comes to you and says the database is down because locking is occurring, right? So Microsoft is attacking that problem to make that less uh frequent due to this thing called lock escalation. Uh, how many of us have ever had to go back to a system or a DBA and say,

hey, your tempDB, your T Drive is just torturing the database, right? We've had or torturing the storage array. Well, historically we could handle uh IO governance, I guess you could say resource governance for user databases, but now we can do it for TEMTB, which means we have a query kind of go a little wild to dumps a bunch of data into TETB,

uh, we can actually kind of put some boundaries around that and not have to handle that in storage. We can actually handle that inside the application side of the house. Uh, Microsoft is also investing a bunch in availability in terms of uh faster data synchronization. They added some kind of knobs for us to turn to be more efficient how things move between

availability group replicas, uh, in terms of flow control, and also be able to, they added some value around, um, backing up off of secondaries, which, which I have never been a fan of. Um, conceptually it makes kind of sense, maybe I want to back up off of a secondary replica. But I want to back up the data where the data is changing,

not a copy of the data where replication might have broken, uh, or had some lag in the backup data that may or may not be the latest and greatest copy of a thing. Even in sync replica AGs you can get a little out of sync, but this one came out, um, right, this is a kind of a last minute push across the goal line for Microsoft, this thing called Zan backup compression.

Uh, historically, especially in devices like Flash Blade, where we have a really high throughput rates, when we do our database restores, the CPUs on the SQL server are the bottleneck for the restore, right? And so Microsoft solved that problem, and they took a backup algorithm that actually Facebook wrote and applied that gave that to us

as a, as an option for backward compression. So you can still use the regular compression that you see today called MS Express, but you have this thing called Zan, and we also have some knobs to turn on compressibility, which means we can control how much CPU we can dedicate to backward compression. Because also when you do backward compression.

You burn it CPU cycles on the host side, which means your workload might slow down. Then the other side of that is when you restore your uh bottleneck potentially impacts your recovery objectives for your database. Uh, on the application side of the house when we see on the performance for performance, we're looking at kind of the for SQL Server 25, we're going to compare R1 and R5.

Um, R1, or XLR1 pretty well, it's a pretty fast box, but it got a little long in the tooth, and we got our hands on a, on a, on a brand new XL a little earlier this year and we ran the TP Troxy benchmark, which is really TPCC against R5 and quite literally for that workload, SQL server is twice as fast as the previous generation XL. What's really remarkable is in a 5 rack unit device, uh,

we eclipsed a million IOPs for this workload. This workload kind of amortized around 90, which sounds kind of weird, but the uh the TP TPCC or Troxy benchmark is a is a transaction workload, so it's really low, uh, kind of, uh, in terms of IO size. Traditionally, I'd see most OTP workloads on our customer base land around 30, but we wanted to run an industry standard benchmark.

So 9K9KIO's landed us at about a million IOPs and a 5 rack unit device, kind of scale that to like 4K, you're looking at about 2.2 million IOPs in 5 rack units, which I think is just borderline comical, right? So big fun there we got those numbers out. The other thing that we observed was when we pushed this flash array to its limit, a 100% CPU load,

we didn't really see any variance in uh in latency. They kind of have that hockey stick when the array hits 100% load. We actually at 100% load or right at about 1.1 milliseconds for 8 SQL servers running these tests concurrently. So we're driving a pretty comical amount of performance out of the platform.

And obviously I wouldn't run it at 100% load all the time. I'd probably size it correctly. But we wanted to kind of take the system to to an extreme. Uh, Yi Xiao and I are going to cover this in much more detail on a session this afternoon around the performance for XLR 5.

So let's talk about backups. So I have some pretty cool demos around backups. You're like, how can you make demos about backups cool? Watch me. All right, so one of the things I do get to do a lot with our customers, help design POCs for pretty extreme workloads.

And well, this is an extreme workload, it's like a 4 gig database because I got demos. Uh, so this one, I also have some sins, uh, behind the scenes in terms of resource constraints intentionally because I want to really highlight the value of what the compression algorithms bringing. But when I get involved in POCs, the first thing I do with customers and we talk about

backup and restore, is I actually do what's called a backup to null. And what this means is I'm just going to read the data off the disk as fast as possible and throw it away. So now I know kind of the high water mark of the system, like how fast I can pull the pages off of whatever the primary storage device is. Hope it's a flash rate. So you can see, and I'm also doing that with no

compression because I don't want any bottleneck from a CPU standpoint, but what I do want to show you as this video starts playing. Is the impact of the current compression algorithm on throughput, OK, so I run that first backup with no compression and I'm throwing the bytes out and I, I landed about 2700 megabytes per second, so big B,

right? Cool. Now I'm gonna do, I'm gonna use the legacy compression algorithm called MS Express. Now watch the impact of just turning on compression for a backup. No rights are happening right now, right? More than half as fast, or less than half as fast.

It's a pretty dramatic impact for throughput, right? Cool. Now, let's look at Z standard. Z standard also gives us the ability to have a couple of knobs. The default compression, uh, compressibility is low. Let's go ahead and run that first one.

We're almost back to as fast as the original one where we just threw it out with no compression at all, right? So it's 27 before, now it's 21, so we're a lot better off than MS Express. In fact, more than twice as fast, right? So that's a pretty dramatic improvement in terms of performance. So it's got to write a backup into a flash

blade. Like I said, there's some sins under the covers in terms of IO intentionally, you're going to see a less than dramatic number here in a second, but with no compression as a right and a flash blade, it's gonna land at about 400 megabytes per second. Man, that's not great.

We're gonna make that better. So MS Express, so now that was no compression, let's back up with compression. This is the kind of the CPU bound algorithm, 692. This is an effective data rate, how fast I was able to pick up, compress and ship. So that's why you see that go back up.

The standard low. 1192, so we're getting some pretty good numbers there. Now I'm gonna kind of turn up the compressibility. We're gonna do a medium and a uh and a high uh back up here in a second. I really do like running backup low test because you can really drive some pretty

comical things inside a SQL server. You can also adjust the IO size, so I actually use it sometimes as a low test because I can ship variant sized IOs. Alright, so that's the high compression run it. So now I have a set of backups for both compression algorithms and low, medium high, and we're gonna look at kind of the output here. So really low throughput there at 293 megabytes per second,

but that's the most compressibility for the system. So that'd be good for like an archiving scenario. And so let's ask SQL Server like what it did for all of those backups. And so we have here on the bottom the compression percentage, so basically how compressed it was and how long it took.

So with no compression, obviously no compression occurred 4 gigabytes. MS Express got us about 42%. This is where we're giving back a little bit. So Z standard low is gonna give me less compressibility but faster backups. So it's a knob we have to turn there, so 30%.

When I go to Z standard medium, the compressibility is kind of in the same universe as MS Express, but the backup's slower, right? So maybe that's not great. And then also the standard high, really, I don't get much more out of the compressibility from low to high, and it takes a lot longer. Your mileage will vary. Like this is, this isn't like the actual data

that you're going to get in your system, it's gonna depend on the compressibility of the data that you have and also your infrastructure. So go and do these tests if you want to use this feature. But I'll tell you this, nobody cares about backup, right? What they care about is restores, right? All that was fine,

but the real value of this compression algorithm shows up now, right? Cool. So let's do a restore with no compression. So a lot of our really high performance low RPO customers are low, 30 years, I still mix it up. RTO customers. Don't use backup compression because CPU bound on the resource,

so we'll throw flash blades at the party so we can just slam that data back into the source system as fast as possible. But this really changes the game in terms of what we have from an outcome standpoint. So no compression restore, let's check it out. 1800. Z standard low or no MS Express restore.

800, that's not great, right? Go back to your uh your IT leadership and say that's twice as slow. Now, the standard, almost as fast as no compression, just shy of 1800, right? So pretty dramatic outcome there in terms of the maintainability of the system.

Did I make backups at least sexy for a minute there? Yeah, watch a paint dry. Cool. All right, well, actually we're at the end with about 6 minutes left. I think kind of the big part, kind of the AIness of things is, is, is really dramatic shift in what Microsoft brought the market,

right? Bringing AI to a relational database and the value of that without a lot of startup costs, right? I can just go and do these things inside of my database, uh, just by simply upgrading, you know, some of the security features that we talked about and honestly, developer side in terms of REST and TSQL.

I did, I left off the um There's part about uh Red Jack is also in there inside of SQL Server 2025 as well. Uh, I was one of those folks that wrote a Regja uh pipeline thing years ago in .NET and stuck it in there as a .NET CLR procedure. I can get rid of those kind of things from the system and kind of the bigger picture around kind of the overall performance that Microsoft brought to the party in terms of uh flash array.

Cool. Any questions or comments team? What's up? Uh, go back two seconds back up scenarios there. Right. That's, that's your life today. It's one or the other, right? Yeah, it seems like almost, yeah, medium or high, you know, you talk about the kn you got a tur there's body specific use

space to go. Yeah. Yeah, almost a no brainer, yeah, yeah, because the restore time is just comically fast. So, cool. All right, thank you, everybody. I'll hang out for questions though.

We Also Recommend...

Personalize for Me